Dmitry Krotov | Modern Hopfield Networks for Novel Transformer Architectures


- Added 9 May 2023

- New Technologies in Mathematics Seminar

Speaker: Dmitry Krotov, IBM Research - Cambridge

Title: Modern Hopfield Networks for Novel Transformer Architectures

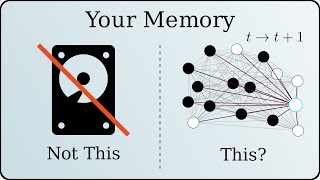

Abstract: Modern Hopfield Networks, or Dense Associative Memories, are recurrent neural networks with fixed-point attractor states that are described by an energy function. In contrast to conventional Hopfield Networks, which were popular in the 1980s, their modern versions have a very large memory storage capacity, which makes them appealing tools for many problems in machine learning, cognitive science and neuroscience. In this talk, I will introduce the intuition behind this class of models and its mathematical formulation, and will give examples of problems in AI that can be tackled using these new ideas. In particular, I will introduce an architecture called Energy Transformer, which replaces the conventional attention mechanism with a recurrent Dense Associative Memory model. I will explain the theoretical principles behind this architectural choice and show promising empirical results on challenging computer vision and graph network tasks.

- Category: Science & Technology
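The energy-based retrieval described in the abstract can be illustrated with a minimal sketch. It assumes binary ±1 neurons, an energy E = -Σ_μ F(ξ_μ · σ) summed over stored memories ξ_μ, and a polynomial interaction function F(x) = x³, which is one possible choice for Dense Associative Memories. The exponent, the toy memories and the function names are illustrative assumptions, not taken from the talk. Each asynchronous update sets one neuron to whichever sign gives the lower energy, with the others held fixed, so the energy never increases and the state settles into a fixed-point attractor.

```python
def energy(state, memories, n=3):
    # Dense Associative Memory energy: E = -sum_mu F(xi_mu . sigma), with F(x) = x**n
    return -sum(sum(x * s for x, s in zip(mem, state)) ** n for mem in memories)


def retrieve(state, memories, n=3, sweeps=3):
    # Asynchronous updates: each neuron takes the sign (+1 or -1) that gives the
    # lower energy with all other neurons held fixed, so E never increases.
    state = list(state)
    for _ in range(sweeps):
        for i in range(len(state)):
            plus = state[:i] + [1] + state[i + 1:]
            minus = state[:i] + [-1] + state[i + 1:]
            state[i] = 1 if energy(plus, memories, n) <= energy(minus, memories, n) else -1
    return state


# Three mutually orthogonal +/-1 memories of length 8 (toy data).
MEMORIES = [
    [1, 1, 1, 1, 1, 1, 1, 1],
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, -1, -1, 1, 1, -1, -1],
]

corrupted = [1, 1, 1, 1, 1, 1, 1, -1]  # first memory with its last bit flipped
recovered = retrieve(corrupted, MEMORIES)
print(recovered == MEMORIES[0], energy(corrupted, MEMORIES), energy(recovered, MEMORIES))
# prints: True -232 -512
```

The corrupted pattern falls into the basin of the first memory, and retrieval lowers the energy from -232 to -512.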

Excellent talk, very interesting developments with the Energy Transformer.

Thanks for sharing.

Only geniuses realize the interconnectedness between Hopfield Networks and neural network Transformer models, and later neural network Cognitive Transmission models.

Ngl, this was pretty confusing.

For one, the two energy formulae at 12:32 are only equivalent if i = j, i.e. if the contribution of each feature neuron is evaluated independently. The second formula can be intuitively understood as measuring how closely the state vector's shape in the latent space matches the shape of each memory, but the first formula is harder to conceptualise, and the talk never explains how the first formula can in practice be reduced to the second (i.e. why ignoring the interdependencies between the feature neurons in the energy formula makes no practical difference).

Secondly, without an update rule or at least a labelled HLA diagram, it was really hard to visualise the mechanics of the network; I had to pause the video and google the update rule to understand how dense Hopfield networks are even supposed to work. Dmitry did make the very vague statement that "the evolution of the state vector" is somehow described by the attention function, but he didn't explain how (is it the update rule? A change vector? Something else? What does V correspond to?), which was pretty frustrating. For anyone watching: the attention function is the update rule, with V a linear transform of K; the attention output is substituted back in for Q, and the formula is applied recursively.
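The recursive use of attention described in this comment can be sketched as a small loop. This assumes the continuous modern Hopfield update, in which the state ξ is replaced by Σ_μ softmax_μ(β ξ · x_μ) x_μ over the stored patterns x_μ. That is single-query attention with Q = ξ and K = the stored patterns, taking the simplest case of the linear transform mentioned above, V = K. The inverse temperature β, the toy patterns and the helper names are illustrative assumptions, not values from the talk.

```python
import math


def softmax(xs):
    # Numerically stable softmax: subtract the max before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_step(query, keys, values, beta):
    # Single-query attention: weights = softmax(beta * <query, key_mu>),
    # output = sum_mu weights[mu] * values[mu].
    scores = [beta * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(values[0]))]


def recursive_attention(query, keys, beta=2.0, steps=3):
    # Hypothetical helper: take V = K (identity as the linear map from K to V)
    # and feed each attention output back in as the next query.
    state = list(query)
    for _ in range(steps):
        state = attention_step(state, keys, keys, beta)
    return state


# Stored patterns (the keys) and a noisy query close to the first one.
KEYS = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, -1, -1, 1, 1, -1, -1],
]
noisy = [1, 1, 1, -1, -1, -1, -1, -1]  # fourth entry flipped

result = recursive_attention(noisy, KEYS)
print([round(x, 3) for x in result])
```

With a larger β the softmax is sharper and the output snaps to the nearest stored pattern in fewer steps; with a small β the iteration can settle on a blend of several patterns instead.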

In general, I think more high-level explanations, especially within a consistent framework, would have been very helpful.

Regarding your first point: that is not true, because the square of a sum is not the sum of the squares. The cross terms are what give you the interdependence between neurons.
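The reply's point can be checked numerically. This is a sketch under an assumption, since the formulae at 12:32 are not reproduced on this page: suppose the two expressions are the pairwise form E = -Σ_i Σ_j T_ij σ_i σ_j with Hebbian weights T_ij = Σ_μ ξ_μi ξ_μj, and the memory form E = -Σ_μ (Σ_i ξ_μi σ_i)², i.e. F(x) = x². Expanding the square gives Σ_μ Σ_i Σ_j ξ_μi ξ_μj σ_i σ_j, so the two agree for every state, cross terms (i ≠ j) included. Keeping only the i = j terms (the sum of squares) collapses the energy to a constant for ±1 neurons, which is why the cross terms cannot be dropped. The function names and toy memories are illustrative.

```python
import itertools


def overlap_energy(state, memories):
    # "Sum over memories" form: E = -sum_mu (sum_i xi_mu_i * sigma_i)**2
    return -sum(sum(x * s for x, s in zip(mem, state)) ** 2 for mem in memories)


def pairwise_energy(state, memories):
    # Pairwise form: E = -sum_{i,j} T_ij * sigma_i * sigma_j,
    # with Hebbian weights T_ij = sum_mu xi_mu_i * xi_mu_j (cross terms i != j included)
    n = len(state)
    total = 0
    for i in range(n):
        for j in range(n):
            t_ij = sum(mem[i] * mem[j] for mem in memories)
            total += t_ij * state[i] * state[j]
    return -total


def diagonal_only_energy(state, memories):
    # Keeps only the i == j terms (sum of squares), i.e. drops every cross term
    return -sum(sum((x * s) ** 2 for x, s in zip(mem, state)) for mem in memories)


MEMORIES = [[1, -1, 1, 1], [-1, -1, 1, -1]]  # toy +/-1 memories

# The two full forms agree on all 16 states of four +/-1 neurons.
for state in itertools.product([-1, 1], repeat=4):
    assert overlap_energy(state, MEMORIES) == pairwise_energy(state, MEMORIES)

# The diagonal-only version does not: for +/-1 neurons it is the same constant for every state.
print(overlap_energy([1, 1, 1, -1], MEMORIES), diagonal_only_energy([1, 1, 1, -1], MEMORIES))
# prints: 0 -8
```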