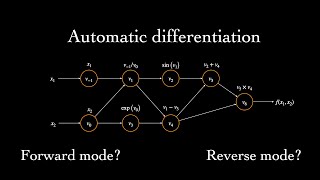

L6.2 Understanding Automatic Differentiation via Computation Graphs


- Added 15 Feb 2021

- As previously mentioned, PyTorch can compute gradients automatically for us. To do that, it records the operations of the forward pass in a computation graph. When it is time to compute the gradient, it moves backward along this graph, applying the chain rule at each node. Computation graphs are also a helpful concept for learning how differentiation (computing partial derivatives and gradients) works, which is what we do in this video.
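The idea of recording a graph in the forward pass and walking it backward can be sketched in plain Python. This is a minimal illustration under simplifying assumptions (scalars only, just `+`, `*`, and a sigmoid); it is not how PyTorch actually implements autograd, and the `Node` class and `backward` function are hypothetical names chosen for this sketch.

```python
import math

class Node:
    """A scalar value in a hypothetical computation graph."""
    def __init__(self, value, parents=()):
        self.value = value
        # Each parent is stored with the local derivative d(self)/d(parent).
        self.parents = parents
        self.grad = 0.0

    def __add__(self, other):
        # d(a + b)/da = 1, d(a + b)/db = 1
        return Node(self.value + other.value, [(self, 1.0), (other, 1.0)])

    def __mul__(self, other):
        # d(a * b)/da = b, d(a * b)/db = a
        return Node(self.value * other.value,
                    [(self, other.value), (other, self.value)])

def sigmoid(node):
    s = 1.0 / (1.0 + math.exp(-node.value))
    # d sigmoid(z)/dz = sigmoid(z) * (1 - sigmoid(z))
    return Node(s, [(node, s * (1.0 - s))])

def backward(output):
    # Depth-first search to order nodes so every node comes after its inputs.
    order, seen = [], set()
    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)
    visit(output)

    # Walk the graph backward: chain rule = upstream grad * local derivative.
    output.grad = 1.0
    for node in reversed(order):
        for parent, local_grad in node.parents:
            parent.grad += node.grad * local_grad

# Forward pass of a single sigmoid neuron: a = sigmoid(w * x + b)
w, x, b = Node(2.0), Node(3.0), Node(-5.0)
a = sigmoid(w * x + b)
backward(a)
print(w.grad, x.grad, b.grad)  # a(1 - a) * x, a(1 - a) * w, a(1 - a)
```

Because `backward` accumulates with `+=`, a node that is used in several places (for example `x * x`) receives the sum of the contributions from every path through the graph.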

Slides: sebastianraschka.com/pdf/lect...

-------

This video is part of my Introduction to Deep Learning course.

Next video: • L6.3 Automatic Differe...

The complete playlist: • Intro to Deep Learning...

A handy overview page with links to the materials: sebastianraschka.com/blog/202...

-------

If you want to be notified about future videos, please consider subscribing to my channel: / sebastianraschka - Science & Technology

Thank you very much for this simplified explanation. I had been struggling to understand it until I found this masterpiece.

Nice, glad to hear that this was useful!!

Thaaaank you, finally I understand this perfectly (& can now repeat it for myself).

When explaining backpropagation, my lovely profs always said "then this is just the chain rule" & skipped any explanation.

For calculating (complicated) toy examples I knew the chain rule, but in the backprop context it was just too confusing.

Anyway, I have a question: at 12:23 you said that, technically, canceling the delta terms is not allowed. Could you elaborate on the math behind why, or point me to some resource explaining this?

Intuitively, I always thought canceling deltas was strange/informal, but I never figured out how this delta notation fits into "normal" math notation :)

Nice, I am really glad to hear that! And yes, I totally agree. When I first learned it, it was also very confusing at first because the prof tried to brush it aside ("it's just calculus and the chain rule") just like you described!

Love your textbooks and your videos. Thank you!

thanks for pulling up this video.

Thank you very much!

YOU ARE GOATED!

17:27 In the formula on the top left, as I understood it, there is no sum but rather stacking (or concatenation). So why should we add the results from the different paths during the backward chain-rule computation? Does it always work like this, i.e., do we just sum in the chain whenever there is a concatenation?

Sorry if this was misleading. In the upper left corner, this was more like a function notation to highlight the function arguments. If you have a function L that computes x^2 + y^2, it's basically like writing L(x, y) = x^2 + y^2. There is no concatenation. With the square brackets I meant to show that sigma_3 also contains function arguments. I just used square brackets (instead of round brackets) so it is easier to read, but now I can see how this can be confusing.

@SebastianRaschka Thank you very much.
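The reason the contributions from different paths are added is the multivariable chain rule: if L depends on x through several intermediate variables, then dL/dx is the sum of one chain-rule product per path. A small numeric check, assuming a made-up function (not the one from the slides) where x reaches L through u = x^2 and v = 3x, with L(u, v) = u * v, so that L = 3x^3 overall:

```python
def L_of_x(x):
    # Hypothetical example: x feeds into L through two paths, u and v
    u = x ** 2
    v = 3.0 * x
    return u * v  # L(u, v) = u * v, i.e. L = 3 * x**3

def chain_rule_grad(x):
    u, v = x ** 2, 3.0 * x
    dL_du, dL_dv = v, u          # partial derivatives of L(u, v) = u * v
    du_dx, dv_dx = 2.0 * x, 3.0  # local derivatives along each path
    # Multivariable chain rule: dL/dx = dL/du * du/dx + dL/dv * dv/dx
    return dL_du * du_dx + dL_dv * dv_dx

def finite_difference(f, x, h=1e-6):
    # Central-difference approximation of df/dx for comparison
    return (f(x + h) - f(x - h)) / (2.0 * h)

x = 2.0
print(chain_rule_grad(x))            # 36.0, which equals 9 * x**2
print(finite_difference(L_of_x, x))  # approximately 36.0
```

Keeping only one of the two path terms would give 24.0 or 12.0 here, so both contributions have to be summed to match the numerical derivative.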

At 11:27 it gets confusing because you switch the terms around. Otherwise, very nice video.