Deep Dive on PyTorch Quantization - Chris Gottbrath

- Added 26 June 2024

- Learn more: pytorch.org/docs/stable/quant...

It’s important to make efficient use of both server-side and on-device compute resources when developing machine learning applications. To support more efficient deployment on servers and edge devices, PyTorch added support for model quantization using the familiar eager mode Python API.

Quantization leverages 8-bit integer (int8) instructions to reduce model size and run inference faster (lower latency). It can determine whether a model meets its quality-of-service goals, or even fits into the resources available on a mobile device. Even when resources aren’t so constrained, it may enable you to deploy a larger and more accurate model. Quantization is available in PyTorch starting in version 1.3. With the release of PyTorch 1.4, we published quantized models for ResNet, ResNeXt, MobileNetV2, GoogLeNet, InceptionV3 and ShuffleNetV2 in the torchvision 0.5 library.
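The following is a minimal sketch of where the 4x size reduction comes from. It assumes a recent PyTorch build. A float32 tensor stored as `torch.qint8` takes one byte per element instead of four, and each value comes back within half a quantization step. The random tensor and the symmetric scale choice are illustrative assumptions, not how any particular published model was quantized.

```python
import torch

torch.manual_seed(0)
w = torch.randn(256, 256)  # random float32 stand-in for a layer's weights

# Symmetric per-tensor scale (an assumption for this sketch): the largest |w| maps to 127.
scale = w.abs().max().item() / 127
qw = torch.quantize_per_tensor(w, scale=scale, zero_point=0, dtype=torch.qint8)

float_bytes = w.numel() * w.element_size()  # 4 bytes per float32 element
int_repr = qw.int_repr()                    # the underlying int8 values
int8_bytes = int_repr.numel() * int_repr.element_size()  # 1 byte per element

# Rounding error per element is at most half a quantization step.
max_err = (w - qw.dequantize()).abs().max().item()
print(f"{float_bytes} -> {int8_bytes} bytes, max error {max_err:.4f} (scale {scale:.4f})")
```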

*My takeaways:*

*0. Outline of this talk (0:51)*

*1. Motivation (1:42)*

- DNNs are very computationally intensive

- Datacenter power consumption is doubling every year

- The number of edge devices is growing fast, and many of them are resource-constrained

*2. Quantization basics (5:27)*

*3. PyTorch quantization (10:54)*

*3.1 Workflows (17:21)*

*3.2 Post-training dynamic quantization (21:31)*

- Quantize weights ahead of time

- Quantize activations at runtime, choosing their scale factors on the fly

- No extra data is required

- Suitable for LSTMs/transformers and for MLPs with small batch sizes

- 2x faster compute, 4x less memory

- Easy to do: a one-line API
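The dynamic workflow above might look like the minimal sketch below. It assumes PyTorch 1.10 or later, where the function lives at `torch.ao.quantization.quantize_dynamic`. Older releases, including those covered in the talk, expose it as `torch.quantization.quantize_dynamic`. `TinyMLP` and `serialized_size` are hypothetical names. The engine line just picks fbgemm on x86 and falls back to qnnpack elsewhere.

```python
import io

import torch
import torch.nn as nn
from torch.ao.quantization import quantize_dynamic  # torch.quantization.quantize_dynamic in older releases

# Use fbgemm on x86 if available, otherwise qnnpack (Arm).
engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine


class TinyMLP(nn.Module):
    """Hypothetical model; any module containing nn.Linear layers is handled the same way."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(256, 256)
        self.relu = nn.ReLU()
        self.fc2 = nn.Linear(256, 10)

    def forward(self, x):
        return self.fc2(self.relu(self.fc1(x)))


def serialized_size(model):
    """Bytes taken by the model's state_dict when saved with torch.save."""
    buffer = io.BytesIO()
    torch.save(model.state_dict(), buffer)
    return len(buffer.getvalue())


float_model = TinyMLP().eval()
# The one-line call: weights of the listed module types are converted to int8 now;
# activation scales are computed on the fly for each input at inference time.
quantized_model = quantize_dynamic(float_model, {nn.Linear, nn.LSTM}, dtype=torch.qint8)

out = quantized_model(torch.randn(4, 256))  # plain float input, no calibration step
print(serialized_size(float_model), "->", serialized_size(quantized_model), "bytes")
```

`quantize_dynamic` returns a converted copy by default, so `float_model` stays usable for accuracy comparisons.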

*3.3 Post-training static quantization (23:57)*

- Quantize both weights and activations ahead of time

- Extra data is needed for calibration (i.e., to find the activation scale factors)

- Suitable for CNNs

- 1.5-2x faster compute, 4x less memory

- Steps:
  1. Modify the model (25:55)
  2. Prepare and calibrate (27:45)
  3. Convert (31:34)
  4. Deploy (32:59)
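The four static-quantization steps can be sketched in eager mode as follows. This assumes PyTorch 1.10 or later (`torch.ao.quantization`; older releases use `torch.quantization`). `SmallCNN` is a hypothetical model, random tensors stand in for real calibration data, and TorchScript serialization is only one possible deployment route.

```python
import io

import torch
import torch.nn as nn
from torch.ao.quantization import (
    DeQuantStub,
    QuantStub,
    convert,
    fuse_modules,
    get_default_qconfig,
    prepare,
)

# Use fbgemm on x86 if available, otherwise qnnpack (Arm).
engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine


class SmallCNN(nn.Module):
    """Hypothetical model; step 1 (modify model) adds QuantStub/DeQuantStub."""

    def __init__(self):
        super().__init__()
        self.quant = QuantStub()      # float -> int8 at the model input
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.bn = nn.BatchNorm2d(8)
        self.relu = nn.ReLU()
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(8, 10)
        self.dequant = DeQuantStub()  # int8 -> float at the model output

    def forward(self, x):
        x = self.quant(x)
        x = self.relu(self.bn(self.conv(x)))
        x = torch.flatten(self.pool(x), 1)
        x = self.fc(x)
        return self.dequant(x)


# Step 1 (cont.): fuse conv + bn + relu so they run as one int8 kernel (needs eval mode).
model = fuse_modules(SmallCNN().eval(), [["conv", "bn", "relu"]])

# Step 2: attach a qconfig, insert observers, and calibrate on representative data.
model.qconfig = get_default_qconfig(engine)
prepared = prepare(model)
with torch.no_grad():
    for _ in range(16):
        prepared(torch.randn(8, 3, 32, 32))  # random stand-in for real calibration batches

# Step 3: convert observed modules to their quantized counterparts.
quantized = convert(prepared)

# Step 4: one deployment option is TorchScript serialization.
buffer = io.BytesIO()
torch.jit.save(torch.jit.script(quantized), buffer)

out = quantized(torch.randn(2, 3, 32, 32))  # float in, float out; the stubs handle conversion
print(type(quantized.conv).__name__, out.shape)
```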

*3.4 Quantization-aware training (34:00)*

- Make the weights "more quantizable" by simulating quantization during training or fine-tuning

- Steps:
  1. Modify the model (36:43)
  2. Prepare and train (37:28)
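A matching QAT sketch follows, under the same assumptions: PyTorch 1.10+, a hypothetical `SmallCNN`, and random data in place of a real training set. BatchNorm is left out to keep it short. Fusing conv + bn for QAT uses a training-mode fusion path (`fuse_modules_qat` in recent releases) rather than the eval-mode `fuse_modules` call.

```python
import torch
import torch.nn as nn
from torch.ao.quantization import (
    DeQuantStub,
    QuantStub,
    convert,
    get_default_qat_qconfig,
    prepare_qat,
)

# Use fbgemm on x86 if available, otherwise qnnpack (Arm).
engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine
torch.manual_seed(0)


class SmallCNN(nn.Module):
    """Hypothetical model (no BatchNorm, to keep the sketch short)."""

    def __init__(self):
        super().__init__()
        self.quant = QuantStub()
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.relu = nn.ReLU()
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(8, 10)
        self.dequant = DeQuantStub()

    def forward(self, x):
        x = self.quant(x)
        x = torch.flatten(self.pool(self.relu(self.conv(x))), 1)
        return self.dequant(self.fc(x))


# Step 1: modify the model (stubs above) and attach a QAT qconfig.
model = SmallCNN().train()
model.qconfig = get_default_qat_qconfig(engine)

# Step 2: prepare_qat swaps in fake-quantize modules; then train as usual.
qat_model = prepare_qat(model)
optimizer = torch.optim.SGD(qat_model.parameters(), lr=0.01)
loss_fn = nn.CrossEntropyLoss()
for _ in range(10):  # a few steps on random data, standing in for real fine-tuning
    x, y = torch.randn(8, 3, 32, 32), torch.randint(0, 10, (8,))
    optimizer.zero_grad()
    loss = loss_fn(qat_model(x), y)
    loss.backward()
    optimizer.step()

# Afterwards, convert the fake-quantized model to a real int8 model for inference.
quantized = convert(qat_model.eval())
out = quantized(torch.randn(2, 3, 32, 32))
```

During training the forward pass still runs in float, but weights and activations are rounded to the int8 grid, so the optimizer sees the quantization error.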

*3.5 Example models (39:26)*

*4. New in PyTorch 1.6*

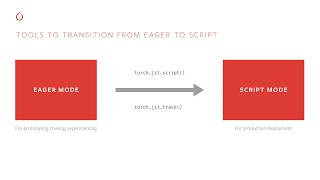

*4.1 Graph mode quantization (45:14)*
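For comparison, here is a graph-mode sketch, with one swap to note. The graph mode presented for PyTorch 1.6 was a TorchScript-based prototype. This sketch instead uses the later FX graph mode API (`prepare_fx`/`convert_fx`), assuming PyTorch 1.13+ for `get_default_qconfig_mapping`. The point it illustrates is the same: no manual stubs or fusion lists are needed, because tracing the model finds the patterns. `SmallCNN` is hypothetical.

```python
import torch
import torch.nn as nn
from torch.ao.quantization import get_default_qconfig_mapping
from torch.ao.quantization.quantize_fx import convert_fx, prepare_fx

# Use fbgemm on x86 if available, otherwise qnnpack (Arm).
engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine


class SmallCNN(nn.Module):
    """Hypothetical plain float model: no stubs and no manual fusion needed."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.bn = nn.BatchNorm2d(8)
        self.relu = nn.ReLU()
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(8, 10)

    def forward(self, x):
        x = self.relu(self.bn(self.conv(x)))
        return self.fc(torch.flatten(self.pool(x), 1))


model = SmallCNN().eval()
example_inputs = (torch.randn(1, 3, 32, 32),)
# Symbolic tracing finds the conv-bn-relu pattern, fuses it, and inserts observers.
prepared = prepare_fx(model, get_default_qconfig_mapping(engine), example_inputs)
with torch.no_grad():
    for _ in range(16):
        prepared(torch.randn(8, 3, 32, 32))  # random stand-in for calibration data
quantized = convert_fx(prepared)
out = quantized(torch.randn(2, 3, 32, 32))
```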

*4.2 Numeric Suite (48:17)*

- Tools to help debug accuracy drops caused by quantization, layer by layer
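Below is a sketch of a layer-by-layer weight check with the Numeric Suite. It assumes PyTorch 1.10+, where the suite is importable as the private module `torch.ao.ns._numeric_suite`. Its `compare_weights` function pairs float and quantized weights by name. `SmallCNN` and the `sqnr_db` helper are hypothetical. SQNR (signal-to-quantization-noise ratio, in dB) is one common error metric, and a low value flags a layer worth investigating.

```python
import torch
import torch.ao.ns._numeric_suite as ns  # private module; torch.quantization._numeric_suite in older releases
import torch.nn as nn
from torch.ao.quantization import DeQuantStub, QuantStub, convert, get_default_qconfig, prepare

# Use fbgemm on x86 if available, otherwise qnnpack (Arm).
engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine
torch.manual_seed(0)


class SmallCNN(nn.Module):
    """Hypothetical model used only to produce a float/quantized pair."""

    def __init__(self):
        super().__init__()
        self.quant = QuantStub()
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.relu = nn.ReLU()
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(8, 10)
        self.dequant = DeQuantStub()

    def forward(self, x):
        x = self.quant(x)
        x = torch.flatten(self.pool(self.relu(self.conv(x))), 1)
        return self.dequant(self.fc(x))


def sqnr_db(x, y):
    """Hypothetical helper: signal-to-quantization-noise ratio in dB (higher is better)."""
    return (20 * torch.log10(x.norm() / (x - y).norm())).item()


float_model = SmallCNN().eval()
float_model.qconfig = get_default_qconfig(engine)
prepared = prepare(float_model)  # returns a copy; float_model keeps its float weights
with torch.no_grad():
    for _ in range(8):
        prepared(torch.randn(4, 3, 32, 32))  # random stand-in for calibration data
quantized = convert(prepared)

# Pair each float weight with its quantized counterpart, then score layer by layer.
wt = ns.compare_weights(float_model.state_dict(), quantized.state_dict())
sqnrs = {}
for name, pair in wt.items():
    fw, qw = pair["float"], pair["quantized"]
    if torch.is_tensor(fw) and torch.is_tensor(qw):
        sqnrs[name] = sqnr_db(fw, qw.dequantize() if qw.is_quantized else qw)
for name, db in sqnrs.items():
    print(f"{name}: {db:.1f} dB")
```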

*5. Framework support and CPU backends (x86, Arm) (49:46)*

*6. Resources to learn more (50:52)*

This is gold, thank you!

@lorenzodemarinis2603 You are welcome!

Thank you very much!

@harshr1831 You are welcome!

MVP!

Awesome talk, thanks!

Too much to ask, but it would be nice if PyTorch had a tool to convert quantized tensor parameters to TensorRT calibration tables.

How do I test the model after quantization?

I am using post-training static quantization.

How should I prepare the input to feed into this model?

In the accuracy results, why is there a difference in inference speedup between QAT and PTQ? Is it because different models were used? I would expect no difference in speedup if the same model were used.

Awesome talk, thank you so much.

What if I want to fuse multiple conv and ReLU layers?

Sorry, can you share the example code? Thank you.

Please take a look at the pytorch tutorials page for example code: pytorch.org/tutorials/advanced/static_quantization_tutorial.html
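On the fusion question above, `fuse_modules` takes a list of groups, so several conv/ReLU pairs can be fused in one call. The following is a minimal sketch, assuming PyTorch 1.10+ (`torch.ao.quantization`). `TwoBlocks` is a hypothetical model, and submodules inside containers are addressed by dotted names such as `"block.0"`.

```python
import torch
import torch.nn as nn
from torch.ao.quantization import fuse_modules


class TwoBlocks(nn.Module):
    """Hypothetical model with two conv+relu pairs and one conv+bn+relu block."""

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 8, 3, padding=1)
        self.relu1 = nn.ReLU()
        self.conv2 = nn.Conv2d(8, 8, 3, padding=1)
        self.relu2 = nn.ReLU()
        self.block = nn.Sequential(nn.Conv2d(8, 8, 3, padding=1), nn.BatchNorm2d(8), nn.ReLU())

    def forward(self, x):
        x = self.relu1(self.conv1(x))
        x = self.relu2(self.conv2(x))
        return self.block(x)


model = TwoBlocks().eval()  # eval mode is required to fold BatchNorm into the conv
# One inner list per group to fuse; the fused-away modules become nn.Identity.
fused = fuse_modules(
    model,
    [["conv1", "relu1"], ["conv2", "relu2"], ["block.0", "block.1", "block.2"]],
)

x = torch.randn(1, 3, 16, 16)
print(type(fused.conv1).__name__, torch.allclose(model(x), fused(x), atol=1e-4))
```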

Why not go lower than 8-bit integers for quantization? Wouldn't that be much faster?

Currently, kernels on processors do not provide any speedup for lower bit precision.

Trade-off between accuracy and speed.

Great info, but please buy a pop filter.

Then I am the second.

And then I am the third

First view

Lol

OMG. We already have a term of art for "zero point": it's called bias. We have a term, please use it. Otherwise, thanks for the great talk.

It's called a zero_point because it is the integer that represents the real value 0.0 exactly. If an output is zero before quantization (e.g., after a ReLU activation), the quantized output still maps back to exactly zero. The names scale and zero_point also distinguish these quantization parameters from each module's weight and bias, which are different concepts.
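To make the distinction concrete, here is a pure-Python sketch of per-tensor affine (asymmetric) quantization, with no PyTorch dependency. It follows the usual min/max recipe, roughly what PyTorch's default min/max observers compute, though it is not their code. The function names are hypothetical. Unlike a layer's bias, which is added to the layer's output, the zero_point is an integer offset on the quantized grid. It is applied before scaling and chosen so that real 0.0 is represented exactly.

```python
def choose_qparams(x_min, x_max, qmin=0, qmax=255):
    """Pick (scale, zero_point) for asymmetric uint8 quantization of [x_min, x_max]."""
    # Widen the range to include 0.0 so that zero lands exactly on the integer grid.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range; any positive scale works
    # zero_point is the integer that represents real 0.0.
    zero_point = qmin - round(x_min / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(x, scale, zero_point, qmin=0, qmax=255):
    """Map a real value to the nearest grid point, clamped to [qmin, qmax]."""
    return max(qmin, min(qmax, round(x / scale) + zero_point))


def dequantize(q, scale, zero_point):
    """Map an integer back to a real value: subtract the zero_point, then scale."""
    return (q - zero_point) * scale


scale, zero_point = choose_qparams(-1.0, 3.0)
for x in (-1.0, 0.0, 0.5, 2.9):
    q = quantize(x, scale, zero_point)
    print(x, q, dequantize(q, scale, zero_point))
```

With the range [-1.0, 3.0], the zero_point works out to 64. So 0.0 quantizes to 64 and dequantizes back to exactly 0.0, while other in-range values are recovered to within half a step (`scale / 2`).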