Quantization vs Pruning vs Distillation: Optimizing NNs for Inference

Vložit

- čas přidán 29. 06. 2024

- Four techniques to optimize the speed of your model's inference process:

0:38 - Quantization

5:59 - Pruning

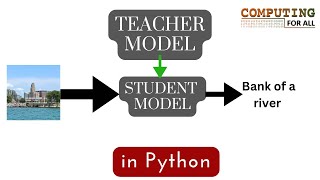

9:48 - Knowledge Distillation

13:00 - Engineering Optimizations

References:

LLM Inference Optimization blog post: lilianweng.github.io/posts/20...

How to deploy your deep learning project on a budget: luckytoilet.wordpress.com/202...

Efficient deep learning survey paper: arxiv.org/abs/2106.08962

SparseDNN: arxiv.org/abs/2101.07948 - Věda a technologie

This was one of the best explanation videos I have ever seen! Well structured and right complexity grade to follow without getting a headache. 👌

that was really nicely done. as a non-expert, I feel like I can now have a great general idea of what a quantized model is. thank you

Superb

Great format, succinctness, and diagrams. Thank you!

Excellent video. Well spoken. Nice visualizations.

wonderfully explained !!

Thanks for the video.

This felt very nicely taught -- I loved that you pulled back a summary/review at the end of the video - great practice. Please continue, thank you!

Great content, well done. Please make a video for ONNX, and another one for Flash Attention. Appreciate.

Fantastic introduction and explanation !

Great video

Awesome video!

What a great video! Thank you!

your teaches so excellent.. we accepted many more videos from your side to understand for the fundamental NLP

^

Great summary, thank you.

Thanks for this!

nice video

nicely done

Excellent video, learnt a lot! However, the definition of zero-point quantization is off. What you're showing in the video is the abs-max quantization instead.

The example I showed is zero-point quantization because 0 in the original domain is mapped to 0 in the quantized domain (before transforming to unsigned). In abs-max (not covered in this video), the maximum in the original domain would be mapped to 127, and the minimum would be mapped to -128.

And if one was to quantize a distilled model? Is the outcome any good?

Yes, these two techniques are often used together to improve efficiency.

The explanation for distillation remains at the surface, it is not enough to understand it

If you have any specific questions I’ll try to answer them!