A shallow grip on neural networks (What is the "universal approximation theorem"?)


- Added: 31 May 2024

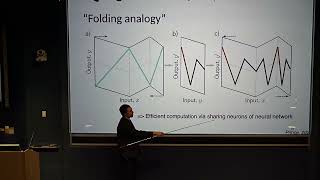

- The "universal approximation theorem" is a catch-all term for a bunch of theorems regarding the ability of the class of neural networks to approximate arbitrary continuous functions. How exactly (or approximately) can we go about doing so? Fortunately, the proof of one of the earliest versions of this theorem comes with an "algorithm" (more or less) for approximating a given continuous function to whatever precision you want.
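For reference, here is one version of the theorem, stated for the smooth-activation case. The video's argument uses a smooth activation; the cited paper covers a wider class of activations. The notation below is ours, not the video's:

```latex
\textbf{Theorem (smooth case).} Let $\sigma \in C^\infty(\mathbb{R})$ be an activation function
that is not a polynomial. Then for every compact $K \subset \mathbb{R}^n$, every continuous
$f\colon K \to \mathbb{R}$, and every $\varepsilon > 0$, there exist $N \in \mathbb{N}$,
$c_i, \theta_i \in \mathbb{R}$ and $w_i \in \mathbb{R}^n$ such that
\[
  \sup_{x \in K} \Bigl| f(x) - \sum_{i=1}^{N} c_i \, \sigma(w_i \cdot x + \theta_i) \Bigr| < \varepsilon .
\]
Conversely, if $\sigma$ is a polynomial of degree $d$, every such sum is a polynomial of
degree at most $d$, so the approximation fails in general.
```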

(I have never formally studied neural networks.... is it obvious? 👉👈)

The original manga:

[LLPS92] M. Leshno, V.Y. Lin, A. Pinkus, S. Schocken, 1993. Multilayer feedforward networks with a non-polynomial activation function can approximate any function. Neural Networks, 6(6):861--867.

________________

Timestamps:

00:00 - Intro (ABCs)

01:08 - What is a neural network?

02:37 - Universal Approximation Theorem

03:37 - Polynomial approximations

04:26 - Why neural networks?

05:00 - How to approximate a continuous function

05:55 - Step 1 - Monomials

07:07 - Step 2 - Polynomials

07:33 - Step 3 - Multivariable polynomials (buckle your britches)

09:35 - Step 4 - Multivariable continuous functions

09:47 - Step 5 - Vector-valued continuous functions

10:20 - Thx 4 watching
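Steps 1 and 2 (monomials, then polynomials) can be sketched in code. This is a minimal sketch under stated assumptions, not the video's exact construction. It assumes a smooth activation (the logistic sigmoid here) and relies on the chain-rule fact that the k-th derivative of σ(w·x + θ) with respect to the weight w, taken at w = 0, equals x^k σ^(k)(θ). A central k-th finite difference in w turns that derivative into a combination of k + 1 neurons σ(w_j x + θ). Dividing by the same combination evaluated at x = 1 (approximately σ^(k)(θ), assumed nonzero for the chosen θ = 0.5) leaves an approximation of x^k. The helper names `monomial_network`, `polynomial_network`, `evaluate` and the defaults h = 1e-2, θ = 0.5 are hypothetical, chosen only for illustration.

```python
import math
from typing import Callable, List, Tuple

# A shallow network stored as parallel lists: hidden weights w_j, biases b_j and
# output coefficients c_j, representing x -> sum_j c_j * sigma(w_j * x + b_j).
Network = Tuple[List[float], List[float], List[float]]


def sigmoid(z: float) -> float:
    """Logistic activation (assumed choice; any smooth non-polynomial sigma works)."""
    return 1.0 / (1.0 + math.exp(-z))


def evaluate(net: Network, x: float, sigma: Callable[[float], float] = sigmoid) -> float:
    """Output of the shallow network at the scalar input x."""
    weights, biases, coeffs = net
    return sum(c * sigma(w * x + b) for w, b, c in zip(weights, biases, coeffs))


def monomial_network(k: int, h: float = 1e-2, theta: float = 0.5,
                     sigma: Callable[[float], float] = sigmoid) -> Network:
    """Step 1 sketch (hypothetical helper): k + 1 neurons approximating x**k.

    The central k-th difference in w of sigma(w*x + theta) at w = 0, divided by
    h**k, approximates d^k/dw^k sigma(w*x + theta) = x**k * sigma^(k)(theta).
    """
    weights = [(k / 2 - j) * h for j in range(k + 1)]
    raw = [(-1) ** j * math.comb(k, j) / h ** k for j in range(k + 1)]
    biases = [theta] * (k + 1)
    # The same combination evaluated at x = 1 approximates sigma^(k)(theta),
    # which is assumed to be nonzero for this theta.
    scale = evaluate((weights, biases, raw), 1.0, sigma)
    return weights, biases, [c / scale for c in raw]


def polynomial_network(poly: List[float], **kwargs) -> Network:
    """Step 2 sketch (hypothetical helper): a_0 + a_1 x + ... + a_n x**n as one network."""
    weights: List[float] = []
    biases: List[float] = []
    coeffs: List[float] = []
    for k, a in enumerate(poly):
        w, b, c = monomial_network(k, **kwargs)
        weights += w
        biases += b
        coeffs += [a * ci for ci in c]  # scale the output weights by a_k
    return weights, biases, coeffs


# Usage: approximate p(x) = 1 - 2x + 3x^3 on [-1, 1] with 1 + 2 + 3 + 4 = 10 neurons.
net = polynomial_network([1.0, -2.0, 0.0, 3.0])
grid = [-1 + 2 * i / 200 for i in range(201)]
max_err = max(abs(evaluate(net, x) - (1 - 2 * x + 3 * x ** 3)) for x in grid)
print(f"max |network - p| on [-1, 1]: {max_err:.2e}")
```

The step h cannot be made arbitrarily small in floating point, because the k-th difference divides by h^k and suffers from cancellation; in exact arithmetic, shrinking h drives the error on a bounded interval to zero.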


It’s t-22 hours until my econometrics final, I have been studying my ass off, I’m tired, I have no idea what this video is even talking about, I’m hungry and a little scared.

Did you pass? (Did the universal approx thm help?)

@SheafificationOfG using this theorem he could create a neural network that approximates test answers to an arbitrarily good degree, thus getting an A-.

Came for the universal approximation theorem, stayed for the humor (after the first pump up I didn't understand a word). Great video!

5:07 phew, this channel is gold. Basic enough that I understand what's going on as an applied ML engineer, and smart enough that I feel like I would learn something. Subscribed.

super underrated channel

As someone who is really interested in pure maths, I think that YouTube should really have more videos like these, keep it up!

Happy to do my part 🫡

I have no idea what I just watched

Did not expect to see a Jim's Big Ego reference here

Re 6:20: The secant line approximation converges at least pointwise. But for the theorem we want uniform (sup-norm) convergence, and I don't see why that holds for the secant approximation.

Good catch!

The secret sauce here is that we're using a smooth activation function, and we're only approximating the function over a closed interval.

For a smooth function f(x), the absolute difference between df/dx at x and a secant line approximation (of width h) is bounded by M*h/2, where M is a bound on the absolute value of the second derivative of f(x) between x and x+h [this falls out of the Lagrange form of the error in Taylor's Theorem]. If x is restricted to a closed interval, we can choose the absolute bound M of the second derivative to be independent of x (and h, if h is bounded), and this gives us a uniform bound on convergence of the secant lines.
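The bound in this reply can be checked numerically. This is a minimal sketch that assumes tanh as the smooth activation and [-3, 3] as the closed interval (both illustrative choices, not taken from the video). For every x, the secant error |(f(x+h) - f(x))/h - f'(x)| stays below M*h/2, where M = 4/(3√3) is the maximum of |tanh''| over the whole real line. Because the same M works for every x, the convergence is uniform. `secant_sup_error` is a hypothetical helper that samples a grid, so it only illustrates the bound; the Lagrange remainder argument is what proves it.

```python
import math
from typing import Callable


def secant_sup_error(f: Callable[[float], float], df: Callable[[float], float],
                     a: float, b: float, h: float, n: int = 2001) -> float:
    """Largest |(f(x+h) - f(x))/h - f'(x)| over n evenly spaced points of [a, b]."""
    xs = [a + (b - a) * i / (n - 1) for i in range(n)]
    return max(abs((f(x + h) - f(x)) / h - df(x)) for x in xs)


# Illustrative smooth activation: tanh, whose derivative is 1 - tanh(x)^2.
f = math.tanh


def df(x: float) -> float:
    return 1.0 - math.tanh(x) ** 2


# |tanh''(x)| = |2 tanh(x) (1 - tanh(x)^2)| is at most 4 / (3 sqrt(3)),
# attained where tanh(x) = 1/sqrt(3); this M does not depend on x or h.
M = 4.0 / (3.0 * math.sqrt(3.0))

for h in (0.1, 0.01, 0.001):
    err = secant_sup_error(f, df, -3.0, 3.0, h)
    print(f"h = {h:<6} sup error = {err:.3e}   bound M*h/2 = {M * h / 2:.3e}")
```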

I don't think I'm part of the target group for this video ( i have no idea what the fuck you are talking about) but it was still entertaining and allowed me to feel smart whenever I was able to make sense of anything ( I know what f(x) means) so have a like and a comment, and good luck with your future math endeavors!!

Haha thanks, I really appreciate the support! The fact that you watched it makes you part of the target group.

Exposure therapy is a pretty powerful secret ingredient in math.

@6:22 missed opportunity for the canonical recall from gradeschool joke

😔

This is by far the math channel with the best jokes. Sadly, I don't know any Chinese, so I couldn't figure out who 丩的層化 is. Best any translator would give me was "Stratification of ???"...

Haha, thanks!

層化 can mean "sheafification"

and ㄐ is zhuyin for the sound "ji"

I suppose I can show the last equality of 9:04 using induction on monomial operators?

Yep! Linearity of differentiation allows you to assume q is just a k-th order mixed partial derivative, and then you can proceed by induction on k.
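The equality shown at 9:04 is not reproduced in the text. Assuming it is the identity $q(\partial_w)\,\sigma(w\cdot x+\theta)\big|_{w=0} = q(x)\,\sigma^{(k)}(\theta)$ for a homogeneous polynomial $q$ of degree $k$ (our reading of the hint in this reply, not a transcription of the video), the induction could go like this:

```latex
% Claim: for every multi-index \alpha with |\alpha| = k,
%   \partial_w^{\alpha} \sigma(w \cdot x + \theta) = x^{\alpha} \sigma^{(k)}(w \cdot x + \theta).
% Base case k = 0: both sides equal \sigma(w \cdot x + \theta).
% Inductive step (chain rule, any index j, using \partial_{w_j}(w \cdot x + \theta) = x_j):
\begin{align*}
\partial_{w_j}\bigl[x^{\alpha}\, \sigma^{(k)}(w \cdot x + \theta)\bigr]
  &= x^{\alpha}\, \sigma^{(k+1)}(w \cdot x + \theta)\, x_j
   = x^{\alpha + e_j}\, \sigma^{(k+1)}(w \cdot x + \theta).
\end{align*}
% Linearity: write q(\partial_w) = \sum_{|\alpha| = k} a_\alpha \partial_w^{\alpha} and set w = 0:
\begin{align*}
q(\partial_w)\, \sigma(w \cdot x + \theta)\big|_{w=0}
  = \sum_{|\alpha| = k} a_\alpha\, x^{\alpha}\, \sigma^{(k)}(\theta)
  = q(x)\, \sigma^{(k)}(\theta).
\end{align*}
```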

Great content

Can you share the pdf of the notes you show in the video?

If you're talking about the source of the proof I presented, the paper is in the description:

M. Leshno, V.Y. Lin, A. Pinkus, S. Schocken, 1993. Multilayer feedforward networks with a non-polynomial activation function can approximate any function. Neural Networks, 6(6):861--867.

If you're talking about the rest, I actually just generated LaTeX images containing specifically what I presented; they didn't come from a complete document.

I might *write* such documents down the road for my videos, but that's heavily dependent on disposable time I have, and general demand.

@SheafificationOfG Then demand there is. I love well-written explanations to read, not see.

@korigamik I'll keep debating writing up supplementary material for my videos (though I don't want to make promises). In the meantime, though, I highly recommend reading the reference I cited: it's quite well-written (and, of course, the argument is more complete).

Any videos coming about Kolmogorov Arnold networks?

I didn't know about those prior to reading your comment, but they look really interesting! Might be able to put something together in the future; stay tuned.

Okay, but does the manga have good applications? Does it train faster or something?😊

(Please help me, I like mathing but the world is corrupting me with its engineering)

The manga is an enjoyable read (though it's an old paper), but it doesn't say anything about how well neural networks train; it's only concerned with the capacity of shallow neural networks in approximating continuous functions (that we already "know" and aren't trying to "learn"). In particular, it says nothing about training a neural network with tunable parameters (and fixed size).

(I feel your pain, though; that's what brought me to make a channel!)

@SheafificationOfG Fair enough. I'll still give it a read.

Also, thanks for the content; it felt like fresh air watching high level maths with comedy; I shall therefore use the successor function on your sub count.

your linguistic articulation is extremely specific and 🤌🤌🤌

banger vid

thanks fam