Posterior Predictive Distribution - Proper Bayesian Treatment!

Vložit

- čas přidán 19. 06. 2021

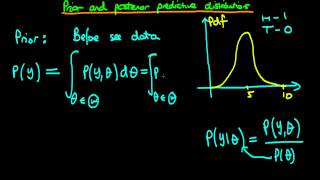

- This is part 5 of this series in which I explain one of the most important constructs and targets of Bayesian modeling and inference - the Posterior Predictive Distribution.

The tutorial provides both the intuition as well as shows its formulation step by step.

The content and example used are based and inspired from chapter 1 of Dr. Bishop's book.

Colab notebook that has the example used in the tutorial

colab.research.google.com/dri...

PRML - (available for free, thanks to Dr. Bishop) - www.microsoft.com/en-us/resea... - Věda a technologie

This series has a wealth of knowledge on Bayesian stats/regression. It's worth spending time wrapping your head around it.

🙏

Thanks. This helps a lot. please keep doing such great tutorials.

🙏

This series is one of the best explanations I have seen on Bayesian regression. Thank you!

🙏

Wonderful explanation 🙏🙏🙏🙏

🙏

Thank you so much Kapil! That was very helpful!

🙏

This is a gem among gems. I finally understand the motivation behind bayesian inference. Thank you so much for these amazing tutorials! I am really grateful for to you for sharing your expertise in this field!

🙏

Unbelievable how clear this is. I wish the book would just paraphrase _exactly_ what you explain here. Why do books have to be formal and obfuscating? Why not just say what you say here?

🙏 Thanks for the kind words

This lecture is amazing! A quick question around 19:50 when looking to use the product and sum rules to expand p(t',W|x,X,t) I expanded it as p(W|t'x,X,t)p(t'|x,X,t). The intuition behind this is as follows: assume p(t',W) this expands to p(W|t')p(t') now applying the condition over x,X,t it becomes p(W|t',x,X,t)p(t'|x,X,t). Intuitively I understand that W is independent of t' and x so those two are removed which makes it p(W|X,t) and similarly t' depends on W,x and independent of the dataset hence they are removed resulting in p(t'|W,x). But I just wanted to confirm is this intuition right especially where I am writing p(t'|x,X,t) as p(t'|x,W). Like just introducing W as a condition over t' based on intuition is that the correct reasoning or am I missing something.

If you think in terms of first expanding p(t’,W) to p(W)p(t’|W) instead of p(W|t’)p(t’) you will see that the rest of ur conditioning and adjustments will make more sense.

Here is how I generally think about expanding joint probs.

Given two events - A and B

The joint prob will be p(A,B)

According to product rule the joint prob could expand to either p(A)p(B|A) or p(B)p(A|B).

Now we read these as follows … for the first possibility ie p(A)p(B|A) we say I have the prob of A and since A has already occurred I must take that as a condition of B for the second term.

Now when we apply this kind of thinking on joint prob of p(t’, W) … we could argue that W has occurred first or was determined first before the new data t’ and hence the conditional prob should be for t’

Now you can apply your conditions over x,X,t and you then do not have to make an argument that W is independent of t’.

(Writing this comment on phone, hopefully not making mistakes with notations ;) )

@@KapilSachdeva ahh! I see this makes sense. Thank you so much! After a lot of effort managed to finish the first chapter. Heading to the next one!

🙏 I really admire that u want to understand all the details. Don’t dishearten if in a moment things appear to be hard; keep at it and this all will definitely pay up later. Good luck!

@@KapilSachdeva Thank you sir!

Thank you, this series is really amazing especially with the intuitive explanations. I just wanted to clarify something at 18:51. When you refer to posterior probability, it means the likelihood distribution right? As the posterior is weighted by the likelihood distribution to give the posterior predictive distribution.

The weighing is done with the help of posterior distribution. The second term in the integral (expectation) is the distribution whose values you use to weigh. When I say "posterior probability" it means that probability of w sampled from posterior distribution.

In the expectation formulation, the first term is function of random variable. The second term is the probability distribution from which we get a sample/instance of the random variable. This sample/instance is passed as a parameter in the function of random variable (i.e. first term) and we also obtain the probability of this sample. We then multiply the output of function of RV and the probability of the sample.

If still some confusion, please watch first few minutes of my tutorial on importance sampling (czcams.com/video/ivBtpzHcvpg/video.html) in which I explain the expectation formulation conventions in bit more detail.

Hope this helps.

@@KapilSachdeva Thank you for the explanation, that was clear :)

🙏

Thank you very much. Excellent presentation of the material. Very simple, with clear images that are simple to remember.

I have a question at 19:57, when it says:

p(t,w'|x,x',t') = p(t|x,w',x',t')p(w'|x',t')

However, if I break down the probability distribution p(t,w'|x,x't'), I get:

p(t,w'|x,x',t') = p(t|x,w',x',t')p(w'|x,x',t')

Should p(w'|x',t') and p(w'|x,x',t') be equal?

I am trying to relate what you said at 18:47 ..in this way.. Lets say we have theree independent variables X1,X2,X3 and one dependent variable Y..so we will have three different weight parameters(w1,w2,w3) corresponding to X1,x2,x3. Each of the weight parameters will have its own distribution. Now for a new observation i, in order to find the Y for the ith observation...we will take each value of w1, w2, w3 and pass it to likelihood function..one at a time and then repeat this process until we pass all the possible values of w1, w2, w3 to the likelihood function...finally we will add everything to arrive at the posterior predictive distribution...is my understanding correct? Also will the likelihood function always be the sum of squared error function?

Your understanding is correct. Good job!

Just to make sure, if right hand side of the equation is an definite integral from -inf to inf I would get expected value (single point estimate) for the predicted value but if It's indefinite integral I would get a PDF of t?

Sorry not sure if I understand your question. Could you please rephrase/elaborate?

@@KapilSachdeva my question is what exactly is posterior predictive, is it a point estimate or probability density function? because when you talk about it as marginalization, integral is indefine and when you talk about it from expected value perspective it's definite integral (deducing from this R subscript)

Posterior predictive is a distribution.

In Bayesian philosophy, we do not work with 1 statistic (mean, mode etc). You construct this posterior prediction distribution and then use the samples (with their respective probability) from it to get the expected value.

@@KapilSachdeva thank you for answering

Do you have a video showing the derivation of the equation (1.69) from (1.68) in 22:34

I do not have video for that but you might be able to find the steps on internet somewhere. I have vague collection that I have seen the simplification of two normal pdfs somewhere.

Can you wait few days? I will try to see if I can find it over the weekend.

@@KapilSachdeva sure no problem!

Where can I see the previous parts 1to 4? thank you

czcams.com/play/PLivJwLo9VCUISiuiRsbm5xalMbIwOHOOn.html