Tutorial 13- Global Minima and Local Minima in Depth Understanding


- Added 28 July 2019

- In mathematical analysis, the maxima and minima (the respective plurals of maximum and minimum) of a function, known collectively as extrema (the plural of extremum), are the largest and smallest values of the function, either within a given range (the local or relative extrema) or on the entire domain of the function (the global or absolute extrema). Pierre de Fermat was one of the first mathematicians to propose a general technique, adequality, for finding the maxima and minima of functions.
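To make the local/global distinction concrete, here is a minimal sketch that scans a function on a grid and reports every local minimum plus the single lowest point. The function `f`, the search range and the grid size are assumptions chosen for illustration; they are not taken from the video.

```python
def f(x):
    # Hypothetical example function with two valleys of different depth.
    return x**4 - 3 * x**2 + x


def find_minima(func, lo=-2.5, hi=2.5, n=5001):
    """Return (local_minima_xs, global_minimum_x) found on an evenly spaced grid."""
    step = (hi - lo) / (n - 1)
    xs = [lo + i * step for i in range(n)]
    ys = [func(x) for x in xs]
    # An interior grid point is a local minimum if it is lower than both neighbours.
    local_xs = [
        xs[i] for i in range(1, n - 1)
        if ys[i] < ys[i - 1] and ys[i] < ys[i + 1]
    ]
    # The global minimum (on this range) is simply the lowest grid value.
    global_x = xs[ys.index(min(ys))]
    return local_xs, global_x


local_xs, global_x = find_minima(f)
print("local minima near:", [round(x, 2) for x in local_xs])
print("global minimum near:", round(global_x, 2))
```

Both valleys are local minima, but only the deeper one is the global minimum. A grid scan like this only works for a one-dimensional toy function; it is not how neural network weights are searched.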

Below are the various playlists created on ML, Data Science and Deep Learning. Please subscribe and support the channel. Happy Learning!

Deep Learning Playlist: • Tutorial 1- Introducti...

Data Science Projects playlist: • Generative Adversarial...

NLP playlist: • Natural Language Proce...

Statistics Playlist: • Population vs Sample i...

Feature Engineering playlist: • Feature Engineering in...

Computer Vision playlist: • OpenCV Installation | ...

Data Science Interview Question playlist: • Complete Life Cycle of...

You can buy my book on Finance with Machine Learning and Deep Learning from the URL below

amazon url: www.amazon.in/Hands-Python-Fi...

🙏🙏🙏🙏🙏🙏🙏🙏

YOU JUST NEED TO DO

3 THINGS to support my channel

LIKE

SHARE

&

SUBSCRIBE

TO MY YouTube CHANNEL

I am self-studying machine learning. Your videos are really amazing for getting the full overview quickly, and even a layman can understand them.

Stopped this video halfway through to say thank you! Your grasp on the topic is outstanding and your way of demonstration is impeccable. Now resuming the video!

Your videos are like a suspense movie. I need to watch another, and need to keep going until the end of the playlist... so much time to spend to know the final result.

You are making people fall in love with deep learning.

Krish bhaiya, you are just awesome. Thanks for all that you are doing for us.

You are a blessing for new students, sir. God's gift to us students.

You are awesome... One of the gems in this field who is making others' lives simpler.

amazing. simple, short & crisp

Very very amazing explanation thanks a lot!!!

Hi Krish, all your videos are very good. But please add some practical examples to these videos so we can understand how to implement the concepts in practice.

Yes, adding how to implement will make this series more helpful.

Hi Krish, please make a playlist of practical implementations of these theoretical concepts.

You are always awesome! Thanks Krish Naik

Hi Krish. Thanks a lot for your videos. You made me fall in love with DL❤️ I took many introductory courses on Coursera and Udemy from which I couldn't understand all the concepts. Your videos are just amazing. One request: could you please make some practical implementations of the concepts so that it would be easier for us to apply them to practical problems?

Ultimate explanation, thanks Krish

Nice Explanation Krish Sir ...

Thank you, Krish sir. Good explanation.

Very impressive explanation. Now I have totally adapted to Indian English. So wonderful.

i like this video you explained this very well! thank you!

This is sooo easy to understand, sir. I'm sooo lucky to have found you here. Thanks a ton for these valuable lessons, sir. Keep shining.

Very nice explanation

Awesome...

Awesome!

Never Skip Calculus Class.

Nice explanation krish sir ..........

I don't think the derivative of the loss function with respect to the weights should be used for calculating the new weights, because when it equals zero the update gives W(new) = W(old). That would be related to the vanishing gradient problem. Isn't it the derivative of the loss function with respect to the output of the neural network that is used, where y actual and y hat become approximately equal and the weights are optimised iteratively? Please correct me if I'm wrong.

very nice

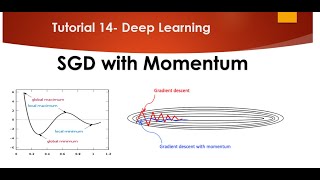

You mentioned in the previous video that you would talk about momentum in this video, but I have yet to hear it....

Hi Krish, very informative as always. Thank you so much. Can you please also do a tutorial on the Fokker-Planck equation? Thanks a lot in advance.

Hi Krish, that was also a great video in terms of understanding. Please make a playlist of practical implementations of these theoretical concepts. Then please provide the ipynb notebook for download just below, so that we can practice it in Jupyter Notebook.

At the global minimum, if the derivative of the loss function with respect to w becomes 0, then w_old = w_new and the value no longer changes. How can the loss function value then be reduced?

nice explanation Sir

Thanks Krish

What if I have a decrease from 8 to infinity? Would the lowest visible point still be my global minimum?

I think that at a local minimum "∂L/∂w" is not equal to 0, because the ANN output is not equal to the required output. If I am wrong, please correct me.

Hello Krish,

Thanks for the amazing work you are doing.

Quick one: you have talked about the derivative being zero when updating the weights... so how do you tell it's a global minimum and not the vanishing gradient problem?

You check the slope. Say you start where the slope is negative, which means the loss is still decreasing as the weight moves in that direction. If the slope then passes through zero and turns positive, you have found a minimum. With a vanishing gradient the slope only keeps shrinking without changing sign. Correct me @anyone if I'm wrong.
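The sign-change idea in this reply can be sketched in a few lines. This is an illustration only: `slope` is a numerical central-difference estimate, and the helper names, `delta` and the test functions are assumptions. It classifies a point where the slope is zero; it cannot tell a local minimum from the global one.

```python
def slope(f, w, h=1e-5):
    # Central-difference estimate of df/dw at w.
    return (f(w + h) - f(w - h)) / (2 * h)


def classify(f, w, delta=1e-2):
    """Classify a stationary point by the slope just left and right of it."""
    left, right = slope(f, w - delta), slope(f, w + delta)
    if left < 0 < right:
        return "minimum"   # slope goes from negative to positive
    if left > 0 > right:
        return "maximum"   # slope goes from positive to negative
    return "neither"       # no sign change, e.g. a flat inflection point


print(classify(lambda w: w**2, 0.0))    # bowl
print(classify(lambda w: -w**2, 0.0))   # hill
print(classify(lambda w: w**3, 0.0))    # flat point with no sign change
```

The same check also explains why gradient descent is not attracted to maxima: the update moves against the slope, so it walks away from a hill top and toward a valley.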

nice

Krish bro, when w new and w old are equal, that is the vanishing gradient problem, right??

No bro, vanishing gradient is a problem that occurs in the chain rule when we use sigmoid or tanh. To overcome that problem we use the ReLU activation function.

Hi Krish, when the slope is also zero at a local maximum, why don't we consider local/global maxima instead of minima?

Why do neurons need to converge at the global minimum?
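A rough sketch of why the chain rule causes this: the sigmoid derivative is never larger than 0.25, so multiplying one such factor per layer shrinks the gradient quickly. The example below is a simplification that ignores the weights (treated as 1) and assumes the same pre-activation value at every layer; the function names are made up for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    # Derivative of sigmoid: s * (1 - s), which peaks at 0.25 when z = 0.
    s = sigmoid(z)
    return s * (1.0 - s)


def relu_grad(z):
    # Derivative of ReLU: 1 for positive inputs, 0 otherwise.
    return 1.0 if z > 0 else 0.0


def chained_gradient(act_grad, pre_activations):
    """Multiply one activation derivative per layer, as the chain rule does."""
    g = 1.0
    for z in pre_activations:
        g *= act_grad(z)
    return g


zs = [0.5] * 10  # assumed pre-activation at each of 10 layers
sig = chained_gradient(sigmoid_grad, zs)
rel = chained_gradient(relu_grad, zs)
print("sigmoid chain:", sig)
print("relu chain:", rel)
```

With sigmoid the product collapses toward zero after ten layers, while with ReLU it stays at 1 for positive inputs. This is a different situation from the gradient being zero because the weights already sit at a minimum.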

Neurons don't. Weights converge to some values, and those values represent the point at which the loss function is at its minimum. Our goal here is to formulate some loss function and to find the weights or parameters that minimize it. If we don't minimize it, our model won't learn any input-output relationship. It won't know what to predict when given a set of inputs.

Also, I think when he said neurons converge at the end, he meant the parameters of a neuron, not the value of the neuron itself.

If at the global minimum w_new is equal to w_old, what is the point of reaching there?? Am I missing something?? @krish naik

After that point the slope increases or decreases.

The whole point is to reach the global minimum... Because at the global minimum you get W, and at that W you'll get the minimum loss.
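A small sketch may help with the w_new = w_old question raised in this thread. It runs the update w_new = w_old - lr * dL/dw on a made-up one-weight loss; the quadratic loss, learning rate and starting weight are assumptions for illustration, not values from the video.

```python
def loss(w):
    # Hypothetical loss with its minimum at w = 3.
    return (w - 3.0) ** 2


def grad(w):
    # dL/dw for the loss above.
    return 2.0 * (w - 3.0)


def gradient_descent(w, lr=0.1, steps=100):
    """Apply w_new = w_old - lr * dL/dw and record (w, loss, step size)."""
    history = []
    for _ in range(steps):
        w_new = w - lr * grad(w)
        history.append((w, loss(w), abs(w_new - w)))
        w = w_new
    return w, history


w_final, history = gradient_descent(w=0.0)
print("final weight:", w_final)
print("first step size:", history[0][2])
print("last step size:", history[-1][2])
```

The step size shrinks because the gradient shrinks as the weight approaches the minimum. By the time w_new is practically equal to w_old, the loss has already been reduced as far as it can go, so the weights no longer changing is the sign that training has converged.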

Why do we need to minimize the cost function in machine learning? What's the purpose of this? Yeah, I understand that there will be fewer errors etc., but I need to understand it from a fundamental perspective. Why don't we use the global maximum, for example?

You minimise the error of your prediction. A maximum is the point where the error function is highest.

How do we deal with local maxima? I am still not clear.

Look for simulated annealing... you will get your answer. There are definitely many other methods, but I know this one.
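For readers who want to see what this suggestion looks like, below is a minimal sketch of simulated annealing, which is usually described as a way to escape local minima when minimizing. The objective `f`, the cooling schedule and all parameter values are assumptions chosen for illustration; this is not a method shown in the video.

```python
import math
import random


def f(x):
    # Hypothetical objective with a shallow valley and a deeper one.
    return x**4 - 3 * x**2 + x


def simulated_annealing(func, x0, temp=2.0, cooling=0.995,
                        steps=5000, step_size=0.5, seed=0):
    """Minimize func, sometimes accepting worse points so the search can leave a valley."""
    rng = random.Random(seed)
    x, fx = x0, func(x0)
    best_x, best_fx = x, fx
    for _ in range(steps):
        cand = x + rng.uniform(-step_size, step_size)
        fc = func(cand)
        # Always accept improvements; accept worse moves with a probability
        # that falls as the temperature cools.
        if fc < fx or rng.random() < math.exp((fx - fc) / temp):
            x, fx = cand, fc
            if fx < best_fx:
                best_x, best_fx = x, fx
        temp *= cooling
    return best_x, best_fx


# Start inside the shallower valley on the right.
best_x, best_fx = simulated_annealing(f, x0=1.1)
print("best x found:", best_x, "with value:", best_fx)
```

Early on, the high temperature lets the search climb out of the valley it started in; as the temperature drops it behaves more and more like plain descent. The result depends on the random seed and the schedule, so there is no guarantee it lands in the global minimum.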