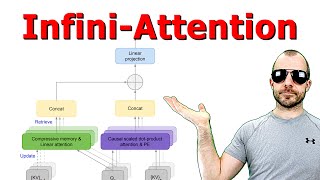

Google just Solved the Context Window Challenge for Language Models?

- Added 25 June 2024

- References:

►Read the full article: www.louisbouchard.ai/infini-a...

►Twitter: / whats_ai

►My Newsletter (My AI updates and news clearly explained): louisbouchard.substack.com/

►Support me on Patreon: / whatsai

►Join Our AI Discord: / discord

How to start in AI/ML - A Complete Guide:

►www.louisbouchard.ai/learnai/

Become a member of the CZcams community, support my work, and get a cool Discord role:

/ @whatsai

#ai #google #llm - Science & Technology

Crafting detailed prompts and knowing exactly how to guide the model to fulfill your specific needs is crucial. So far, I've found that managing prompts myself and disabling any preconfigured instructions gives the best results for my needs.

Basically, they have built a vector database into the LLM itself, allowing it to search on its own inside its own compressed "memory".

Very interesting. It sounds as if Google is taking a dense vector representation of a whole chat rather than of a single token, and compressing it with the encoder of an autoencoder trained on these long chat representations. This representation is then continuously updated and always passed as context. I've been working on a hierarchical chat history stored in documents processed by RAG, but it does seem to me that some sort of sparse representation is also needed. I was thinking about graphical representations, like the HTM (hierarchical temporal memory) that Jeff Hawkins says may exist in cortical columns, but this may be better. I still think the RAG idea is important, however. I've been using gemma:2b since it's very fast for multiple passes.
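The "built-in vector database" intuition can be made concrete with a toy compressive memory. This is a minimal sketch under my own assumptions, modeled on linear-attention-style associative memory (which, as I understand it, is what Infini-attention builds on) and not on Google's code: each key/value pair is folded into a fixed d_key × d_value matrix `M` plus a normalization vector `z`, and a query reads back a weighted mix of the stored values. The class and method names (`CompressiveMemory`, `write`, `read`) and the ELU+1 feature map are illustrative choices.

```python
import math


def elu_plus_one(x):
    # sigma(x) = ELU(x) + 1: a strictly positive feature map (assumed, linear-attention style)
    return x + 1.0 if x > 0 else math.exp(x)


class CompressiveMemory:
    """Hypothetical fixed-size associative memory: d_key x d_value matrix M plus normalizer z."""

    def __init__(self, d_key, d_value):
        self.M = [[0.0] * d_value for _ in range(d_key)]
        self.z = [0.0] * d_key  # running sum of sigma(key), used to normalize reads

    def write(self, key, value):
        # Fold one (key, value) pair into M as an outer product; M never grows
        sk = [elu_plus_one(x) for x in key]
        for i, s in enumerate(sk):
            self.z[i] += s
            for j, v in enumerate(value):
                self.M[i][j] += s * v

    def read(self, query):
        # Weighted mix of stored values, normalized by how strongly the query matches z
        sq = [elu_plus_one(x) for x in query]
        d_value = len(self.M[0])
        denom = sum(q * zi for q, zi in zip(sq, self.z))
        if denom == 0.0:  # nothing written yet
            return [0.0] * d_value
        return [sum(sq[i] * self.M[i][j] for i in range(len(sq))) / denom
                for j in range(d_value)]


# Usage: write many pairs; the state stays d_key x d_value
mem = CompressiveMemory(d_key=4, d_value=2)
for t in range(1000):
    mem.write([math.sin(t), math.cos(t), (0.1 * t) % 1.0, -0.5], [float(t % 2), 1.0])
print(len(mem.M), len(mem.M[0]))  # 4 2
print(mem.read([1.0, 0.0, 0.5, -0.5]))
```

However many pairs are written, the state stays d_key × d_value, which is the sense in which the memory is "compressed"; the price is that retrieval becomes a blurry average once many keys overlap, so this behaves more like a lossy summary than an exact database lookup.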

Great content!

Thank you Ahmod!

Can you do more of these where you talk about important papers?

Used to do only that! Yes more are coming when I feel like I can bring something about the paper that others didn’t :)

The original transformer already had an infinite window...

Like... sure, you trained a big context window, but the idea that it's infinite is weird.

So is Google trying to implement the main idea of LSTMs in the form of self-attention?

Looking forward to your reply.

I believe the idea is indeed very similar and obviously has the same underlying goal! I think the main difference is that the Infini-Transformer uses both fixed and variable memory: some parts are more compressed and simply stay there (older states), while other parts can change with each layer's attention process (recent states). In an LSTM, there's only the latter, with gates deciding what content to carry forward. So I would assume LSTMs lack full context for very, very long sequences (at least if you want them to stay computationally and memory viable). I'd guess we could say the main achievement here is compression and efficiency for many sequences :)

And of course there's attention, which changes everything here by combining current and past sequence tokens and information in a much better way!
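To make the "fixed vs. variable memory" point concrete: as I understand the Infini-attention paper, each head mixes the long-term memory readout with ordinary softmax attention over the current segment through a learned scalar gate, roughly A = sigmoid(β)·A_mem + (1 − sigmoid(β))·A_local. The sketch below is a hypothetical single-query illustration of that gating only; the function names are mine, and the memory readout `a_mem` is passed in as a plain vector rather than computed.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def local_attention(query, keys, values):
    # Standard scaled dot-product attention over the current segment only
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    return [sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))]


def gated_combine(a_mem, a_local, beta):
    # g = sigmoid(beta); in the assumed design beta is a learned scalar per head
    g = 1.0 / (1.0 + math.exp(-beta))
    return [g * m + (1.0 - g) * l for m, l in zip(a_mem, a_local)]


# Usage with made-up numbers; a_mem would come from a compressive-memory readout
a_local = local_attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, 2.0]])
print(gated_combine([0.5, 0.5], a_local, beta=0.0))
```

A large positive β makes a head lean on the long-term memory, and a large negative β makes it behave like a standard local-attention head. Because β is learned, training can in principle settle on heads that mostly look at recent tokens and heads that mostly consult the past.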

That makes sense, that's kinda how the human mind works too.

Interesting. What about the output token limit?

From what I understand, it reuses calculated states across output tokens and still generates tokens in a sequence-to-sequence manner, so in the end it is also more efficient for generating longer outputs. It doesn't seem to affect the number of output tokens, which I think still mainly depends on the compute budget. Unfortunately, I couldn't find clear info in the paper!
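The efficiency argument is mostly arithmetic. Here is a back-of-the-envelope sketch, assuming one attention head, counting stored floats only (no batch, layers, or other overhead), and using hypothetical sizes: a standard KV cache grows with every token, whereas a segment-plus-memory scheme keeps only the current segment's keys and values plus a fixed d_key × d_value matrix and a d_key normalization vector. The function names are illustrative.

```python
def kv_cache_floats(n_tokens, d_key, d_value):
    # Standard decoding caches every past key and value for this head
    return n_tokens * (d_key + d_value)


def segment_memory_floats(n_tokens, segment_len, d_key, d_value):
    # Keys/values of the current segment only, plus a fixed memory M and normalizer z
    local = min(n_tokens, segment_len) * (d_key + d_value)
    return local + d_key * d_value + d_key


for n in (2_048, 100_000, 1_000_000):
    print(n, kv_cache_floats(n, 128, 128), segment_memory_floats(n, 2_048, 128, 128))
```

With d_key = d_value = 128 and a 2,048-token segment (illustrative numbers, not taken from the paper), one million tokens need 256,000,000 floats in the KV cache versus 540,800 for the compressed variant, per head and per layer. The second number stays flat as the sequence grows, which is why longer generations get cheaper even though the number of output tokens is still bounded by compute.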

@WhatsAI thank you for the swift and accurate reply ^___^

Sounds like RMSprop + attention.

So it seems like a memoization of prior latents? I guess I can see doing that (in effect providing an ongoing summary of the current context window), but it does seem to me that it might make the models somewhat 'hidebound' in their thinking when dealing with very long windows.

As a summary gets passed along, more and more emphasis is placed on key words and concepts related to a specific context. For example, if we had been chatting for a long time about river banks and I suddenly mention that I need to go to the bank, a model with a deep-seated set of priors might mistake the meaning and believe I am talking about going to a river bank. A human can switch contexts very quickly, whereas models tend toward what could be described as 'target fixation'.

IDK. There's a lot to consider here, and a number of ways something like this can be approached. Certainly a lot of food for thought.

🖖😶‍🌫️👍

P.S. I still think backprop of transfer functions can be made computationally viable (I've seen it working); I just don't understand the math and some of the code (provided by ChatGPT [just regular, not 4]) well enough to publish and say 'Hey World! Look at this amazing seashell!' (Shoutout to Newton). Still sitting on the edge of when LLMs make me smarter than I actually am 😉

Those are indeed good concerns! I'd assume much more weight is assigned to local attention, and the model learns this during training from the text and how we communicate: it usually doesn't give too much weight to previous states, but sometimes it does. Assuming we have enough data, I guess the model gets a good « understanding » of when to look into the past memory and when not to, haha!

Also, it seems the past info is more and more compressed the farther away it is in the past to limit computation required, so it should not influence the results much if we talk a lot about rivers and switch to an actual bank. Would probably be the same if we talk about rivers for 2 sentences or 20 potentially(?). Could be wrong!

@WhatsAI It depends on how quickly the a priori latents are compressed. If older memories drop off as new ones come in, there's loss.

If newer memories 'overwrite' or overwhelm older memories, there's loss (or potentially corruption).
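The overwrite concern can be illustrated with a "delta rule" memory update. The Infini-attention paper describes, as I understand it, a delta-rule variant of its memory update that first retrieves what the memory already returns for a key and only writes the difference. The sketch below uses the simpler textbook form of the delta rule (unit-length keys, no normalization term), so it illustrates the idea rather than reproducing the paper's exact formula; the class, the `demo` helper, and the river/bank keys are all hypothetical.

```python
import math


def normalize(vec):
    # Unit-length keys keep the delta rule's "read, then correct" step well behaved
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


class AssociativeMemory:
    """Toy d_key x d_value memory; delta_rule=False means plain additive writes."""

    def __init__(self, d_key, d_value, delta_rule):
        self.M = [[0.0] * d_value for _ in range(d_key)]
        self.delta_rule = delta_rule

    def read(self, key):
        k = normalize(key)
        return [sum(k[i] * self.M[i][j] for i in range(len(k)))
                for j in range(len(self.M[0]))]

    def write(self, key, value):
        k = normalize(key)
        if self.delta_rule:
            # Store only the part of `value` the memory does not already return for this key
            current = self.read(key)
            update = [v - c for v, c in zip(value, current)]
        else:
            # Plain additive write: every repetition piles onto M
            update = list(value)
        for i in range(len(k)):
            for j in range(len(update)):
                self.M[i][j] += k[i] * update[j]


def demo(delta_rule):
    # Hypothetical scenario: "river bank" written 10 times, then one switch to "money bank"
    mem = AssociativeMemory(2, 2, delta_rule)
    k_river, v_river = [1.0, 0.0], [1.0, 0.0]
    k_money, v_money = [0.6, 0.8], [0.0, 1.0]  # overlapping (non-orthogonal) keys
    for _ in range(10):
        mem.write(k_river, v_river)
    mem.write(k_money, v_money)
    return mem.read(k_money), mem.read(k_river)


for rule in (False, True):
    recent, older = demo(rule)
    print("delta rule" if rule else "additive", recent, older)
```

In this toy run, the additive memory answers the "money" query with a readout dominated by the ten "river" writes, while the delta-rule memory returns the new value almost exactly. The cost is that the older "river" association is partly overwritten because the keys overlap, which is the kind of loss the comment describes: the delta rule stops repeated content from piling up, but it does not make the memory lossless.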

We've already seen some forms of attacks that slowly corrupt the model into bypassing earlier 'safety' instructions and gaining unrestricted access. Direct attention attacks can't be far away.

Is it just me, or does the world seem to be getting a tiny bit more Cyberpunk everyday?

I don't think he means a long text context that's vectorized. I think he means one that is vectorized and compressed through an encoder.

Daymn

Great content, but these sound effects are terribly disturbing.

Oh no. I might reduce them for more paper-like videos, then! Thank you for the feedback!

Can you follow this up with a review of the TransformerFAM method? (arXiv, 14/04/2024)

I haven’t seen this paper. I will have a look, thanks for sharing! :)

Start from 3:30. Don't thank me.

Haha. Would you prefer no introduction, with quick videos starting right at this point and focusing on what's new in the paper (without a « soft introduction »)?

I'm trying to introduce the topic for followers who enjoy learning about new papers but may work in other subfields of ML :)

Breath of fresh air on CZcams