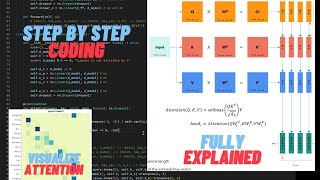

Coding Llama 2 from scratch in PyTorch - Part 3

Vložit

- čas přidán 16. 03. 2024

- In this video series, you will learn how to train and fine-tune Llama 2 model from scrach.

The goal is to code LLaMA 2 from scratch in PyTorch to create models with sizes 100M, 250M and 500M params. In this third video, you'll learn about KV cache, RoPE, and Hugginface Trainer in detail.

📋 KV cache:

- • The KV Cache: Memory U...

🪢 RoPE:

- • Rotary Positional Embe...

- nn.labml.ai/transformers/rope...

🤗 Trainer:

- huggingface.co/docs/transform...

Sebastian Raschka:

- Attention performance: x.com/rasbt/status/1765392800...

- Build a Large Language Model (From Scratch): www.manning.com/books/build-a...

💻 To follow along you can use this colab notebook:

- github.com/Blaizzy/Coding-LLM...

🎥 Coding Llama 2 from scratch video series

Part 1: czcams.com/users/liveXHmag4damTg

Part 2: czcams.com/users/liveLSWDpFmbE90 - Věda a technologie

First time watching your video. Keep going bro 💪, its your friend Afzal

Thank you very much brother! It's been long my friend :)

Really great job!

Thank you very much, Remek!

I’m happy you liked it :)

So in this series, you don't use any pre-trained weights? You build and train the model from scratch on a custom dataset?

@user-vd7im8gc2w

Why do you need position ids?

You use it to map the input ids to their respective position in the sequence.

Example:

input_ids = [100, 20, 4, 50]

position_ids = torch.arange(input_ids.shape…)

print(position_ids)

>> [0, 1, 2, 3]