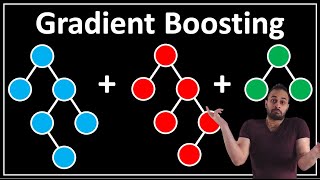

Gradient Boosting and XGBoost in Machine Learning: Easy Explanation for Data Science Interviews


- Added 21 July 2024

- Questions about Gradient Boosting frequently appear in data science interviews. In this video, I cover what the Gradient Boosting method and XGBoost are, teach you how I would describe the architecture of gradient boosting, and go over some common pros and cons associated with gradient-boosted trees.

🟢 Get all my free data science interview resources

www.emmading.com/resources

🟡 Product Case Interview Cheatsheet www.emmading.com/product-case...

🟠 Statistics Interview Cheatsheet www.emmading.com/statistics-i...

🟣 Behavioral Interview Cheatsheet www.emmading.com/behavioral-i...

🔵 Data Science Resume Checklist www.emmading.com/data-science...

✅ We work with Experienced Data Scientists to help them land their next dream jobs. Apply now: www.emmading.com/coaching

// Comment

Got any questions? Something to add?

Write a comment below to chat.

// Let's connect on LinkedIn:

/ emmading001

====================

Contents of this video:

====================

00:00 Introduction

01:01 Gradient Boosting

02:11 Gradient-boosted Trees

02:54 Algorithm

05:53 Hyperparameters

07:55 Pros and Cons

09:00 XGBoost

At 5:10, why is the MSE delta r_i equal to Y - F(X) instead of 2*(Y - F(X))? Or does the coefficient not matter?
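The coefficient does not matter in practice. If the loss is written as ½(Y - F(X))², its negative gradient is exactly Y - F(X); with (Y - F(X))² it is 2(Y - F(X)). Scaling the targets of a least-squares weak learner by 2 scales its fit by 2, and the step size found by line search then halves, so the actual update α·h(X) is unchanged. A minimal sketch of that argument, assuming a hypothetical fixed-threshold stump as the weak learner and made-up toy data:

```python
def fit_stump(xs, targets, threshold):
    """Hypothetical weak learner: a regression stump with a fixed split at
    `threshold`; each side predicts the mean target on that side."""
    left = [t for x, t in zip(xs, targets) if x <= threshold]
    right = [t for x, t in zip(xs, targets) if x > threshold]
    left_mean = sum(left) / len(left)
    right_mean = sum(right) / len(right)
    return lambda x: left_mean if x <= threshold else right_mean


def line_search_step(ys, preds, h_vals):
    """Closed-form alpha minimizing sum((y - (F + alpha * h))**2)."""
    num = sum((y - f) * h for y, f, h in zip(ys, preds, h_vals))
    den = sum(h * h for h in h_vals)
    return num / den


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.2, 1.9, 3.1, 3.9, 5.2, 5.8]
F0 = [sum(ys) / len(ys)] * len(ys)  # initial constant model

alphas, updates = {}, {}
for name, scale in [("half-MSE, grad = y - F", 1.0), ("MSE, grad = 2(y - F)", 2.0)]:
    # Pseudo-residuals: negative gradient of the chosen loss at F0.
    pseudo_residuals = [scale * (y - f) for y, f in zip(ys, F0)]
    h = fit_stump(xs, pseudo_residuals, threshold=3.5)
    h_vals = [h(x) for x in xs]
    alphas[name] = line_search_step(ys, F0, h_vals)
    updates[name] = [alphas[name] * v for v in h_vals]
    print(f"{name}: alpha = {alphas[name]:.3f}")

# The update alpha * h(x) is identical: the factor 2 has moved into alpha.
first, second = updates.values()
print(all(abs(u - v) < 1e-12 for u, v in zip(first, second)))
```

Here alpha comes out at 1.000 in the first case and 0.500 in the second, and the last line prints True.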

Beautifully written notes

There is a mistake in the representation of the algorithm. The equation for r_i, L(Y, F(X)), and grad r_i = Y - F(X) can't all hold at the same time. I think r_i = Y - F(X), and grad r_i should be something else (right?)

Hi! I have a question: how does the parallel tree building work? Gradient boosting needs the error of the previous model in order to build the next one, so I don't really understand in what way this is parallelized.

It's parallelized within the building of a single tree: it can work on multiple independent features in parallel to reduce computation time. For example, to find the root node it has to check the information gain of every independent feature and then decide which feature is best for the root split. Instead of calculating the information gain one feature at a time, it can calculate it for several features in parallel.
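To make that reply concrete: the boosting rounds stay sequential, but the split search inside one tree can be divided across features. This is a minimal sketch, assuming the reduction in squared error of the current residuals as the split gain (a simplification of XGBoost's gradient/hessian-based gain). It uses Python threads only to show the structure; because of the GIL it will not actually speed up pure-Python work, and XGBoost does this search in compiled code rather than like this. All function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def sse(values):
    """Sum of squared deviations from the mean."""
    if not values:
        return 0.0
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_split_for_feature(column, residuals):
    """Scan candidate thresholds on one feature; return (gain, threshold)."""
    parent = sse(residuals)
    best = (0.0, None)
    for t in sorted(set(column))[:-1]:  # splitting at the max leaves the right side empty
        left = [r for x, r in zip(column, residuals) if x <= t]
        right = [r for x, r in zip(column, residuals) if x > t]
        gain = parent - sse(left) - sse(right)
        if gain > best[0]:
            best = (gain, t)
    return best


def find_root_split(features, residuals, workers=4):
    """Evaluate every feature concurrently, then keep the best one.
    Only the search inside ONE tree is parallel; trees are still built
    one after another, because each needs the previous residuals."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda col: best_split_for_feature(col, residuals), features))
    best_idx = max(range(len(results)), key=lambda j: results[j][0])
    gain, threshold = results[best_idx]
    return best_idx, threshold, gain


# Each inner list is one feature column; rows line up with the residuals.
features = [
    [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],  # feature 0: tracks the residuals
    [5.0, 1.0, 4.0, 2.0, 6.0, 3.0],  # feature 1: mostly noise
]
residuals = [-2.0, -1.5, -1.8, 1.7, 2.1, 1.6]
print(find_root_split(features, residuals))
```

With this toy data, feature 0 split at 3.0 wins, since it separates the negative residuals from the positive ones.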

Thank you for the very informative video. It came up in my interview yesterday. I also got a question on time series forecasting and preventing data leakage. I think it would be great to have a video about it.

How do you add L1 regularization to a tree?
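A tree has no coefficients in the usual sense, so in XGBoost-style boosting the penalty is placed on the leaf weights, i.e. the values the leaves output. The sketch below assumes the second-order objective from the XGBoost formulation; `reg_lambda` and `reg_alpha` mirror the names of XGBoost's L2 and L1 parameters, but the helper functions themselves are hypothetical, not the library's code. The L1 term turns the optimal leaf weight into a soft-thresholded sum of gradients, so a large enough `reg_alpha` sets a leaf's output to exactly zero.

```python
def soft_threshold(g, alpha):
    """Shrink g toward zero by alpha; anything inside [-alpha, alpha] becomes 0."""
    if g > alpha:
        return g - alpha
    if g < -alpha:
        return g + alpha
    return 0.0


def leaf_weight(grads, hessians, reg_lambda=1.0, reg_alpha=0.0):
    """Leaf value minimizing the second-order loss approximation
    G*w + 0.5*(H + reg_lambda)*w**2 + reg_alpha*|w|,
    where G and H are the summed gradients and hessians in the leaf."""
    G = sum(grads)
    H = sum(hessians)
    return soft_threshold(-G, reg_alpha) / (H + reg_lambda)


# Squared-error loss 0.5 * (y - F)**2 has gradient F - y and hessian 1.
ys = [3.0, 2.5, 3.5]
F = [2.0, 2.0, 2.0]
grads = [f - y for f, y in zip(F, ys)]
hess = [1.0] * len(ys)

for a in [0.0, 1.0, 5.0]:
    # Larger reg_alpha shrinks the leaf value and can zero it out entirely.
    print(f"reg_alpha={a}: leaf weight = {leaf_weight(grads, hess, reg_lambda=1.0, reg_alpha=a)}")
```

Here the summed gradient is -3 and the summed hessian is 3, so the leaf weight drops from 0.75 to 0.5 to 0.0 as `reg_alpha` goes from 0 to 1 to 5.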

Hi Emma,

I'm struggling to understand how to build a model on residuals:

1) Do I predict the residuals and then get the MSE of the residuals? What would be the point/use of that?

2) Do I somehow re-run the model with some factor that accounts for more of the variability, e.g. adding more (important) features that reduce the MSE/residual? Then re-run the model, adding a new feature to account for the remaining residual until there is no more reduction in MSE/residual?

Ask ChatGPT every question you just typed, preferably GPT-4.

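It's important to understand what the residual is. The residual is a vector giving both the magnitude of the prediction error AND its direction; for squared-error loss it is (up to a constant factor) the negative gradient of the loss with respect to the prediction. Thus, regarding your questions: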

1) We predict the residual with a weak model, h, in order to know in which direction to move the prediction of the overall model F_i(X) so that the loss is reduced. We assume h makes a decent prediction, and thus we treat it like the (negative) gradient.

2) We then calculate alpha, the step size, in order to know HOW FAR to move in the direction that h provides, i.e. how much weight to give model h. Minimizing the loss function along that direction gives us this value and keeps us from overshooting or undershooting.
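Putting the two answers together: the features never change between rounds; only the targets do. Each round fits a weak model to the current residuals, picks a step size alpha by line search, and adds alpha * h to the running prediction, so the training MSE can only stay the same or go down. A minimal pure-Python sketch, assuming squared-error loss and one-feature regression stumps as the weak learner (both choices are for illustration only; the function names and data are hypothetical):

```python
def fit_stump(xs, targets):
    """Weak learner h: the single-threshold regression stump that best fits
    `targets` in squared error (each side predicts its mean)."""
    best = None
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


def gradient_boost(xs, ys, n_rounds=5):
    preds = [sum(ys) / len(ys)] * len(ys)  # F_0: a constant model
    history = [mse(ys, preds)]
    for _ in range(n_rounds):
        # 1) Residuals = negative gradient of 0.5 * (y - F)**2 at the current F.
        residuals = [y - p for y, p in zip(ys, preds)]
        # 2) Fit the weak model h to the residuals; the input features stay the same.
        h = fit_stump(xs, residuals)
        h_vals = [h(x) for x in xs]
        # 3) Line search: alpha minimizing sum((y - (F + alpha * h))**2).
        den = sum(v * v for v in h_vals)
        if den == 0:
            break
        alpha = sum(r * v for r, v in zip(residuals, h_vals)) / den
        # 4) Move F a step of size alpha in the direction h.
        preds = [p + alpha * v for p, v in zip(preds, h_vals)]
        history.append(mse(ys, preds))
    return preds, history


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.3, 2.9, 3.2, 3.1, 5.8, 6.1, 6.0]
_, history = gradient_boost(xs, ys)
print([round(m, 3) for m in history])  # training MSE before and after each round
```

With squared-error loss and a least-squares stump, the line search returns alpha = 1 (up to rounding), because the fitted stump already equals the least-squares projection of the residuals, so rescaling it cannot help. The line search matters more for other losses, and libraries typically also multiply each step by a learning rate below 1.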

Excellent video, many thanks.

Could you kindly make a video on time-to-event analysis with survival SVM, RSF, or XGBLC?

An excellent video

Is there a way to get the Notion notes?

I usually watch Emma's videos when I'm doing revision.

I am confused about the notation. h_i is a function that predicts r_i, and r_i is the gradient of the loss function w.r.t. the last prediction F(X), so h_i should be similar to r_i. Why is h_i similar to the gradient of r_i?

I believe there is an error in this video. r_i is the gradient of the loss function w.r.t. the CURRENT F(X), i.e. F_i(X). The NEXT weak model h_{i+1} is then trained to be able to predict r_i, the PREVIOUS residual. Alternatively, all of this could be written with i-1 instead of i, and i instead of i+1.

TLDR: Emma should have called the first step "compute residual r_{i-1}", not r_i. And in the gradient formula, she should have written r_{i-1}.
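One consistent way to index the steps discussed in these comments is the standard gradient-boosting formulation below, written with r_{i-1} as in the TLDR. It is a sketch of the usual notation, not necessarily the exact notation on the video's slides; j indexes training examples, and the residual is defined as the negative gradient:

```latex
\begin{aligned}
r_{i-1}^{(j)} &= -\left.\frac{\partial L\big(y^{(j)}, F\big)}{\partial F}\right|_{F = F_{i-1}(x^{(j)})} \\
h_i &= \arg\min_{h} \sum_j \big(r_{i-1}^{(j)} - h(x^{(j)})\big)^2 \\
\alpha_i &= \arg\min_{\alpha} \sum_j L\big(y^{(j)},\, F_{i-1}(x^{(j)}) + \alpha\, h_i(x^{(j)})\big) \\
F_i(x) &= F_{i-1}(x) + \alpha_i\, h_i(x)
\end{aligned}
```

With L = ½(y - F)², the first line reduces to r_{i-1} = y - F_{i-1}(x), the plain residual, so h_i is fitted to the residual itself, which is the negative gradient rather than the gradient of r.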

Really like the way you use Notion!

Thanks for the feedback, Leo! I tried out a bunch of different presentation methods before this one, so I'm glad to hear you're finding this platform useful! 😊

Many of you have asked me to share my presentation notes, and now… I have them for you! Download all the PDFs of my Notion pages at www.emmading.com/get-all-my-free-resources. Enjoy!

Thanks a lot. Can you please make a video on time series analysis? Thanks in advance!

Okay, subscribed!

Hello Miss, thank you for the knowledge. Miss, can I request the file for this presentation?

Just read out loud, no explanation at all.

Any chance to have slides?

Agreed. Hope to get those notes.

Yes! Download all the PDFs of my Notion pages at emmading.com/resources by navigating to the individual posts. Enjoy!

She just read the text with zero knowledge about the content. You're no good.