Zillow Data Analytics (RapidAPI) | End-To-End Python ETL Pipeline | Data Engineering Project |Part 1


- Added 11. 09. 2024

- This is part 1 of this Zillow data analytics end-to-end data engineering project.

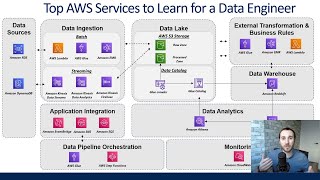

In this data engineering project, we will learn how to build and automate a Python ETL process, orchestrated with Apache Airflow, that extracts real estate property data from the Zillow Rapid API and loads it into an Amazon S3 bucket. That upload triggers a series of Lambda functions that transform the data, convert it to CSV format, and load it into another S3 bucket. Apache Airflow uses an S3KeySensor to check that the transformed data has been uploaded to the S3 bucket before attempting to load it into Amazon Redshift.
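To make the "convert to CSV" step concrete, here is a minimal sketch of turning a JSON list of property records into CSV text using only the standard library. It is not the Lambda code from the video: the function name `json_records_to_csv` and the field names `zpid`, `city`, and `price` are assumptions chosen for illustration, and the real fields depend on what the API returns.

```python
import csv
import io
import json


def json_records_to_csv(raw_json: str, columns: list[str]) -> str:
    """Convert a JSON array of records into CSV text.

    Only the requested columns are kept; missing values become empty cells.
    """
    records = json.loads(raw_json)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    for record in records:
        # Keep just the selected columns so extra fields are dropped.
        writer.writerow({col: record.get(col, "") for col in columns})
    return buffer.getvalue()


# Hypothetical sample payload; real field names depend on the API response.
sample = json.dumps([
    {"zpid": 1, "city": "Houston", "price": 250000, "extra": "dropped"},
    {"zpid": 2, "city": "Austin"},
])
csv_text = json_records_to_csv(sample, ["zpid", "city", "price"])
print(csv_text)
```

In a Lambda function, the input string would typically come from the object that triggered the function and the returned text would be written to the second bucket; those S3 calls are left out here on purpose.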

After the data is loaded into Amazon Redshift, we will connect Amazon QuickSight to the Redshift cluster to visualize the Zillow (Rapid API) data.

Apache Airflow is an open-source platform for orchestrating and scheduling workflows of tasks and data pipelines. This project will be carried out entirely on the AWS cloud platform.

In this video I will show you how to install Apache Airflow from scratch and schedule your ETL pipeline. I will also show you how to use a sensor in your ETL pipeline. In addition, I will show you how to set up an AWS Lambda function from scratch and how to set up Amazon Redshift and Amazon QuickSight.
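If the idea of a sensor is new, the sketch below shows the underlying pattern in plain Python: keep checking a condition at a fixed interval until it becomes true or a timeout passes. This is not Airflow's implementation. The helper `wait_for` is hypothetical, its parameter names `poke_interval` and `timeout` are borrowed from Airflow's sensor vocabulary, and `file_has_arrived` is a stand-in for "does the transformed file exist in the bucket yet?".

```python
import time
from typing import Callable


def wait_for(condition: Callable[[], bool], poke_interval: float, timeout: float) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poke_interval)


# Stand-in for an S3 existence check; it reports success on the third call.
checks = {"count": 0}


def file_has_arrived() -> bool:
    checks["count"] += 1
    return checks["count"] >= 3


arrived = wait_for(file_has_arrived, poke_interval=0.01, timeout=1.0)
print(arrived, checks["count"])
```

In the pipeline, the downstream load into Redshift would run only after the check succeeds, which is what keeps it from loading a file that is not there yet.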

As this is a hands-on project, I highly encourage you to first watch the video in its entirety without typing along so that you can better understand the concepts and the workflow. After that, either try to replicate the example on your own, consulting the video only when you are stuck, or watch the video a second time in its entirety while typing along.

Remember the best way to learn is by doing it yourself - Get your hands dirty!

If you have any questions or comments, please leave them in the comment section below.

Please don’t forget to LIKE, SHARE, COMMENT and SUBSCRIBE to our channel for more AWESOME videos.

**************** Commands used in this video ****************

sudo apt update

sudo apt install python3-pip

sudo apt install python3.10-venv

python3 -m venv endtoendyoutube_venv

source endtoendyoutube_venv/bin/activate

pip install --upgrade awscli

sudo pip install apache-airflow

airflow standalone

pip install apache-airflow-providers-amazon

*Books I recommend*

1. Grit: The Power of Passion and Perseverance amzn.to/3EZKSgb

2. Think and Grow Rich!: The Original Version, Restored and Revised: amzn.to/3Q2K68s

3. The Book on Rental Property Investing: How to Create Wealth With Intelligent Buy and Hold Real Estate Investing: amzn.to/3LLpXRy

4. How to Invest in Real Estate: The Ultimate Beginner's Guide to Getting Started: amzn.to/48RbuOb

5. Introducing Python: Modern Computing in Simple Packages amzn.to/3Q4driR

6. Python for Data Analysis: Data Wrangling with pandas, NumPy, and Jupyter 3rd Edition: amzn.to/3rGF73G

**************** USEFUL LINKS ****************

How to remotely SSH (connect) Visual Studio Code to AWS EC2: • How to remotely SSH (c...

Extract current weather data from Open Weather Map API using python on AWS EC2: • Extract current weathe...

How to send out email alert ON RETRY and ON FAILURE in Apache airflow | Airflow Tutorial • How to send out email ...

Monitor workflow with slack alert upon DAG failure | Airflow Tutorial • Monitor workflow with ...

How to build and automate a python ETL pipeline and slack alert with airflow | Airflow Tutorial • How to build and autom...

PostgreSQL Playlist: • Tutorial 1 - What is D...

Rapid API: rapidapi.com/hub

AWS Lambda function - Create your first Lambda Function | Lambda Function Tutorial for beginners • AWS Lambda function - ...

Github Repo: github.com/Yem...

airflow.apache...

airflow.apache...

airflow.apache...

airflow.apache...

Part 2: • Zillow Data Analytics ...

Part 3: • Zillow Data Analytics ...


DISCLAIMER: This video and its description contain affiliate links. This means that when you buy through one of these links, we receive a small commission at no cost to you. This helps support us so we can continue making awesome and valuable content for you.

#dataengineering #airflow

Awesome Tutorial

Your dedication to teaching end-to-end data engineering pipelines is truly inspiring. Your guidance has not only deepened my understanding of complex concepts but also empowered me to navigate the intricacies of building robust data pipelines. Thank you for your unwavering support and commitment to fostering knowledge in this dynamic field. Love from India 🚩

Being out of the office you learn all??

Thank you so much for your comment. It really means a lot to me.

BEST channel on youtube for learning about data engineering...thank you man

your content inspires me

Thanks so much for this comment. It really means a lot to me

thank you for the awesome tutorial!!! can't wait to start part 2.

just one correction. in the "commands used", please add "sudo apt install awscli" as well.

Thank you!

The best ETL video I have ever come across. Thank you sir ❤🔥❤🔥❤🔥💯💯

Glad you liked it!

This is just what I have been searching for, thank you good sir, please kindly post more videos, you are awesome

Thank you!

Bro, your explanation is really amazing. Nobody explain at that level. if possible can you start some videos on GCP cloud data engineering projects also. Thank you for great learning

Hey bro .... Did you complete this project on AWS , how much was the total cost or it was within the free tier limit

❤ good content

Awesome Brother, This is the Best channel i have ever seen in youtube to learn something real. Great work, Nobody can explain like you did, Thank you soo much, Lots of love for you. Keep doing this. Thanks a lott again.

Thanks so much for your comment. I really appreciate it, and it means a lot to me and motivates me to do more.

another good video from you. keep it going, keep it simple to the point and let it flow together end to end. just as you have been doing.

Thanks so much for your comment.

Amazing content! Thank you brother. Please do upload more such videos!!

Since I’m a mech engineer coding is almost like mandarin to me but u sir the Great explanation 🙏🏻🔥🫡🫡 really loved it n totally understood ❤❤

Glad to hear that.

Very explanatory!

Thank you!

Hi after airflow standalone i am getting error:

ModuleNotFoundError: No module named 'connexion.decorators.validation'

How do I fix this?

Amazing man..!

Thanks a lot!

This was awesome! Thank you for this resource.

Glad it was helpful!

We can also use a .env file to keep the API key out of the code and load it with an env loader.
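A minimal sketch of that suggestion, using only the standard library. Note that a .env file keeps the key out of the source code but does not encrypt it. The helpers `parse_env_lines` and `get_api_key` and the variable name `RAPIDAPI_KEY` are assumptions made for illustration, not names from the video or from any particular loader library.

```python
import os


def parse_env_lines(lines):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Strip optional surrounding quotes from the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def get_api_key(env_values, name="RAPIDAPI_KEY"):
    """Prefer a real environment variable, then fall back to parsed .env values."""
    key = os.environ.get(name) or env_values.get(name)
    if not key:
        raise KeyError(f"{name} is not set")
    return key


# Hypothetical .env content; in practice these lines would be read from the file.
env_values = parse_env_lines(["# local secrets", 'RAPIDAPI_KEY="example-key"', ""])
print(sorted(env_values))
```

The .env file itself should stay out of version control, otherwise the key ends up in the repository anyway.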

My IP address refuses to connect after I opened port 8080. It showed the Airflow login page, and after I entered my credentials it showed a "refused to connect" screen.

Very helpful! Thank you so much :)

Thank you so much. I'm glad you find it helpful.

At 19 seconds, already liked and subscribed.

Awesome. Thanks so much. And thanks for finding our video valuable.

Your content is very useful!

Thanks so much. Your comment means a lot to us and I'm glad that you find our contents useful and valuable.

Great and explicative video guys!! Amazing!!!

Thanks so much! I'm glad you like it.

which app did you use to create the data pipeline visualization?

Thank you for the wonderful tutorial! It's been incredibly helpful, and I've already subscribed to your YouTube channel!

I have a question about the necessity of using EC2 in this project. Would it be possible to achieve the same results by simply installing Apache Airflow locally within a Python virtual environment? I followed your steps closely, but when I run a DAG with tasks to extract Zillow data via the Rapid API, the DAG seems to get stuck in the running state indefinitely without completing, and it doesn't generate any logs.

Interestingly, when I test the Rapid API locally in a plain Python file, it works perfectly fine. Additionally, when I create a DAG without making requests to the API, it also works without any issues. The problem only arises when the DAG task attempts to access Zillow data via the Rapid API.

I'm curious if this is why EC2 is used in the project. Any insights you could provide would be greatly appreciated! Thanks again for putting out great Data Engineering content!!

The pip install --upgrade awscli command is not running in the virtual environment.

I am getting an error while executing airflow standalone:

TypeError: SqlAlchemySessionInterface.__init__() missing 6 required positional arguments: 'sequence', 'schema', 'bind_key', 'use_signer', 'permanent', and 'sid_length'

GOD BLESS YOU!❤

Amen! Thank you!

Hey, let's say I do this end-to-end project myself. How much will it cost me to use their services? Can I do this in the free tier, plus the additional cost I may incur for using an EC2 instance that is not free, like you mentioned?

I just wonder why this data pipeline uses Lambda for loading and transforming data instead of Glue Spark jobs?

Excellent ...

Thank you!

Can you cover the Azure platform?

Hi sir, the Airflow option is not visible in the VS Code interface even after installing it on the Ubuntu instance.

Hi, I'm facing the same issue. Were you able to resolve it?

Thanks for this great tutorial! Question: Is it possible to use Cloud9 as our IDE and access our EC2 instance from there, or vice versa?

I believe you should be able to use it, although I have not used it for an Airflow project before. You will have to provision a Cloud9 IDE, and you will have to pay for it unless there is a free tier that you can use.

Hi,

The project is really good, got to learn so much.

I have an error while I am trying to transfer my file from ec2 to s3 bucket.

File "/usr/local/lib/python3.10/dist-packages/airflow/operators/bash.py", line 210, in execute

raise AirflowException(

airflow.exceptions.AirflowException: Bash command failed. The command returned a non-zero exit code 127.

I have checked my bash code, it is perfectly fine. My first dag, python operator is running and creating the file but when it comes to bash operator task, it is failing.

this happened with me too..what is the solution?

@pranalidarekar_5852 I had a spelling mistake in my code, that's the reason why it was not running.

where exactly did you make the mistake

@darshan9340 I have the same error. Can you tell me where you went wrong?
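For anyone hitting the same failure: exit code 127 is the shell's "command not found" status, which fits the misspelling reported in this thread. A quick way to narrow it down is to check whether each executable named in the bash command can be found on the PATH of the user that runs Airflow. The helper `missing_commands` below is a hypothetical sketch, and `aws` is only an assumed example of a command the task might call.

```python
import shutil


def missing_commands(names):
    """Return the executable names that cannot be found on the current PATH."""
    return [name for name in names if shutil.which(name) is None]


# `aws` is an assumed example; list whatever your bash command actually invokes.
print(missing_commands(["aws", "definitely-not-a-real-command"]))
```

If a command shows up as missing here but works in your own terminal, compare the PATH and the active virtual environment of the two sessions, and double-check the spelling of the command in the DAG.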

Make one ETL project with Apache airflow without using any cloud

Why though? In your job, you'll be expected to work with the cloud.

@akj3344 Yes, but the cloud is expensive, so to understand the technology I think it's good to do it on a local machine first, and then, once you know the tech, experiment with the cloud.

@abduljaweed8131 Let me take you through an overview of a project that you can do without using the cloud:

Start by working with a CSV file. Upload that file to an S3 bucket, load the data from that bucket, transform it to Parquet format, and write it to another S3 bucket. After that, you can use Airflow to orchestrate the tasks.

Now, instead of using S3, you can use MinIO. It's an open-source tool that exposes an S3-compatible API. In fact, the Airflow operators for S3 can be used with MinIO as well.

You can use a pandas DataFrame to do the transformation to Parquet and write the file to the MinIO bucket. If you want to get a bit fancier, you can use Spark to do the same thing (it also works with DataFrames).

After working with a file, you can easily change the data source to an API endpoint.

I can help if you want. Just ask if you need more clarification. I just gave an overview basically.

@abduljaweed8131

The main reason the cloud is used among big companies is that it's cheap compared with building your own data center.

Also, AWS and Azure have free tier plans, which are enough for you to learn as well.

Thanks for the tutorial. I am trying to connect VS Code to the same EC2 instance we created in this project, but it shows that permission is denied due to the public key. I followed each step from your other video, 'How to remotely SSH (connect) Visual Studio Code to AWS EC2'. Please help me with this. I have tried everything, but it shows me the same issue. I am using a MacBook. Thank you for your time!

Maybe you need to restrict the permissions on the .pem file by running "chmod 400 path/to/filename". Another issue might be the syntax in your config file. Make sure you write it exactly the way it should be, with lower case where it is supposed to be, etc.

guys anybody can help with timestamps on the videos ? it will be really helpful

I am doing the project and putting it on github and linkedin when I finish...Thanks

Make video on AWS data analytics services project

Thank you!

Domain name pls

The airflow standalone command is getting stuck. It is not creating the user and password. @tuplespectra, could you please help?

Can you kill the server (Ctrl + C) and then restart it?

hey were you able to fix this error?

I also faced the same issue !

@tuplespectra well this really helped, thanks :)

@kartikeymishra2673 You are welcome.

@tuplespectra Hey, I'm getting a TypeError. It is not getting stuck, but it is not creating the user and password either! Can you help please?

Thank you so much for such an awesome tutorial. I wanted to run this code and I am getting this error:

WARNING - Error when trying to pre-import module 'airflow.providers.amazon.aws.sensors.s3' found in /home/ubuntu/airflow/dags/zillowanalytics.py: No module named 'airflow.providers.amazon'

Please help!

@tuplespectra could you please help?

@Nari_Nizar did you remember to do a "pip install apache-airflow-providers-amazon"?

@tuplespectra it worked! Thank you very much, this is an excellent project!

@Nari_Nizar Thanks. I'm glad it worked and you found the project valuable. Please help Like our videos and Share with your friends, teammates, and colleagues so more people can benefit. Thanks so much.
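A "No module named ..." warning like the one in this thread can be checked directly from Python. The sketch below reports which interpreter is running and whether a module can be located from it. This matters because a package installed into one environment (for example, system-wide) is not visible from another (for example, a virtual environment). The helper `is_importable` is a hypothetical name, and the module names are taken from the warning above.

```python
import importlib.util
import sys


def is_importable(module_name: str) -> bool:
    """Return True if `module_name` can be located by the current interpreter."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except ModuleNotFoundError:
        # Raised for dotted names when a parent package is itself missing.
        return False


print("interpreter:", sys.executable)
for name in ("airflow", "airflow.providers.amazon"):
    print(name, "->", is_importable(name))
```

Run it with the same Python that starts Airflow. If `airflow` is found but `airflow.providers.amazon` is not, installing the provider package into that same environment is the likely fix.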