RMSprop Optimizer Explained in Detail | Deep Learning

VloŇĺit

- ńćas pŇôid√°n 27. 07. 2024

- RMSprop Optimizer Explained in Detail. RMSprop Optimizer is a technique that reduces the time taken to train a model in Deep Learning.

The path of learning in mini-batch gradient descent is zig-zag, and not straight. Thus, some time gets wasted in moving in a zig-zag direction. RMSprop Optimizer increases the horizontal movement and reduced the vertical movement, thus making the zig-zag path straighter, and thus reducing the time taken to train the model.

The concept of RMSprop Optimizer is difficult to understand. Thus in this video, I have done my best to provide you with a detailed Explanation of the RMSprop Optimizer.

‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ

‚Ė∂ Momentum Optimizer in Deep Learning: ‚ÄĘ Momentum Optimizer in ...

‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ

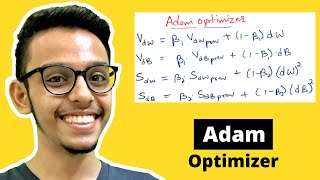

‚Ė∂ Watch Next Video on Adam Optimizer: ‚ÄĘ Adam Optimizer Explain...

‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ

‚úĒ Improving Neural Network Playlist: ‚ÄĘ Overfitting and Underf...

‚úĒ Complete Neural Network Playlist: ‚ÄĘ Neural Network python ...

‚úĒ Complete Logistic Regression Playlist: ‚ÄĘ Logistic Regression Ma...

‚úĒ Complete Linear Regression Playlist: www.youtube.com/watch?v=mlk0r...

‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ

Timestamp:

0:00 Agenda

1:42 RMSprop Optimizer Explained

5:37 End

‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ‚ěĖ

Subscribe to my channel, because I upload a new Machine Learning video every week: czcams.com/channels/JFA.html...

thankyou so much for uploading these videos, your explanations are easily understandable

Such clear explanation

how to initialize value of Sdw and Sdb?

Hello thanks for the info. But you didn't mention the purpose of the square for the gradient.

Hand's down,best explanation ever:)

Haha‚Ķ Thanks you so much ūüėĄ

What is (dw)^2?

What is S?

your channel is highly underrated, it deserves a lot more audience

Thank you for this considerate comment ūüėá

waiting for SVM since you explain so nicely..thnks

Thank you! I will upload SVM video after finishing RNN series

Hi Sir, any plan of uploading videos on support vector machines? If yes then, please try to cover the mathematical background of SVM as much as you can ...

Anyway your content is really appreciable...Thanks !

Thank you so much for your suggestion! Yes, I will be making video on SVM and covering mathematical details behind it.

You are the best, thanks dude ūü§ô

You‚Äôre welcome ūüėá

If situation with w and b would be opposite values of gradients on the vertical axis were small and values on horizontal axis where large would RMSprop slow down the training by making vertical axis values larger and horizontal axis values smaller?

No no… it will still make the training faster. Vertical horizontal is just an example i am giving. Realistically, it can be in any direction. In every direction, its gonna work the same way.

good

HiÔľĆIs it correct that you set the vertical coordinates to w and the horizontal coordinates to b? I think it should be the other way around.Because whether the goal can be reached in the end depends on w rather than b.

Hi… neither we set vertical to w nor b. Its just an example given… in a model.. there are many axis, not just x and y if we have more than 2 number of features. So a model can take any axis as any w or b. and it doesn’t matter as well which axis is for waht

@@CodingLane thanks!So in practice this is not going to be a 2D planar image but a multidimensional image?And which parameters can determine the point of convergence in gradient descent?W OR b?

So i guess what he means is that if you get a high gradient, you will be updated a lower amount and if you get a low gradient, you will be updated a higher amount.

You're incredible

Thank You Marc! Glad you found my videos valuable.

Explain ADMM also

Man and what kind of LOSS should I use when training using RMSprop optimizer?

You can use any loss function