Data Validation with Pyspark || Real Time Scenario

- Added: 16. 12. 2023

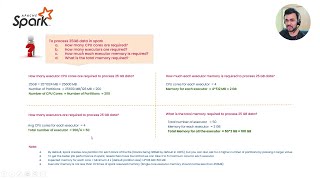

- In this video we will discuss how to perform data validation with PySpark dynamically.
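Below is a minimal sketch of the core check. It assumes the expected schema is kept as data (a column-name to type mapping) instead of being hard-coded. The helper name `validate_schema` and the example types are hypothetical. The only PySpark behaviour it relies on is `df.dtypes`, which returns a list of `(column_name, type_string)` tuples.

```python
from typing import Dict, List, Tuple


def validate_schema(actual_dtypes: List[Tuple[str, str]],
                    expected: Dict[str, str]) -> List[str]:
    """Compare df.dtypes against an expected {column: type} mapping.

    Hypothetical helper for illustration. Returns a list of error
    messages; an empty list means the schema matches.
    """
    actual = dict(actual_dtypes)
    errors = []
    # Every expected column must exist and have the expected type string.
    for col, dtype in expected.items():
        if col not in actual:
            errors.append(f"missing column: {col}")
        elif actual[col] != dtype:
            errors.append(
                f"type mismatch for {col}: expected {dtype}, got {actual[col]}")
    # Columns present in the data but not declared are also reported.
    for col in actual:
        if col not in expected:
            errors.append(f"unexpected column: {col}")
    return errors
```

For a DataFrame `df`, call it as `validate_schema(df.dtypes, {"id": "int", "name": "string"})`. Because the expectation is a plain dict, it can be loaded from a reference table or file at run time. That is what makes the check dynamic and not tied to one dataset.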

Data Sources Link:

drive.google.com/drive/folder...

#pyspark #databricks #dataanalytics #data #dataengineering

Amazing content. Please keep a playlist of real-time industry scenarios.

Very helpful! Thank you

Nice

Great explanation ❤. Keep uploading more content on PySpark.

Thank you, I will

I just started watching this playlist. I'm hoping to learn how to deal with schema-related issues in real time. Thanks!

Thanks a million bro

Cool, but is it like this every time? Like, you have a reference df containing all the columns and the file name/path, and you have to iterate over it to see if it's matching?

Yes

How did you define reference_df and control_df?

We define them as tables in a database. For now, I have used them as CSV files.

While working with Databricks, we don't need to start a Spark session, right?

No need, brother. We can continue without defining a Spark session; I just kept it for practice.