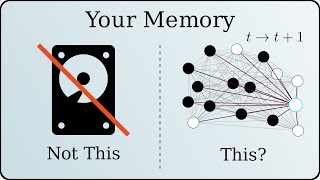

What are Neural Networks || How AIs think

- Added on 14 June 2024

- Big thanks to Brilliant.org for supporting this channel. Check them out at www.brilliant.org/CodeBullet

Check out Brandon Rohrer's video here: • How Deep Neural Networ...

Become a patron to support my future content and get sneak peeks of what's to come.

/ codebullet

Check out my Discord server

/ discord

I came to learn, realised I'm not smart enough and stayed for the drawings.

If you like AI applied to games you might want to give my channel a check. Cheers!

Daporan self advertising on someone else’s channel isn’t cool, mate

I had no bad intentions, but I understand your view.

If you want to learn more about Machine Learning / AI, you should give this playlist by Andrew Ng a try. czcams.com/play/PLLssT5z_DsK-h9vYZkQkYNWcItqhlRJLN.html It's really great. I've found these exercises go well with the material: github.com/everpeace/ml-class-assignments/tree/master/downloads

I'm not smart enough so I turned the sound off and looked at the pictures 😉

me, a biologist, hearing him explain biology...yeah thats about right

Hey someone here in 2019

@310garage6 ok boomer

@aidanbrennan426 that is not how you use it.

Macaroon_Nuggets ok boomer

@aidanbrennan426 knew you would do that

6:25 smooth transition there

So smooth I had to click your time stamp to realize there was a transition

By 2:29 I fully understood the concept behind neural networks... I'm a third-year comp sci student and have never heard anybody explain this so perfectly. Thank you !! Very impressive !!!

CB: I will just run through this, you get it

Me: no I don’t

Yeah he sux ASS

@monkeyrobotsinc.9875 That was not the point of the comment.

He's just saying you understand 1+1.

The input he drew on the bottom right is what he's using to compare to the images on the right. If they match up, it's a +1. If not it's a -1. Red lines= x1, blue lines= x-1.

This is Ben

"Hello, I'm Ben..."

"Hello Ben"

"...And I'm an anonymous neuron"

an*

I want Ben to appear in biology books, he's a very pretty neuron.

Isn't the neuron's name Ben, how is he anonymous?

The sound's too low in the first part, it's making me neurotic... lol

Hello.

Neuroscientists are just brains trying to figure themselves out...

Holy shet. This is too real for me :D

Dats Deap

Esphaav trouth lol

Christopher Dibbs if you are being a dumb ass, don’t worry: you are just a meat bag with electricity going through it, so it is going to happen

Christopher Dibbs AAAAAAAAAAAAAAA

Honestly, I would watch any programming course taught by you in this style.

So if the brain is made up of 100 billion neurons, does that mean that if we had computers powerful enough to simulate evolution with creatures of 100 billion neurons, they could eventually become as intelligent as us?

A real brain has much more variety in neuron connections than just 0 and 1. There are inhibitors, circles, and more and more... So we are not even close to creating computers which could support it. It's much easier to brute-force a RAR password than to do that.

And that is why people think that Artificial Intelligence might someday develop far enough to rival human beings' smarts.

@ardnerusa You're right, but on the other hand technology is always advancing. If you had told people a hundred years ago that it's possible to send a human to that one ball in the sky that goes from crescent to gibbous and back again, or that a computer could be smaller than your hand and be used to hear someone's voice instead of being a gigantic machine that's as big as two rooms (and can only do basic arithmetic lol), they wouldn't have believed you.

Quantum computers can already create states that are _neither 0 nor 1_, which opens the possibility for neuron contacts and probabilities. It's already possible to mimic the action of inhibitors and circles, and perhaps in less than a century "are you human?" captchas will not even exist because there'll be no problem AI cannot solve.

However if by intelligence you mean emotional intelligence...no one can say. Honestly it boggles my mind to think that someone someday can type code which enables a computer to feel emotions like genuine frustration or excitement which isn't pre-programmed. But it may just happen, who knows.

This is exactly why robots are so limited in their use. Not robots in general, but robots made to do a specific thing are rubbish at anything else. Our brains, however, hold multiple connections that let us do many, many, many tasks. Combine that with personality, self awareness, intuition and creativity and you have a neural network beyond anything we can make. At least, for now.

@otaku-chan4888 Since evolution created human emotions, it seems likely that one day, when we have neural networks as complicated as our brains, they could learn emotions through evolution algorithms. But I agree that it's probably impossible that a person would be able to manually type code that equates to emotional intelligence.

For some reason I want "a bullet of code" to be a code term.

It is a bullet of code is just semen shooting out of a shaft

WHIT3B0OY thanks, I hate it

@georgerebreev Outstanding Move

Maybe for read streams and write streams, what you send into a stream can be called a bullet of code, since you *sorta* shoot it

@thefreeze6023 a bullet of code is the "scientific" term for having your code break so spectacularly that you just snap, grab a gun and end it.

brain.exe has stopped working

Xaracen it can... under the right circumstances

"So red is positive and blue is negative"

*My life is a lie*

The only thing I heard is "mutatedembabies"

And that’s all you need to know...

This is the most helpful video I have seen. The other videos don’t really get into detail of how they work.

I know this video has far fewer views than some of your other videos, but I'm loving it. Please keep up this tutorial style video and don't be discouraged. I really appreciate it!

I love your videos, and truly enjoy watching them.

I appreciate the time & effort you put into making them, and would love to see more videos like this where you teach others your vast knowledge & skills

I’m barely able to make a video a month, so I totally understand the slog.

Just thought I’d let you know that I think you’re doing an awesome job. I’ve been a teacher for over a decade, and just want to extend a helping hand if you ever need help in teaching/making educational videos.

Congrats on 100K this channel is really growing fast!

yeees Im so looking forward to your next video! Pls keep it up!

These videos are great! Do you plan to do an ANN implementation/coding example, like before? I personally would find that really valuable. Also, any suggestions on practical Neural Network learning resources?

I would like the video connecting neural networks to genetic algorithms as well as a code video. Great stuff man.

I'm having an AI test in 6 hours

thank you Code Bullet

Wow, you explained it so I could understand it. Great job!

Great NN video and thank you CB!

I really enjoy learning from your videos

Great stuff! Thanks! 👍🤓

Fantastic man. Your videos are great...

I love this, and I'd love to see a video on how to actually combine NNs and the genetic algorithm! Keep up the awesome work :D

Thanks! You are really inspiring :D

Funnily enough, in class I am learning how neural networking works, and this video has been quite useful on helping me understand it better.

Thanks for the video, bullet!

Wow, very great and simple video!

wtf your subs doubled since like 3 days ago nice mate

benny boy whaha yea I am one of them, watched one video. Then the offline Google thingy one popped up in recommendations. And then I subscribed ^^ interesting stuff.

ye its good. nice :3

I think you'll like my channel then. I've got AI demos and will be doing more tutorials soon. Let me know how you liked it, cheers!

If you like this channel, then you might want to give my channel a check. It's focused around AI. More content and tutorials are coming.

Daporan I'll check it out. I don't normally like advertising on other videos, but you've been nice enough.

I would greatly appreciate the followup video you mentioned about the connection of genetic weight evolution with neural networks

Nice explanation! In future videos it might be a good idea to invest more time in volume balancing though 😂 that one talking section in the middle and the outro music I just got absolutely blasted lol

Amazing as always!

Cool! For my CS final project this year I'm doing a basic neural network for simple games (snake, pong, breakout)

Good luck!

Good luck bro, it's a lot of fun. Check my channel if you need some inspiration for fun games to do AI on.

I looked away for like 2 seconds and suddenly I didn't understand and had to rewind. I love the drawings

10:26 wait... no way that you freehand drew that

love ur vids man more plz

Very informative thanks for the video

Very interesting and I'm still waiting for part 2...

This was surprisingly easy to follow

Please do the video combining genetic algorithm and neural networks. This is great!

Can you please upload your Dino code to your github

please teach me this amazing AI. I'm waiting for more. Great Job!

Came here for the knowledge, subbed for the humor

Where’s the next part of this series dude? I. Need. It. I need it!

Hey CodeBullet. I’m an upcoming senior in HS and over the past year I’ve found a passion for coding. I’ve been trying to get into ai and ML for the past few months but with no luck. Could you go more into depth with this specific neural network?

Hi, I'm trying to implement NEAT in java too, but I'm having problems with speciation, my species is dying very fast, and my algorithm could not solve a simple XOR problem, if you make a video explaining some details about NEAT would be pretty cool, and maybe I can find what I'm doing wrong in my code, I've been able to do several projects using FFNN, but NEAT seems to be much better at finding solutions, especially when you do not know how many layers or neurons you need to complete the task.

(I'm Brazilian and I'm going to start the computer sciences course soon, your videos are very good, keep bringing more quality content to youtube and sorry for any spelling mistakes)

Damn this channel exploded recently!

I am seeing neural networks rn in Python classes in college, this was very helpful

I’m watching this and revising science at the same time, cheers mate

Great job!!!

6:30 and onwards reminded me so much of tecmath's channel... Like holy shit xD

Amazing vid!!! plz more

@Code Bullet

You could use an Info Card to take people to the Video/Channel you mentioned at 5:00

Thank you Ben i got a four in biology class yesterday.

Hey, would be great to have this final tutorial example thing combining neural network and genetic algorithms ^^

Waiting for more vids

Hi Code Bullet, I have a question about the activation function.

There are a lot of activation functions; however, my teacher said that the best one is Sigmoid (or tanh). But why? And is it really the best, just because it's a continuous function? If it is, can we design our own activation function and have it actually work well? I know that in CNNs they use ReLU instead of Sigmoid. So what happens if we use Sigmoid in a CNN, or even our own activation function?

My teacher never answers questions seriously; they just said that it works better when you actually try it. But that still doesn't answer WHY it is the best. It might be better compared to the non-continuous step function, but is it better than all of the other activation functions? And also, why is Sigmoid (or tanh) the only continuous activation function in my book?

I think this topic will be an interesting tutorial video. Thank you.

The reason we use activation functions is to introduce non-linearity into the nn model. Otherwise we can achieve the same thing with a single matrix multiplication. With more layers and non-linear activation functions, the model becomes a non-linear function approximator.
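To make the "single matrix multiplication" point concrete, here is a minimal sketch in plain Python. The weight matrices `W1` and `W2`, the input `x`, and the helper names are made up for illustration; they are not taken from the video.

```python
def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def matmul(a, b):
    """Matrix product of a and b, both given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def step(v):
    """Element-wise step activation: 1.0 where the value is positive, else 0.0."""
    return [1.0 if x > 0 else 0.0 for x in v]


# Hypothetical weights for a 2-input, 2-hidden, 1-output network.
W1 = [[1.0, -1.0], [-1.0, 1.0]]
W2 = [[1.0, 1.0]]
x = [1.0, 0.0]

# Two layers with no activation in between...
two_linear_layers = matvec(W2, matvec(W1, x))
# ...give the same result as one layer whose weights are W2 * W1.
one_collapsed_layer = matvec(matmul(W2, W1), x)
# Putting a non-linear activation between the layers breaks that equivalence.
with_activation = matvec(W2, step(matvec(W1, x)))
```

With these particular weights the purely linear stack outputs 0 for every input, while the version with the step function can tell `[1, 0]` apart from `[1, 1]`.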

The reason we like to use functions like Sigmoid, Tanh and Relu is that they are easy to differentiate. There is a supervised learning technique called gradient descent through backpropagation, which is used in many tasks instead of Genetic Algorithms. However, gradient descent requires computing the gradient of the "cost" function (fitness function if you want to think of it that way) of the network with respect to the weights. This is a massive chain rule problem, and since the step function has a gradient of 0 everywhere except at the jump, where it is undefined, all of the calculations become 0, making it impossible to use backpropagation.
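A quick way to see the gradient problem is to estimate the slope of each function numerically. This is only a sketch: `numeric_gradient` and the sample points are chosen for illustration, and real frameworks compute these derivatives analytically.

```python
import math


def step(x):
    """Step activation: 1.0 for positive input, otherwise 0.0."""
    return 1.0 if x > 0 else 0.0


def sigmoid(x):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def numeric_gradient(f, x, eps=1e-6):
    """Central-difference estimate of df/dx at x."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


# Sample points away from the jump at 0.
points = [-2.0, -0.5, 0.5, 2.0]
step_grads = [numeric_gradient(step, p) for p in points]
sigmoid_grads = [numeric_gradient(sigmoid, p) for p in points]
```

Every entry of `step_grads` is zero, so a weight update driven by the chain rule never moves, while `sigmoid_grads` gives a usable signal at every point.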

I advise you look up backpropagation and how it works. 3Blue1Brown has an awesome video about it:

czcams.com/video/IHZwWFHWa-w/video.html

Also, Sigmoid is deemed weaker than Tanh and Relu these days. Lots of Tanh and Relu models are dominating with Relu coming out on top. (ofc it really depends on the model's context)

KPkiller1671 but still, if we're just talking about being differentiable, there might be other activation functions that are harder to differentiate but work better. Easy doesn't mean the best.

About the polar value that is mentioned by NameName, I never heard about that. I think I'll look for it.

NameName ah ic

I'm not sure if sigmoid/tanh is the best way to go all of the time. ReLU, for example, reduces gradient vanishing and enforces sparsity (if below 0, then activation = 0, which translates into fewer activations, which tends to be good for reducing over-fitting).
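Both effects can be sketched in a few lines. This is a simplification that ignores the weights and only multiplies the activation derivatives along a chain of `depth` layers; the depth and the sample pre-activations are arbitrary choices for illustration.

```python
import math


def sigmoid_grad(x):
    """Derivative of the logistic sigmoid; its maximum is 0.25 at x = 0."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def relu(x):
    """ReLU activation: passes positive values, clamps the rest to 0."""
    return max(0.0, x)


def relu_grad(x):
    """Derivative of ReLU: 1 for positive input, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


depth = 10
# Best case for sigmoid: every layer sits at its steepest point (0.25 each).
sigmoid_chain = sigmoid_grad(0.0) ** depth
# An active ReLU unit passes the gradient through unchanged.
relu_chain = relu_grad(1.0) ** depth

# Sparsity: negative pre-activations are zeroed out.
pre_activations = [-1.5, 0.3, -0.2, 2.0]
sparse = [relu(v) for v in pre_activations]
```

Even in sigmoid's best case the chained factor drops below one in a million after ten layers, whereas the ReLU factor stays at 1, and half of the sample units end up exactly zero.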

The why one is better or worse than the other in different cases can hardly ever be found analytically, though.

Max Haibara you will find in machine learning, if something is faster to differentiate your model can be learned faster. Also relu has a neat advantage over all other activation functions.

You can construct residual blocks for deeper networks. If the network has too many layers, an identity layer would be needed somewhere. Relu allows the network to do this easily by just setting the weight matrix of that layer to 0s.

A coded example would be nice. I like your videos

Not studying computer science or anything but this was very interesting! You say stuff like weights and network all the time in your videos so this helps explain how.

Gist is layer 1 is input of what you want and is assigned a value. Layer 2 is calculating the layer 1+ neural connections + B. Layer 3 is calculating layer 2 + neural connections + B. These calculations always lead to the correct output because it checks to see if value is more than/ equal or less than/equal to 1. So what are the numbers that will always give you the correct output? That is what the AI is going to decide on after lots of trial and error I'm assuming and it probably always starts with a random value. The neural connections are weights which also the AI decides. So AI evolving is just them guessing the right numbers. Pretty simple.

You should definitely do a coded example of this, even if it's 4 years late...

Hey, Just a random question.

Why did you need to check 4 times on the second level?

Couldn't the ones that check for black just return a negative to the third layer instead of having the two yellow ones?

the 2nd row and 4th row in the 3rd layer can just connect to both of the neurons in the 3rd layer, but with a blue one to the ones they're not connected to currently

will that not work?

Edit: I realize that it won't work with the current numbers because if you have 3 blacks and 1 white for example, it will have +2 and -1 and still be positive, but what if we make the negative one much bigger? for example, positive adds 1 and negative just makes it negative. so if there's at least one negative, send 0 no matter what, if all positive, send 1.

Also, why does the second level send 0? If it sent -1 instead, wouldn't the bias just be redundant?

Is this a valid neural network? prnt.sc/10b6pbo

Did I make a mistake here?

is it not allowed to have blue ones on the second layer?

is it not allowed to have numbers other than -1

"...And I will probably make a video about combining neural networks and the genetic algorithm sometime in the future"

...

Wish that happened, I miss these educational tutorial videos. The new ones are fun though, love your work!

Thank you Code Bullet, I am a 10 year old PCAP and I am trying to learn AI, or more specifically DL. Everywhere I search I don't understand anything, but I understood when you told me, and again, thank you

A neuron doesn't just sum up the inputs and then apply the activation function; it also adds a "bias" to the inputs.

Output = H(b + Si)

where H is the activation function, b the bias and Si the sum of the inputs.
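As a minimal sketch of that formula in Python: the step function as H, the weights, and the bias of -1 are assumptions picked for illustration, and Si is taken to be the weighted sum of the inputs.

```python
def step(x):
    """H in the formula: fires (1) when its argument is positive, else 0."""
    return 1 if x > 0 else 0


def neuron_output(inputs, weights, bias, activation=step):
    """Compute H(b + Si), where Si is the weighted sum of the inputs."""
    weighted_sum = sum(i * w for i, w in zip(inputs, weights))
    return activation(bias + weighted_sum)


# With a bias of -1, both inputs must be active for the neuron to fire.
out_both_active = neuron_output([1, 1], [1, 1], bias=-1)
out_one_active = neuron_output([1, 0], [1, 1], bias=-1)
```

Here the bias acts as a threshold: it shifts the sum before the activation function decides whether the neuron fires.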

I really think you should amend your title. I believe as it stands, a lot of newcomers to neural nets are going to think that Genetic Algorithms are the be all and end all of training a neural network. I got caught up in this mess myself before discovering the world of gradient descent (and other optimization techniques) and backpropagation. Of course supervised learning techniques contain a lot more maths and are a fair amount more complex, but I don't think people should be told that this is definitively how all neural networks work.

This video taught me more about neurons than I learned in school

I'd like to see more of this applied

I didn’t understand the “oversimplified” explanation.

Imagine neuroscientists

With the checkerboard pattern, you can do the exact same thing with 1 XOR gate, 2 XNOR gates and 2 AND gates
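A sketch of that gate version, assuming the input is a 2x2 image with one bit per pixel (the function and parameter names are made up here): one XOR checks that the top two pixels differ, two XNORs check that each diagonal matches, and two ANDs combine the three results.

```python
from itertools import product


def xor(a, b):
    """True when the two bits differ."""
    return a != b


def xnor(a, b):
    """True when the two bits are equal."""
    return a == b


def is_checkerboard(top_left, top_right, bottom_left, bottom_right):
    """Detect a 2x2 checkerboard using 1 XOR, 2 XNOR and 2 AND gates."""
    neighbours_differ = xor(top_left, top_right)
    main_diagonal_same = xnor(top_left, bottom_right)
    anti_diagonal_same = xnor(top_right, bottom_left)
    return (neighbours_differ and main_diagonal_same) and anti_diagonal_same


# Brute-force all 16 possible images and keep the ones that are accepted.
matches = [p for p in product([0, 1], repeat=4) if is_checkerboard(*p)]
```

Only the two checkerboard images pass, which is the same yes/no answer the network in the video is built to give.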

"Ah man this is confusing"😂

I've gotten so many super long ads for programming courses. CodeB's getting serious audience targeted ads, I hope they're paying out well. Also def not skipping cuz they hit the nail on the head on this one xd

Did you draw this yourself, well that's something.

0:50 Reminds me i have yet to learn Nervous System for exams

Came for no reason, stayed because big funny and smart

Very helpful:)

kewl character ya drew

That perfect checkmark

Funny how I knew literally every single thing in more detail because well... I have a computer science Masters degree. But I still stayed for the entertainment you provide because yes baby

3blue 1 brown also made a great video about neural networks definitely worth seeing

Code Bullet, Ben's mother cares about his soma!

Seriously though, I was thinking, and I think there is a reason why all of your asteroids playing AIs devolve into spinning and moving. The problem that causes this is your input mechanism. It is too simple.

Stop me if I'm wrong, but you explained your input mechanism as having the following inputs: Whether or not there is an asteroid (possibly distance) in each of 8 directions around the ship, ship facing, and ship speed. I do not remember if you give it ship position at all, but that is irrelevant in my opinion.

The problem with this setup comes from the fact that to the AI, asteroids seem to vanish from moment to moment as they pass between the directions if they can fit between 2 rays comfortably. As such, the AI really isn't tracking asteroids from moment to moment.

My solution is, what if you fed the AI distance, direction, and heading of each of the closest 8 asteroids in order from farthest to closest. This will allow the AI to have some object persistence, as well as actual tracking for the objects. Likely the AIs will be able to develop more complex strategies as a result.

The overhead of such an approach is that you have about 3x the number of inputs, and while it's a linear increase in the number of inputs, it may result in an exponential increase in the number of neurons. However, a good GPU will likely be able to handle this.
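Here is a rough sketch of what that input encoding could look like. Everything in it is hypothetical: the `(x, y, vx, vy)` asteroid format, the function name, and the zero padding when fewer than 8 asteroids exist are assumptions, not how the actual game is written.

```python
import math


def asteroid_inputs(ship_xy, asteroids, count=8):
    """Build a flat input list: (distance, direction, heading) for the
    `count` closest asteroids, ordered from farthest to closest."""
    sx, sy = ship_xy
    described = []
    for ax, ay, vx, vy in asteroids:
        dx, dy = ax - sx, ay - sy
        distance = math.hypot(dx, dy)
        direction = math.atan2(dy, dx)  # angle from the ship to the asteroid
        heading = math.atan2(vy, vx)    # angle the asteroid is travelling in
        described.append((distance, direction, heading))

    nearest = sorted(described, key=lambda d: d[0])[:count]
    nearest.reverse()  # farthest of the selected asteroids first

    inputs = [value for triple in nearest for value in triple]
    # Assumption: pad with zeros so the network always sees 3 * count inputs.
    inputs += [0.0] * (3 * count - len(inputs))
    return inputs


example = asteroid_inputs((0.0, 0.0), [(3.0, 4.0, 1.0, 0.0), (0.0, 1.0, 0.0, 1.0)])
```

With 8 asteroids this gives 24 inputs instead of 8 rays, which is the roughly 3x increase mentioned above. Zero padding is a debatable choice, since a distance of 0 could be read as "asteroid on top of the ship"; a large sentinel distance might work better.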

I would be interested in how this affects the AIs you bred, and whether or not they develop more intelligent techniques with the information they would be given.

Hello, I am new to this channel. I checked out Brilliant and have already started learning ANNs, but I wonder how to actually program with them. What program do you use, and how do I learn to code it and such? Thank you in advance

So how exactly are you using evolution combined with the neural networks? btw this was a great explanation video on explaining the neural networks. I understood the basic concept, but didn't understand how the weights correlated to actual math.

#ask will you make tutorials on how to code AIs

and how did you start making them

I don't see good tutorials on how to code them

Here’s an idea, what about putting an AI against a Rhythm game like guitar hero/clone hero or any Rhythm game like necrodancer. Could you possibly make an AI to complete a game?

For anyone confused with the math at 8:00, he did not include his first step of creating the numbers in the far left column. The far left numbers change based on what input is used. I suppose this could be assumed, but it took me a second to realize it.

Question: When mutating the candidates in a population, how many mutations do you give to each candidate?

You usually don't dish out an amount of mutations. Quite often you will have a mutation rate of about 1%, however it is not set in stone. Another popular approach is to mutate at a rate of 1/successRate. Hope this helps :)

Can you please do a video on how to actually code/create those neural networks? That's the part I'm struggling with.
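A per-weight mutation rate can be sketched like this. The flat list of weights, the replacement range of -1 to 1, and the function name are assumptions for illustration; many implementations nudge the weight slightly instead of replacing it.

```python
import random


def mutate(weights, mutation_rate=0.01, rng=random):
    """Return a copy in which each weight is independently replaced by a
    new random value with probability `mutation_rate`."""
    return [
        rng.uniform(-1.0, 1.0) if rng.random() < mutation_rate else w
        for w in weights
    ]


rng = random.Random(0)  # seeded so the run is repeatable
parent = [0.5] * 1000
child = mutate(parent, mutation_rate=0.01, rng=rng)
changed = sum(1 for p, c in zip(parent, child) if p != c)
```

Because every weight rolls its own dice, the number of mutations per candidate is not fixed; with a 1% rate and 1000 weights it averages about 10.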

At 9:59 when you re-added the bias neuron wouldn't you subtract 1 from the second-row neurons too or am I just confused.

Can someone explain where at 6:46 he got the 3rd -1 even though there are only two boxes? I understand how he got -1 it’s just confusing me why there’s three

So, if you have a neuron that you want to require 3 positive connections for it to activate, then you would make the bias -2 right?
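One way to check this, assuming every connection has weight +1, inputs are 0 or 1, and the neuron fires only when the total is above 0 (the `fires` helper is hypothetical):

```python
def step(x):
    """Fire (1) only when the total is strictly positive."""
    return 1 if x > 0 else 0


def fires(inputs, bias):
    """Neuron with all connection weights equal to +1."""
    return step(sum(inputs) + bias)


# Try 0, 1, 2 and 3 active inputs with a bias of -2.
results = {
    active: fires([1] * active + [0] * (3 - active), bias=-2)
    for active in range(4)
}
```

Under those assumptions only the case with all three inputs active gives 1, so a bias of -2 does the job; more generally, requiring n active inputs means a bias of -(n - 1).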

U hurt my head🤕

@310garage6 haha, oops

6:30 / 11:55 RIP headphones... :(

Maybe run your audio clips through a filter like ReplayGain that tries to make sure the audio has about the same overall volume before editing all that stuff together?

3:35 Best part of the video

This is pretty simple

For those who are a bit lost think of it as a filter or a yes/no gates you have to pass through

Even though I didn't find anything useful for me, it was a great video! Just try to get your audio a little bit more even throughout the video next time

Sorry if I sound really dumb for saying this but wasn't the bias supposed to always give out a 1? If so why does every equation end with -1 when the bias is factored in?

Keep posting on AI. I want to learn AI coding and you have natural teaching abilities.

So uhh if I want it to have a specific function or command, how am I supposed to get this down? I'm trying to have mine be capable of encryption but I'm not sure how I get that in.