Faster than Rust and C++: the PERFECT hash table


- Added May 20, 2024

- I had a week of fun designing and optimizing a perfect hash table. In this video, I take you through the journey of making a hash table 10 times faster and share performance tips along the way.

00:00 why are hash tables important?

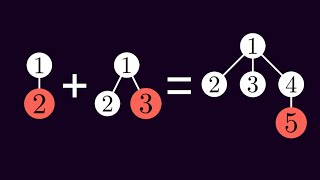

00:31 how hash tables work

02:40 a naïve hash table

04:35 custom hash function

08:52 perfect hash tables

12:03 my perfect hash table

14:20 beating gperf

17:24 beating memcmp

21:46 beating SIMD

26:01 even faster?

30:06 pop quiz answers

31:45 beating cmov

33:09 closing thoughts

Thanks:

Dave Churchill: / davechurchill

My Twitch chat: / strager

Jennipuff: / jenipuff

Attribution:

Thumbnail artwork by Jennipuff

JavaScript logo by Christopher Williams under MIT license

PHP logo copyright Colin Viebrock, Creative Commons Attribution-Share Alike 4.0 International

Ruby logo copyright © 2006, Yukihiro Matsumoto, Creative Commons Attribution-ShareAlike 2.5

Category: Science & Technology

Click 'read more' for performance tips with timestamps 👇

Source code of all of the techniques (for educational purposes) (warning: poorly organized):

github.com/strager/perfect-hash-tables

Production C++ implementation of my hash table:

github.com/quick-lint/quick-lint-js/blob/9388f93ddfeca54f73fab398320fdcb8b3c76a66/src/quick-lint-js/fe/lex-keyword-generated.cpp

My JavaScript/TypeScript compiler project:

quick-lint-js.com/

Rust reimplementation of my hash table:

github.com/YangchenYe323/keyword-match-experiment

Performance tips:

04:16 1. Try it

08:18 2. Make assumptions about your data

16:24 3. See how other people did it

20:08 4. Improve your data

20:28 5. Keep asking questions

21:17 6. Smaller isn't always better

24:57 7. Use multiple profiling tools

25:13 8. Benchmark with real data

29:46 9. Keep trying new ideas

32:42 10. Talk about your optimizations

Please fix this 😁

Fix what? What's broken?

@strager_ Fix your comment on top 😁

What is broken about it?

Is this meant to be a pinned comment? This shows up as a normal comment to me.

Extreme optimization can be an addicting game. Very cool to see it documented.

you could have typed that faster ;)

My colleagues get mad if I do it, but it's so much fun.

@voidwalker7774 u coudof usd fewer wrds:)

@user-dh8oi2mk4f ucudvusdfwrltrz

@Yutaro-Yoshii ur nm cdv usd smplr wrtng sstm

I can't believe I watched a 34 minute video on hashing without getting bored and wandering off, this was an amazingly interesting talk.

He optimized his presentation, too. :)

It is because this wasn't a try-on haul. 😂

@bittikettu The first rule of try-on hauls is: you do not talk about try-on hauls.

wait what? I just realized the video was 30+ minutes long because of this comment o.O

The video was only 17 minutes long, but I watch at 2x speed ;)

This video was incredibly easy to watch, you had my attention the whole time. I really hope the algorithm blesses you, because you deserve it.

Thank you!

Just appeared in my recommended, seems like a good sign!

@strager_ honestly the thumbnail is what grabbed my attention lol

I’m with Burrak on this one - such a pleasant video to stay engaged with.

Please do more videos.

I think it’s happening!

This guy is every programmer from each generation wrapped into one. I love this dude.

👶👦👨🧔‍♂️👨‍🦳👴

He is the 1985 model of Dennis Ritchie

Not every generation.. unless he’s wearing programming socks 🥹

@Luuncho 😁 Maybe that's why he's never shown us his toes.

P.S. No socks, but I have an Intel 4004 t-shirt.

@Luuncho creepy.

Knowing your data is such a HUGE programming concept that isn’t talked about nearly as much as it should be.

It's because CS education has been simplified to the point of stupidity and dogma - they are very big on commenting your code, right? - originally it was the variables and data flow that were asked to be documented and that specifically the code should _not_ be commented - it was understood that the code should merely be written in a way that documents itself (easy to read) because the code is the authority on what the code does - but somewhere between the 1980s and now, all we get is dogmatic crap from "expert programmers" that still write code with comments like "// increment the index" and "// test for null" because it's really easy for a CS professor to grade junk like that, while it's problematic to grade people on variable and function names and types, the actual stuff that needs to be very clear.

Can you share an example of an expert programmer who writes comments like "// increment the index"?

@styleisaweapon Wow, someone's really butthurt about comments out of all things.

While i do agree that the "Clean Code" principles do more harm than good ( czcams.com/video/tD5NrevFtbU/video.html ) i do strongly disagree with comments being absolutely needless. I leave tons of comments in my code. If you look at the code i write it's essentially 50% comments. Why? Because i like to be nice to me and to any potential person who looks at my code because i write code once and then immediately forget what it does and why it's there. Sure i could try to understand its purpose but you know what's easier for a human to read than source code? Human language. It's far easier for me to read a comment than it is to decipher a chunk of code with 0 comments explaining what it does. And that's how i'd like to think others experience it as well. And why should i not make my own life and the life of others easier by adding in comments? It doesn't hurt anyone and is a nice guidance for those who don't know as much as i do about programming.

@knight_kazul you aren't a good programmer if your implementation needs a second companion description of what the code does - meanwhile all that data you still haven't documented while insisting you need to document the code - that's your problem you've been trold can't help you further

@styleisaweapon My code doesn't NEED comments. i provide it because i want to. Because i can benefit from it later on in development when i have long forgotten about that code segment, what it does and why it does things the way it does.

Not sure what you mean by the data that still isn't documented...

The 'c' in _mm_cmpestrc is because it returns the carry flag. What the carry flag actually means depends on the control byte.
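To make the carry-flag point concrete, here is a minimal sketch of a short-string equality check, assuming an x86-64 CPU with SSE4.2 and GCC or Clang (for the `target` attribute). With the control byte `_SIDD_UBYTE_OPS | _SIDD_CMP_EQUAL_EACH | _SIDD_NEGATIVE_POLARITY`, the carry flag ends up set when any byte position differs, so `_mm_cmpestrc` returns nonzero on a mismatch. The name `keywords_differ` and the requirement that both buffers be padded to 16 readable bytes are assumptions of this sketch, not details from the video.

```cpp
#include <nmmintrin.h>  // SSE4.2 string-comparison intrinsics

// Assumes x86-64 with SSE4.2; the target attribute (GCC/Clang) enables
// SSE4.2 code generation for this one function.
__attribute__((target("sse4.2")))
bool keywords_differ(const char* a, int a_len, const char* b, int b_len) {
    // Both buffers must be readable for 16 bytes (e.g. zero-padded storage);
    // the instruction treats lengths above 16 as 16.
    __m128i va = _mm_loadu_si128(reinterpret_cast<const __m128i*>(a));
    __m128i vb = _mm_loadu_si128(reinterpret_cast<const __m128i*>(b));
    // EQUAL_EACH compares byte i of a with byte i of b. Positions past both
    // lengths count as equal; positions past only one length count as unequal.
    // NEGATIVE_POLARITY inverts the result, so a set bit means "mismatch",
    // and the carry flag (the 'c' in cmpestrc) is set if any bit is set.
    return _mm_cmpestrc(va, a_len, vb, b_len,
                        _SIDD_UBYTE_OPS | _SIDD_CMP_EQUAL_EACH |
                            _SIDD_NEGATIVE_POLARITY) != 0;
}
```

Because positions past only one string's length count as mismatches, a length difference also sets the carry flag, so no separate length check is needed for keys of up to 16 bytes.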

fun fact, the "easiest hash function being the length" is actually why PHP has so many weirdly long function names. In early versions that's actually how the hashmap for globals worked, and the developers thought it was easier (at least early on) to just come up with lots of different-length names than to replace the underlying structure (which they did later anyway, but at that point they didn't want to rename everything)

I totally forgot about that! I would have mentioned it in the video. 😀

Wow. That's... horrible.

@styleisaweapon i mean, is there really a better option though? a _general_ purpose hashmap that is meant to be "usually fast" on 64bit systems can't really be any faster than "single 64bit integer compare" can it? *without* any application specific assumptions, that is. and also, yeah, as long as its source was 64bit there can't be any collisions (not sure what you mean by "see how hash has multiple meanings", as in "do you mean the hash function or any specific hash", but it doesn't matter either way, for either interpretation of 'meanings'. either "the identity function" which is as well-defined as you can get; or "maps things only onto itself", which, again, can't possibly collide).

i mean yeah sure with e.g.

@nonchip spoken like someone that doesn't know what properties algorithms need from their hashing function, just like those terribly wrong math nerds

that's incredible lmao
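A minimal sketch of the "hash by length" idea from the PHP anecdote, assuming a small fixed set of names: the bucket index is simply the key's length, and keys of equal length share a bucket that is scanned linearly. The class name `LengthBucketTable` and the bucket count are assumptions for illustration; this is not PHP's actual implementation.

```cpp
#include <array>
#include <cstddef>
#include <string_view>
#include <vector>

// Hypothetical table whose "hash function" is the key length.
// Keys are stored as string_views, so the caller must keep them alive.
class LengthBucketTable {
public:
    static constexpr std::size_t kBuckets = 32;  // assumed maximum name length

    void insert(std::string_view key, int value) {
        buckets_[key.size() % kBuckets].push_back({key, value});
    }

    // Returns a pointer to the value, or nullptr if the key is absent.
    const int* find(std::string_view key) const {
        for (const Entry& entry : buckets_[key.size() % kBuckets]) {
            if (entry.key == key) return &entry.value;
        }
        return nullptr;
    }

private:
    struct Entry {
        std::string_view key;
        int value;
    };
    std::array<std::vector<Entry>, kBuckets> buckets_;
};
```

If every name has a distinct length, each bucket holds one entry and a lookup is a single comparison, which is the incentive the comment describes for picking names of different lengths.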

You wouldn't really feel this is a 33-minute video. The brief discussion in earlier sections made it easy to digest what you talked about in later sections. It's hard not to imagine you were rubber-ducking while making the video, because the way you explain is so relatable.

I've spent years teaching people on my Twitch stream (twitch.tv/strager), so I've had practice. 🙂

@strager_ wow, YouTube thinks parentheses are part of a regular Twitch URL

clicked for the thumbnail, stayed for hash tables

24:38 - I nearly lost it when you showed off those numbers. It's incredible to see what someone with in-depth knowledge can achieve. Appreciate the demonstration and advice!

I was also surprised how much cmov improved performance, especially after seeing Linus Torvalds' 2007 email about cmov: yarchive.net/comp/linux/cmov.html

@strager_ I think something that has changed since Linus' comments is that A) the throughput difference between CMOV and MOV has been reduced as pipelining has improved.

B) The penalty of a full branch prediction miss is greater because correct prediction is faster relative to incorrect prediction compared to 2007, and pipelining everything with cmov may still be slower than 100% perfect prediction but slower than a small number of mispredictions.

These are guesses, but yeah, as I saw you comment in another thread as well, the environment can change, and microarchitectures have changed a lot since 2007.

@casperes0912 On the most modern cpus there will never be a branch misprediction penalty in forward conditional code like that - modern pipelines will speculate both sides of the condition - the penalty you get is instead in always using unnecessary resources which may have otherwise done something else that was useful - the best version was the lea instruction, and it's objectively strictly better in every way than both cmov and the forward conditional branching - the lea instruction uses the very highly optimized addressing mode logic built into the cpu - there exists no instruction on x86/AMD64 with any objectively better performance metric or available execution units - whenever lea can do it, you probably should have used lea.

@casperes0912 *but _faster_ than a small number of mispredictions
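To illustrate the branch-versus-cmov trade-off this thread discusses, here is a hedged sketch of the same search written two ways. Binary search is used only as a familiar example (an assumption, not the video's code): the first version has a data-dependent `if` that the compiler may turn into a conditional branch, while the second runs a loop count that depends only on `n` and expresses the data-dependent choice as a select, which compilers commonly lower to `cmov`. Whether either becomes a branch, `cmov`, or something else depends on the compiler, flags such as `-march`, and the CPU, so check the assembly and benchmark. Both function names are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>

// Branchy lower bound: a hard-to-predict `if` can cost a pipeline flush
// each time it is mispredicted.
std::size_t lower_bound_branchy(const std::uint32_t* a, std::size_t n,
                                std::uint32_t key) {
    std::size_t lo = 0, hi = n;
    while (lo < hi) {
        std::size_t mid = lo + (hi - lo) / 2;
        if (a[mid] < key) lo = mid + 1; else hi = mid;
    }
    return lo;
}

// Branch-free body: the only data-dependent decision is a select between
// two pointers, a pattern compilers often emit as cmov.
std::size_t lower_bound_branchless(const std::uint32_t* a, std::size_t n,
                                   std::uint32_t key) {
    if (n == 0) return 0;
    const std::uint32_t* base = a;
    while (n > 1) {
        std::size_t half = n / 2;
        base = (base[half] < key) ? base + half : base;
        n -= half;
    }
    return static_cast<std::size_t>(base - a) + (*base < key);
}
```

Both return the index of the first element not less than `key`; only the shape of the control flow differs.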

This guy clearly has a knack for teaching. I have not seen an explanation this clear and engaging in a long time.

It’s a privilege to watch an expert talk on his field

"Your data is never random"

I just want to say Bravo !

What a pleasure to watch a Data structure video from a real-world perspective!

Subscribe.

This is not real world. This is "I have way too much spare time and I don't dare to go out" world.

This is a level of optimization that's never needed in the real world.

@jankrynicky How do you know that some program doesn't use hash tables a bunch to the point where this type of optimization _would_ help? You don't. You might not need that kind of optimization, but someone else might.

Smaller memory footprint *does* matter for performance, because caches. In this example, the table is just too small already to see that effect.

It's not just the cache hits and cache misses ratio, nor just the branch predictors at length, but also data alignment and page boundaries can come into play. Other things that can become a factor can come from the OS of the system itself such as with their process schedulers, v-tables, and more... but the latter are for the most part out of the hands of the basic user because this would then involve modifying the actual underlying kernels of the system and how they manage system memory access via read/write ops.

Small enough to fit on a CPU L-cache ?

This video was truly amazing. Wow. I really appreciate all of the work you've put into this. I've always known that I could write my own assembly, but I've figured that the compiler would always optimize better than I could. Seeing how you were able to profile and optimize your code made me realize that I would have eventually come to some of the same conclusions - so I should probably at least give it a shot myself.

Thank you for making this video. This should be required viewing for computer science students at some point in their education.

PS. I was slightly annoyed that you didn't dig into why the binary search was slower at the very beginning of the video until you kept going deeper and deeper into the different inner workings of all of these different hash algorithms and optimizations, making me realize that this is meant to be a densely-packed hash table video, not a binary search video. Thanks again.

> this is meant to be a densely-packed hash table video, not a binary search video.

Actually, it's a video on general performance tips. The perfect hash table is just a case study!

> I was slightly annoyed that you didn't dig into why the binary search was slower at the very beginning of the video

You might be interested in this talk: czcams.com/video/1RIPMQQRBWk/video.html

This kinda reminds me how I optimized a hashtable with >2M entries into 2 arrays and increased its performance 20x. It was a performance critical program which was already using 32 GB of RAM (and I reduced to 8GB!).

The other thing that I think makes calling memcmp slow is that the branch predictor gets thrown off inside memcmp because memcmp is called from other places in the program with other data; with the single character check the branch predictor has the luxury of only seeing that branch used for the keyword lookup and nothing else.

I haven't looked in a while, but I thought the only branch in memcmp was a page boundary check. You might be right though.
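A minimal sketch of the single-character pre-check discussed here, assuming the hash has already selected one candidate keyword and both lengths are known. Comparing the first byte inline rejects most non-matching identifiers without calling `memcmp`; how much that helps depends on how often first characters differ in real input. `matches_keyword` is a hypothetical name.

```cpp
#include <cstring>
#include <string_view>

// Compares an identifier against the one keyword its hash selected.
bool matches_keyword(std::string_view identifier, std::string_view keyword) {
    if (identifier.size() != keyword.size()) return false;
    if (identifier.empty()) return true;
    // Cheap inline check: most non-keywords differ in the first byte.
    if (identifier[0] != keyword[0]) return false;
    // Only fall back to memcmp when the first bytes agree.
    return std::memcmp(identifier.data() + 1, keyword.data() + 1,
                       identifier.size() - 1) == 0;
}
```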

Glorious thumbnail

Amazing video, hash tables are such an underrated concept, everyone uses them but few ever mention them or how they work, let alone do that in depth, you're awesome!

Hash tables are dope. 🚬

There's a talk by Raymond Hettinger on dictionaries, with an in-depth look at how they've changed and improved since the 60s, and eventually returned to a very similar layout to that of the 60s. I think watching that is why YouTube suggested this video to me!

wtf? 😮 Everyone studies how they work from the beginning, whether self-educated or university-educated. It's basic knowledge in any book.

That was insane. I've never seen someone walk through such a deep optimization. Thanks so much for this video!

The fact that nobody is talking about the thumbnail is a crime.

This is some high-quality content. Thanks for taking the time to explain it so clearly

Excellent video! I have absolutely no use for this in my day job but I still had a lot of fun learning about it regardless. Can’t wait to see more videos from you in the future!

This video has been popping up in my suggestions for the past two months and I'm so glad I've finally watched it. I can see how these concepts still apply, even if you don't end up going down the rabbit hole and writing assembly instructions. Thanks a lot!

First video of yours I've seen and it's an instant subscribe. Highly technical information presented in a very clear and understandable way, with no filler, and plenty of food for thought. Thank you.

Phenomenal video! And thanks for talking about your missteps, that’s rarely talked about in videos like these. You kept viewers expectations of the optimization process much more realistic. Keep it up!

This was excellent! I spent a solid month on hashing at one point -- the tips in here would have saved me days of time. Thank you for the knowledge.

This is amazing! I never expected you'd be able to get more than 10x faster than builtin hashes. I didn't expect how wildly the performance would vary from modifying your algorithm, assumptions about the input data and assembly instruction optimisations. Seeing the numbers go up and up with your thorough explanations of each modification you made tickled my brain. I'll definitely be coming back here for more!

It's not an all-purpose algorithm. There's no need to compare it to the built-in ones.

Most people would use the language-provided hash table, so it's completely fair to compare my solution with those hash tables.

@strager_ No. That doesn't mean it is a fair comparison at all 🤦🏼‍♂️.

It's like comparing a Ferrari to a Fiat to get from point A to B: the Fiat does the job at a fraction of the cost, yes, but that comparison misses the entire point of the existence of the Ferrari.

Just because "most" people would use the builtin hash table for convenience doesn't mean it is comparable. In fact, if you think about it like that, your point is totally self-defeating: most people use the builtin because it is easier and more convenient. One solution seeks convenience and generality, the other pointless optimization for a single case. Not comparable.

@marcossidoruk8033 You can compare the two in terms of feature set, ergonomics, performance, and security.

I don't see the problem with comparing them. It solves the same problem as other tools, just in a way that's fastest for this use case. It's not general purpose like other tools, sure, but they are still different solutions to the same problem of storing data and fetching it from a large group with low overhead. The structure of one is just more coupled to the data. It's an improvement in speed over what may have otherwise been used. Sure, it's not a drop-in replacement for every project, but it doesn't need to be. I'm sure it has its tradeoffs too, but what was being measured and compared here was the number of lookups in a given time. Sure, it may be an unnecessary optimisation and it won't be useful in every use case, but that does not make the performance comparison in this use case invalid. The topic of this video was optimising the hashset data structure for use in his compiler. In terms of speed in his specific use case under conditions he expects, his solution was faster. Does that mean everyone should use it? No. Does it mean it's perfect for every situation? Does it mean it's always faster for every dataset? No. But that's not the topic of the video. It was not a pros and cons list for using his hashset in any program. It's about how fast he can get with the data he needs to process en masse.

This is simply amazing! From brainstorming to presentation and everything in between. Thanks for sharing your hashing journey.

Now this is quality content! Thanks a lot for sharing your knowledge with us!

This format is excellent. I learned a lot thank you.

Wow, great talk! You're a clever programmer and a good teacher.

We shouldn't, as a habit, go about trying to optimize everything to the last bit, but you cover exactly the way one should go about it properly: profile the performance! That goes for our larger programs as a whole, too. That is, don't go optimizing your hash function or writing your own hash table until you've determined that's where your software needs optimizing. Then when you do, iterate on that process: profile it and work inward. And of course, always check to see if there's a faster, well-tested option already available before rolling your own!

> That is, don't go optimizing your hash function or writing your own hash table until you've determined that's where your software needs optimizing.

Profiling first can be misleading. The keyword recognition code in my parser didn't show up in the profiler, yet optimizing it improved performance by 5%. (Maybe this is just a bug in the profiler?)

> And of course, always check to see if there's a faster, well-tested option already available before rolling your own!

I suggest understanding the problem well (e.g. by writing your own implementation) before picking an off-the-shelf solution. How do you know that their solution is good until you've thought about it carefully yourself?

@strager_ By testing, which you'd have to do with your own solution anyway. Though in many cases, lots of data on performance is already available unless you have a very special use case.

In production code, there are so many good reasons to avoid writing complex libraries until you can prove they don't meet your needs that not doing so is a named anti-pattern: Not Invented Here. Much has been written about it better than I could do here.

You're not required to agree with me, of course. I'd want a guy like you working on core libraries, anyway, and in those cases, your attitude is usually the right one. But I wouldn't want the guys working on the rest of the system trying to outsmart/out-optimize the core library team, at least not unless they've found the bottleneck and submit a pull request after!

> in many cases, lots of data on performance is already available unless you have a very special use case.

I disagree. The assumptions I can make about my data are not the same as the assumptions you can make about your data, even if we both could use a common solution (like a string->number hash table).

> I wouldn't want the guys working on the rest of the system trying to outsmart/out-optimize the core library team

Why not? Why can't anyone contribute to the core library? A workplace where only privileged programmers can touch the core library sounds like a toxic workplace.

@strager_ You left out the second half of that sentence where I said, "unless they've identified the bottleneck and submit a pull request after". The point being, if they're off rolling their own brand-new solution for everything because they think they "can do better" (a common attitude, and the one that leads to "Not Invented Here" syndrome), that's a problem.

It seems like you're just looking for something to argue about. My original comment was positive and supportive, and you still looked for ways to pick it apart.

Great video, fabulous experiment and results. I loved your diving into the assembly level.

Instant subscription. This type of content is why I still love YouTube. You packed years or even decades of experience into a short and densely packed lesson that I'll remember probably for the rest of my life. Thanks so much!

THIS IS SO HELPFUL. Thanks. This really helps us self-taught programmers like crazy. It's always good to learn about fundamentals like this, that are useful for many languages. Kind of opens your mind to what is possible.

Customizing / designing hash-table interfaces based on contextual knowledge about the underlying data is a great topic. Very true that such an intuitive technology (mapping some key to some element) is often overlooked and not well understood.

Awesome video!

This is one of the best videos about performance and low level programming I have ever seen. Usually trying to understand these concepts takes a lot of effort and I sometimes feel tired after a while. You managed to make this video easy to follow, interesting and entertaining. Great stuff.

The problem with doing all this is that possibly with the next version of the compiler, different operating system, or a new model of CPU, any of these optimizations might backfire (actually cause performance loss).

Yup. Maybe Zen 4's cmpestrc isn't so slow!

But I doubt the new hardware and compilers would make my solution slower than C++'s std::unordered_map.

If a compiler makes my code slower on the same hardware, that's probably a fixable compiler bug.

You bring up a good point though. If I *really* cared about the performance, I should keep my benchmark suite around for the future to check my work when the environment changes.

Just having the compiler put the basic blocks in a different order, or having the linker lay out the sections in a different order, can change the performance. Different layouts of the stack and heap can do the same.

Emery Berger has a great talk about it + he co-wrote a paper “STABILIZER: Statistically Sound Performance Evaluation” about it.

The compiler might slow it down a bit, but unless there's a compiler bug you're never going to lose the order of magnitude in performance that you gained. And more importantly, you should build for your target platform, not some theoretical future platform.

@criticalhits7744 there is no target platform; people will be compiling and running the code on hundreds of different processor models, maybe even with different compilers (GCC, Clang, ICC). Next, I am not arguing all optimizations would go bad, just some. For example, in this video he made 10 optimizations that were all beneficial. In some future scenario, 7 might still be beneficial, 1 might become neutral, and 2 harmful (worsening performance). You have to continuously keep testing each in isolation.

@ZelenoJabko Sure, that's kind of what I mean though: you'll always want to benchmark at least on the compiler *you* are shipping with, assuming you're shipping software and not an SDK/API. If you're shipping software, you'll always want to know what your range of platforms/environments is now, and not necessarily consider what *might* change for the platforms/environments in the future.
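To make "keep the benchmark suite around" concrete, here is a minimal timing-harness sketch, assuming you have a lookup function and a representative input set (ideally real data, per tip 8). It repeats the run and keeps the fastest time to reduce noise, and it accumulates results into a checksum so the compiler cannot discard the work. `time_best_of` is a hypothetical helper; it does not address layout effects like those Stabilizer targets, and a real benchmarking library would do more.

```cpp
#include <chrono>
#include <string_view>
#include <vector>

// Runs `lookup` over every input `repeats` times (repeats >= 1) and returns
// the fastest run in nanoseconds. Results are added to `checksum` so the
// optimizer cannot remove the lookups.
template <class Lookup>
long long time_best_of(Lookup lookup,
                       const std::vector<std::string_view>& inputs,
                       int repeats, long long& checksum) {
    using Clock = std::chrono::steady_clock;
    long long best = -1;
    for (int r = 0; r < repeats; ++r) {
        long long sum = 0;
        auto start = Clock::now();
        for (std::string_view s : inputs) sum += lookup(s);
        long long elapsed =
            std::chrono::duration_cast<std::chrono::nanoseconds>(
                Clock::now() - start).count();
        checksum += sum;
        if (best < 0 || elapsed < best) best = elapsed;
    }
    return best;
}
```

Rerunning the same harness after a compiler, OS, or CPU change is the cheap way to catch an optimization that has started to backfire.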

This was a great video, I loved the content and it was really concise while still keeping enough information!

I am so happy I discovered this channel. This is such an awesome video, I really learned a lot, Thank you!

THIS VIDEO IS INCREDIBLE! Thank you so much for making it! Learned a lot of new things. Good luck in your new work

This was just amazing, please continue the great work!

loved the intuitive explanations!

and very solid performance advice. people often forget that you can adjust your input data to, for example, get rid of bounds checks, vectorize, or unroll.

> people often forget that you can adjust your input data

Yup. This is one problem with abstractions: they hide details! Sometimes it's nice to peel back the abstractions to see what you're *really* dealing with.
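One hedged example of adjusting input data to remove a bounds check: if the lexer guarantees the source buffer ends with a NUL byte (an assumption of this sketch), an identifier scanner can stop on that byte instead of comparing an index against the buffer size on every iteration. `identifier_length` and `is_identifier_char` are hypothetical helpers, and the ASCII-only character set is a simplification.

```cpp
#include <cstddef>

// True for bytes that can continue an ASCII identifier. It returns false
// for '\0', which is what lets the terminator act as a sentinel.
inline bool is_identifier_char(char c) {
    return (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') ||
           (c >= '0' && c <= '9') || c == '_' || c == '$';
}

// Precondition (assumed): the buffer is terminated by '\0', so the loop
// needs no separate `i < size` check.
std::size_t identifier_length(const char* p) {
    std::size_t i = 0;
    while (is_identifier_char(p[i])) ++i;
    return i;
}
```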

Wow! This was the perfect summary of all your work on stream: easy to follow, really clear, and it made me question a few things about my code. I appreciate your hard work, Strager; let's celebrate with a cold Mexican Coca-Cola. Cheers!

incredible video! i have never really cared about hashes, but i was completely invested the whole time! cant wait for more videos like this one.

Thanks for the profiler tips and a very enjoyable exploration of the optimization game. I want to go work on my hash function now! I love that hash seed search trick!
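A minimal sketch of a hash seed search, assuming a small fixed keyword set and a table size chosen up front: try seeds until the seeded hash sends every keyword to a distinct slot, which gives a collision-free (perfect) table for that set. The FNV-1a-style mixing and the names `seeded_hash` and `find_perfect_seed` are assumptions for illustration, not necessarily the hash used in the video.

```cpp
#include <algorithm>
#include <cstdint>
#include <optional>
#include <string_view>
#include <vector>

// FNV-1a with the seed folded into the starting state (an assumed choice).
std::uint32_t seeded_hash(std::string_view s, std::uint32_t seed) {
    std::uint32_t h = 2166136261u ^ seed;
    for (unsigned char c : s) {
        h ^= c;
        h *= 16777619u;
    }
    return h;
}

// Returns the first seed below `max_seed` that gives every key its own slot.
// Precondition: table_size > 0.
std::optional<std::uint32_t> find_perfect_seed(
        const std::vector<std::string_view>& keys,
        std::uint32_t table_size, std::uint32_t max_seed) {
    std::vector<bool> used(table_size);
    for (std::uint32_t seed = 0; seed < max_seed; ++seed) {
        std::fill(used.begin(), used.end(), false);
        bool ok = true;
        for (std::string_view k : keys) {
            std::uint32_t slot = seeded_hash(k, seed) % table_size;
            if (used[slot]) { ok = false; break; }
            used[slot] = true;
        }
        if (ok) return seed;
    }
    return std::nullopt;
}
```

The search runs once, at build or code-generation time; at lookup time only `seeded_hash` with the found seed and a single key comparison remain.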

31:00 on the optimization of checking the first character. Calling a function has some overhead, just to push registers, jump to the address, clear some stack space, pop the registers again when it's done, and so forth. Also memcmp() in particular does what it can to do wider compares, which is almost always a big win, but since the data isn't necessarily aligned for that, it does some byte-for-byte compares at the beginning and the end of the memory region - the wide compares tend to be in the middle. The one-byte comparison for the first character is a single instruction, so when the first character differs, all of that overhead can be avoided. It's a win when the first character differs most of the time, less so if they're frequently the same.

Oh so I was right! Nice!~

However, I looked it up and found that in some compilers (gcc) memcmp is recognized as a compiler “builtin” that is inlined if it can be, so I’m still wondering if it could be something else

slight correction: on x86_64 you shouldn't expect any registers to be pushed or popped, since the arguments will be stored directly in the registers
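As a hedged illustration of the "wide compare" point, here is a sketch that compares keys of at most 8 bytes with a single 64-bit integer comparison instead of a `memcmp` call. It assumes short keys are zero-padded into 8-byte values, which is an assumption about the data layout that a general-purpose `memcmp` cannot make. `pack8` and `equal_short` are hypothetical names.

```cpp
#include <cstdint>
#include <cstring>
#include <string_view>

// Packs a key of at most 8 bytes into a zero-padded 64-bit integer.
// Precondition: s.size() <= 8.
std::uint64_t pack8(std::string_view s) {
    unsigned char buf[8] = {};
    if (!s.empty()) std::memcpy(buf, s.data(), s.size());
    std::uint64_t v;
    std::memcpy(&v, buf, sizeof v);  // fixed-size copy, typically a single load
    return v;
}

// Equality for short keys using one 64-bit comparison. The length check is
// needed because zero padding makes "ab" and "ab\0" pack identically.
bool equal_short(std::string_view a, std::string_view b) {
    if (a.size() != b.size()) return false;
    if (a.size() > 8) return a == b;  // long keys: ordinary comparison
    return pack8(a) == pack8(b);
}
```

In a keyword table, the keyword side could be packed once ahead of time, so each lookup only packs the identifier being tested.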

pleasantly surprised to see Dave Churchill come up. feels like a crossover episode

Hats off for the engaging presentation and the well paced progression in the video.

Fantastic and addictive video with plenty of ideas. The burp was a nice touch too. You've gained a new subscriber !

I truly love your presentation. It's very efficient, just like your code lmao

Some helpful visuals go a long way in illustrating concepts and keeping attention. Your video not only taught me about hash tables, but also about presentation. GJ!

Thanks!

Awesome content, really liked it but way more than I can process in one go.

This is the first YouTube channel I have subscribed to after just one video.

really entertaining to watch, you seem so passionate about optimization

great video

This was an amazing video. Enjoyed every second of it. Thank you for sharing your journey!

Fantastic video! Even though I don't know much about low level stuff you made it very easy to understand and follow and got me even more motivated to study more

> got me even more motivated to study more

That's what I like to hear!

I've been working on and off on a simulation where I could use every scrap of performance I can find. I'm not even close to the optimizing stage yet, but I find this video really inspiring. It makes me think, "Hey, maybe I can make this 50x faster", and gets me excited to think of ways to speed it up.

It's fun!

Enjoyed the vid, looking forward to your future content 👍

Excellent video. Super easy to follow. You're great at explaining complex topics. Subscribed!

I think perf tip 6 is why the Karatsuba method is more performant than the long multiplication approach taught in school

This was awesome! This video format was really informative, it's like walking through the socratic method for performance.

> it's like walking through the socratic method for performance.

Thank you!

Amazing, thank you for presenting this!

Wow. I learned so much from this vid that I didn't know I NEEDED to know about hash maps. I went from hashes and hash maps being more advanced topics slightly outside my current knowledge in Comp Science to feeling like I know the subject inside and out.

I've even learned a lot about assembly and compilers while watching this random vid that came across my timeline!

You, sir, are an excellent teacher. You have gained yourself a subscriber, Sensei! 🙇‍♂️

As someone who has had the joy of micro-optimizing workloads before, this was a fun journey to take with you!

Loved this. Reminded me of the days when I was a C programmer back in the 80s/90s, rather than just a PHP scripter like I am today 🤣

i actually started with php, then went to java/c to learn about typecasting lol

nowadays i even do more java/ecmascript than php, but i still have my php CLI toolkit for all kinds of shit,

i refuse to use python lol

Writing PHP in 2023? That's brutal

@JacobAsmuth-jw8uc Still a good language for fast prototyping and general-purpose web development! Lots of great changes have been brought into the language in the past few years. The usefulness of every tool depends on the task at hand, but maybe you had a really bad experience with PHP. Can't fault you for that :)

@JacobAsmuth-jw8uc Still talking down on PHP in 2023? That's so 2010 😆

This is a gold mine of system optimization ideas. Much appreciated!

Subscribed. Rare combination of a pleasant voice, good recording quality, lively delivery, and concise, fluff-free information. MMM.

Great video, well explained and interesting.

I started this video thinking I knew a lot about hashing; I mean, I knew about collisions etc. But I didn't just learn a lot more about creating actual hash functions fitted to my data; the whole branching part was insightful too. Really, really nice to watch, thanks.

I'm glad you learned something!

Really grateful for the time you put into this! Very cool.

Incredibly educational video, hope you keep bringing such content

I agree with everyone else mentioning that this didn’t feel like a half an hour video, very (and surprisingly) entertaining and insightful!

It especially doesn't feel like half an hour when you watch it at 2x speed

Incredible video, didn’t even realize it was a 34 minute video until it was over. While explaining the hash table implementation, you also implicitly made a compelling argument for taking assembly seriously. I learned a lot. Thank you!

This was very informative and helped me see the benefits of creating a unique hash function tailored to one's specific dataset. Well, it might not always be needed, but if performance is ever required, sometimes it might actually pan out better than using the default implementation!

Very well done video and I like the way you explain your thinking process.

You should do comparisons against Rust's FxHashMap. The reason SipHash is used as a default is because we assume it might be in a position to suffer a HashDoS attack, but everyone using Rust who wants to actually make their HashMap go fast, including the actual Rust compiler, uses FxHash or another simpler hash function as their hasher.

I did make a custom hash function. I plugged it into C++'s std::unordered_map to show an improvement (8:02). Switching the hash function is something both Rust's and C++'s hash tables support, so I just picked one to demonstrate the idea.

The point of this video wasn't to make Rust's HashMap fast. It was to show how to think about improving performance.
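For anyone who wants to try this swap in C++, here is a minimal sketch of a custom hasher plugged into `std::unordered_map` via its third template parameter. The hasher is modeled on the step used by rustc-hash's original FxHasher, `h = (rotl(h, 5) ^ word) * 0x517cc1b727220a95`, but this version feeds one byte at a time, whereas the real FxHasher consumes whole machine words (and newer rustc-hash releases changed the algorithm). `FxStyleHash` and `KeywordMap` are hypothetical names. Like FxHash, it is not resistant to HashDoS.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <string_view>
#include <unordered_map>

// Byte-at-a-time hasher using the FxHash-style rotate/xor/multiply step.
struct FxStyleHash {
  std::size_t operator()(std::string_view s) const noexcept {
    std::uint64_t h = 0;
    for (unsigned char c : s) {
      h = ((h << 5) | (h >> 59)) ^ c;  // rotate left by 5, then mix in the byte
      h *= 0x517cc1b727220a95ull;      // 64-bit FxHash multiplier
    }
    return static_cast<std::size_t>(h);
  }
};

// The hasher is the third template argument; std::string keys convert
// implicitly to std::string_view when the map calls FxStyleHash.
using KeywordMap = std::unordered_map<std::string, int, FxStyleHash>;
```

Usage: `KeywordMap m{{"if", 1}, {"for", 2}};` then `m.at("for")` yields 2. Only the hasher changes; the table's bucket and collision handling stay the standard library's.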

This is very correct. Would be interesting to see C++ unordered_map compared to Rust with a better hash function.

Slow clap, thunderous applause, awe, top. I loved the burp.

Awesome comparison, explanation, and tips! Well done.

Fantastic delivery, great content, and cadence was spot on. Well done.

Love this stuff. Mind candy with extra goodness. For the limited domain of a fixed set of keywords, I might look at every pair of letter positions across the set, select the two with maximum entropy, and use those two as a first guess. If you find a pair that gives no collisions for the set, speed ensues.
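A minimal sketch of the "find a pair of positions with no collisions" step. Instead of ranking pairs by entropy as the comment suggests, it simply returns the first pair of positions whose byte pair is unique across all keys. Positions past the end of a key read as 0. `byte_at` and `find_distinguishing_pair` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <optional>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Byte at position i, or 0 past the end, so shorter keys still yield a value.
unsigned char byte_at(const std::string& s, std::size_t i) {
  return i < s.size() ? static_cast<unsigned char>(s[i]) : 0;
}

// Tries every pair of positions (i < j) and returns the first pair whose
// (byte_at(k, i), byte_at(k, j)) combination is distinct for every key.
// Returns std::nullopt if none exists (e.g. when no key is longer than 1 byte).
std::optional<std::pair<std::size_t, std::size_t>>
find_distinguishing_pair(const std::vector<std::string>& keys) {
  std::size_t max_len = 0;
  for (const auto& k : keys) max_len = std::max(max_len, k.size());
  for (std::size_t i = 0; i < max_len; ++i) {
    for (std::size_t j = i + 1; j < max_len; ++j) {
      std::set<std::pair<unsigned char, unsigned char>> seen;
      bool unique = true;
      for (const auto& k : keys) {
        if (!seen.insert({byte_at(k, i), byte_at(k, j)}).second) {
          unique = false;  // two keys share this pair of bytes
          break;
        }
      }
      if (unique) return std::make_pair(i, j);
    }
  }
  return std::nullopt;
}
```

If a pair is found, the two bytes can index a 256×256 table (or be packed into one 16-bit index) with no collisions; the lookup still needs a final string comparison to reject non-keywords.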

Nice vid 👍. I remember a talk by Chandler Carruth at CppCon where he brought a Skylake 2U server onto the stage and did a bunch of perf shenanigans trying to optimise the code at hand.

He works on the LLVM team, and he spent a lot of time trying to make Clang produce that cmov. In the end, he made the compiler emit branchless code, and it performed worse!

Turns out the relative performance of cmov vs. branches is highly dependent on CPU architecture (in his case, a Skylake machine). Compilers use a bunch of heuristics, and just setting -march=native was enough to make Clang emit a branch on the same codebase (which was faster in that case on that microarchitecture).

All that got me wondering which instructions are better to generate for newer vs. older CPUs. This can be pinned down if you are running on known hardware (servers, consoles, microcontrollers), but something like a compiler runs on everything. It seems worth gathering stats to determine the most common -march among users.
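To see what a given compiler and `-march` actually emit, one might write the final "hit or miss" select both ways and compare the assembly (for example on Compiler Explorer). This is a minimal sketch with hypothetical function names; neither form guarantees a particular instruction, and which one runs faster depends on the CPU and on how predictable the hit rate is.

```cpp
#include <cassert>
#include <cstdint>

// Branchy form: the compiler may emit a conditional jump or a cmov/csel,
// depending on its heuristics and flags such as -march.
int select_branchy(bool matched, int id) {
  if (matched) return id;
  return -1;
}

// Mask form: turns the condition into an all-ones or all-zeros mask, so the
// source itself has no branch. The compiler is still free to choose.
int select_masked(bool matched, int id) {
  std::uint32_t mask = 0u - static_cast<std::uint32_t>(matched);  // 0xFFFFFFFF or 0
  std::uint32_t not_found = 0xFFFFFFFFu;  // two's-complement bit pattern of -1
  std::uint32_t bits = (static_cast<std::uint32_t>(id) & mask) | (not_found & ~mask);
  return static_cast<int>(bits);
}
```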

You make a great point about cmov's performance being CPU-specific!

I did try -march=native on both of my machines (x86_64 (Zen 3, 5950X) and AArch64 (Apple M1)). Clang generated cmov (csel) consistently for AArch64, but neither GCC nor Clang generated cmov consistently for x86_64. I did not test on older or newer architectures.

All of the benchmarking was done on a 5950X on Linux with GCC (I think version 11).

One thing I did not research (but should have) is using profile-guided optimizations to see if that would help compilers generate cmov. The compilers would be able to see the lookup hit rate. I suspect they would still try to branch on x86_64 though...

There's also the case of finding the sweet spot. E.g. the shipped exe runs on Haswell or newer, and if you really want to run on old hardware you can get an alternative compiled binary.

That is, at some point the new instructions and optimization assumptions make a critical difference, and beyond that it's just incremental improvements.

But compiling for the absolute worst case of old hardware will perform rather poorly on modern hardware.

This video popped up in my feed. What an excellent find, great and concise content.

I like this video so much, both for content and for the presentation!

Sent to my friends to watch :D

Fun project, with some great insights, but I feel like there is a bit of "don't let perfect be the enemy of good enough" going on here. As a matter of pure learning and fun, it's great... but for most projects, people should just use gperf until they've truly squeezed every ounce of optimization out of other parts of the code. Especially for newer/younger engineers, it's so easy to get caught down a rabbit hole like this and not realize you've spent precious time optimizing for 5% because you just had to replace gperf, instead of optimizing for 20 or 30% in parts of the code that are actually unique to what you're writing and where there is no "good enough" commodity solution in play. If you've truly squeezed all the juice out of the lemon elsewhere, then sure, go around replacing the "good enough" solutions with "perfect" ones.

> for most projects people should just use gperf until they've truly squeezed every ounce of optimization out of other parts of the code

I got a 4-5% speedup over gperf with a week or two of work. I'd say that was worth it. (Also I learned some things along the way.) I think keyword recognition is one of the bottlenecks that matter in my program.

Some of my techniques could be incorporated into gperf too.

> Especially for newer/younger engineers, it's so easy to get caught down a rabbit hole like this

Yes, this is a problem. However, engineers need to grow an understanding of algorithms and how their machine works. They need to practice their optimization muscles on easy, self-contained problems, otherwise they can't tackle harder problems.

The point of my video was not "drop down to assembly as soon as possible". The performance tips I listed are not specific to assembly, and most apply to far-from-the-machine languages like Ruby and Scala.

WHAT A THUMBNAIL WOW

Really enjoyed the deep-dive!

SUBSCRIBED !!!

This was interesting and informative and well presented. Nice work!

It's also a lot of fun to try profilers like Coz and tools like Stabilizer (although that one may have only one somewhat active fork left). Stabilizer can really help you avoid running into dead ends caused by random memory layout changes.

Can't believe this video has fewer than 1K views. It is so incredibly well made. Really enjoyed it!

now it has more

because most "programmers" nowadays are all about web development and npm installing stuff

@@jma42 what are you talking about, it's been 20 hrs and the video has 11 kviews.

@@peezieforestem5078 That's not my point, and besides, it's too little compared to most typical web dev content.

What I meant is that the topic isn't that interesting to most programmers nowadays cuz they don't do computer science shit.

@@jma42 I don't consider what I did 'computer science shit'. I consider my work closer to engineering. But I guess that's just semantics.

There is plenty of content like this video on YouTube. For example:

www.youtube.com/@CppCon has lots of deep-dive talks.

www.youtube.com/@DaveChurchill has good beginner-friendly talks which aren't just "install this library and you're done!".

www.youtube.com/@MollyRocket has a popular series called Handmade Hero which covers making a game from scratch line-by-line.

www.youtube.com/@fasterthanlime has educational content similar to mine.

You say it's "too little when compared to" something else. But I don't think I'm competing for the same audience as npm bootcamp tutorial videos, and I think YouTube's algorithm treats our content separately.

That was really interesting, thanks for sharing!

Thank you for making such an informative video.

I've recently taken an interest in making code work well and quickly.

I'm in the process of applying some of the advice from this video and from some of my uni courses to make my own code more performant.

Oh man, I am actually deep in the middle of high-performance hashing right now (building an internalizer that maps known strings to unique integers, very similar to what you're doing). My solution currently looks like this:

Rather than hash the entire string and index into a linear hash table, I constructed a decision tree. It's a lot like the game Guess Who: "Does your person have glasses? Does your person have long hair? Is this your person?" Each node in the tree represents a pivot decision such as "is string length < 5?" or "is char[3] greater than 'h'?". You iterate down the tree (if it's ideally balanced, a lookup takes O(log₂ N) steps), and at the end of the line the leaf points to some char data ("Is this your string?"), which you strcmp against, along with the unique ordinal for the string, which is your result. It works incredibly well for longer strings and skips the need for a hash function entirely.
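A minimal sketch of such a decision tree, packed into a flat array as described in the follow-up reply. Assumptions not in the comment: every node tests only "byte at position p < pivot" (positions past the end read as 0, which implicitly encodes length, so keys must not contain NUL bytes), and each pivot is chosen greedily to split the remaining keys as evenly as possible. `Node`, `DecisionTree`, and `byte_at` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <string_view>
#include <utility>
#include <vector>

// Byte at position i, or 0 past the end (keys must not contain NUL bytes).
unsigned char byte_at(std::string_view s, std::size_t i) {
  return i < s.size() ? static_cast<unsigned char>(s[i]) : 0;
}

// Internal node: go left if byte_at(key, pos) < pivot, else right.
// Leaf node (leaf >= 0): index of the single candidate key to verify.
struct Node {
  std::size_t pos = 0;
  unsigned char pivot = 0;
  int left = -1;
  int right = -1;
  int leaf = -1;
};

class DecisionTree {
 public:
  explicit DecisionTree(std::vector<std::string> keys) : keys_(std::move(keys)) {
    std::vector<int> all;
    for (int i = 0; i < static_cast<int>(keys_.size()); ++i) all.push_back(i);
    if (!all.empty()) build(all);
  }

  // Walks the flat node array, then confirms the candidate with one full
  // comparison. Returns the key's ordinal, or -1 if it is not in the set.
  int lookup(std::string_view s) const {
    if (nodes_.empty()) return -1;
    int n = 0;
    while (nodes_[n].leaf < 0) {
      const Node& node = nodes_[n];
      n = byte_at(s, node.pos) < node.pivot ? node.left : node.right;
    }
    int k = nodes_[n].leaf;
    return std::string_view(keys_[k]) == s ? k : -1;
  }

 private:
  // Appends a node for `ids` (and its subtree) and returns the node's index.
  int build(const std::vector<int>& ids) {
    int self = static_cast<int>(nodes_.size());
    nodes_.emplace_back();
    if (ids.size() == 1) {
      nodes_[self].leaf = ids[0];
      return self;
    }
    std::size_t max_len = 0;
    for (int id : ids) max_len = std::max(max_len, keys_[id].size());
    // Greedily pick the (pos, pivot) test that splits `ids` most evenly.
    std::size_t best_pos = 0, best_small = 0;
    unsigned char best_pivot = 0;
    for (std::size_t p = 0; p < max_len; ++p) {
      for (int cand : ids) {
        unsigned char pivot = byte_at(keys_[cand], p);
        std::size_t less = 0;
        for (int id : ids) less += byte_at(keys_[id], p) < pivot ? 1 : 0;
        std::size_t smaller = std::min(less, ids.size() - less);
        if (smaller > best_small) {
          best_small = smaller;
          best_pos = p;
          best_pivot = pivot;
        }
      }
    }
    assert(best_small > 0 && "keys must be distinct");
    std::vector<int> lo, hi;
    for (int id : ids)
      (byte_at(keys_[id], best_pos) < best_pivot ? lo : hi).push_back(id);
    int l = build(lo);
    int r = build(hi);
    nodes_[self].pos = best_pos;
    nodes_[self].pivot = best_pivot;
    nodes_[self].left = l;
    nodes_[self].right = r;
    return self;
  }

  std::vector<std::string> keys_;
  std::vector<Node> nodes_;
};
```

Because nodes are appended to one `std::vector`, the whole tree is contiguous, which is the cache-locality argument made in the reply; each level still costs a dependent load.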

How big is your data set?

I can see your solution being more memory-efficient with large data sets, but I suspect the pointer chasing is expensive with small data sets like mine.

@@strager_ about 500 strings with lengths between 1 and 30. I figured if the tree is packed into a linear struct array then the cache locality makes iterating down it fairly fast. And if the data set included much longer strings, my approach should be even faster as it only needs to compare a few characters rather than hashing the entire string.

> my approach should be even faster as it only needs to compare a few characters rather than hashing the entire string.

My approach doesn't hash the entire string. I only hash the first two and last two characters. (This specific approach might not work for your data, but there might be a similar approach that does work.)
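A minimal sketch of a perfect hash table keyed on only the first two and last two characters. This is not the mixing used in the video: it assumes the four bytes plus the string length are packed into one integer, then brute-forces an odd multiplier for a multiply-shift hash until every key lands in its own slot of a 128-slot table. It also assumes every key has at least two characters. `key_bits`, `PerfectTable`, and `kBits` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <string_view>
#include <vector>

// Hypothetical hash input: first two bytes, last two bytes, and the length
// packed into one integer. Requires s.size() >= 2.
std::uint64_t key_bits(std::string_view s) {
  auto b = [&](std::size_t i) {
    return static_cast<std::uint64_t>(static_cast<unsigned char>(s[i]));
  };
  std::size_t n = s.size();
  return b(0) | b(1) << 8 | b(n - 2) << 16 | b(n - 1) << 24 |
         static_cast<std::uint64_t>(n) << 32;
}

class PerfectTable {
 public:
  static constexpr int kBits = 7;  // 128 slots

  PerfectTable() { slots_.fill(-1); }

  // Tries multipliers from a simple LCG until every key gets its own slot.
  bool build(const std::vector<std::string>& keys) {
    for (const auto& k : keys)
      if (k.size() < 2) return false;
    std::uint64_t state = 1;
    for (int attempt = 0; attempt < 100000; ++attempt) {
      state = state * 6364136223846793005ull + 1442695040888963407ull;
      std::uint64_t mult = state | 1;  // odd multiplier
      slots_.fill(-1);
      bool ok = true;
      for (int i = 0; ok && i < static_cast<int>(keys.size()); ++i) {
        int& slot = slots_[index(key_bits(keys[i]), mult)];
        if (slot != -1) {
          ok = false;  // collision: try the next multiplier
        } else {
          slot = i;
        }
      }
      if (ok) {
        mult_ = mult;
        keys_ = keys;
        return true;
      }
    }
    slots_.fill(-1);
    return false;
  }

  // One hash, one slot, one string comparison. Returns the key's index or -1.
  int lookup(std::string_view s) const {
    if (s.size() < 2) return -1;
    int i = slots_[index(key_bits(s), mult_)];
    return i >= 0 && std::string_view(keys_[i]) == s ? i : -1;
  }

 private:
  // Multiply-shift: keep the top kBits bits of the 64-bit product.
  static std::size_t index(std::uint64_t k, std::uint64_t mult) {
    return static_cast<std::size_t>((k * mult) >> (64 - kBits));
  }

  std::array<int, (1 << kBits)> slots_;
  std::uint64_t mult_ = 0;
  std::vector<std::string> keys_;
};
```

The search runs once, ahead of time; a real generator would emit the chosen multiplier and table as constants so lookups do no setup at all.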

You are one of the best programming presenters I've ever run across.

Wow, what good-quality videos on this channel. Thank you, strager!

10/10 video. Hearing your thought process was very interesting.

Wrt the speedup from looking at the first character: you're avoiding both the call overhead (if memcmp isn't inlined) and the memcmp loop setup/teardown (even if it is inlined) in the typical case.

Very interesting and in-depth video. Keep up the good work.
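A minimal sketch of that early-out, assuming keys are compared as `std::string_view`; `equals_fast` is a hypothetical name. When the first byte differs, the function returns without calling `memcmp` at all.

```cpp
#include <cassert>
#include <cstring>
#include <string_view>

// Checks length and first byte inline; only calls memcmp for the rest.
bool equals_fast(std::string_view a, std::string_view b) {
  if (a.size() != b.size()) return false;
  if (a.empty()) return true;
  if (a[0] != b[0]) return false;  // cheap early-out, no call needed
  return std::memcmp(a.data() + 1, b.data() + 1, a.size() - 1) == 0;
}
```

How often the early-out fires depends on the data: in a perfect hash lookup, a miss compares against exactly one candidate, so the win comes from how often that candidate's first byte already differs.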

Very thorough. Amazing performance increase. Well documented video. ❤

Idea for your perfect hash table:

Make separate hash tables for 1-character keys and 2+-character keys, and use a branchless approach (or a mask) to select the appropriate value. This of course involves two different lookups, but it could avoid the wasted memory and allow an even more optimized table for 1-character keys.

I didn't have any one-character keys, but if I did, this sounds like a reasonable thing to try.

I instantly subscribed for the thumbnail; butts are the coolest.

This video is fantastic. Well done 🎉

I don't know why, but it was so entertaining seeing your thought process, and I even learned some stuff. Very good content.