Huffman Codes: An Information Theory Perspective


- Added on 8 June 2024

- Huffman Codes are one of the most important discoveries in the field of data compression. When you first see them, they almost feel obvious in hindsight, mainly due to how simple and elegant the algorithm ends up being. But there's an underlying story of how they were discovered by Huffman and how he built the idea from early ideas in information theory that is often missed. This video is all about how information theory inspired the first algorithms in data compression, which later provided the groundwork for Huffman's landmark discovery.

0:00 Intro

2:02 Modeling Data Compression Problems

6:20 Measuring Information

8:14 Self-Information and Entropy

11:03 The Connection between Entropy and Compression

16:47 Shannon-Fano Coding

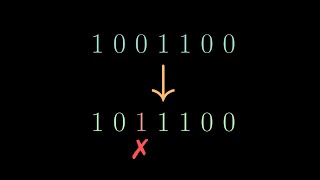

19:52 Huffman's Improvement

24:10 Huffman Coding Examples

26:10 Huffman Coding Implementation

27:08 Recap

This video is supported by a community of patrons on Patreon.

Special thanks to the following patrons:

Burt Humburg

Michael Nawenstein

Richard Wells

Sebastian Gamboa

Zac Landis

Corrections:

At 9:34, the entropy was calculated with log base 10 instead of the expected log base 2. The correct values should be H(P) = 1.49 bits and H(P) = 0.47 bits.
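To see how the base of the logarithm changes the numbers in the 9:34 correction, here is a minimal Python sketch. The distribution `P` is a hypothetical example, not the one from the video; the point is only that entropy computed in base 10 equals entropy in bits multiplied by log10(2) ≈ 0.301, so a base-10 calculation understates the value in bits.

```python
import math

def entropy(probs, base=2.0):
    """H(P) = -sum p * log_base(p); zero-probability outcomes contribute nothing."""
    # log_base(p) = log2(p) / log2(base), so base 2 gives bits and base 10 gives hartleys.
    return -sum(p * math.log2(p) for p in probs if p > 0) / math.log2(base)

P = [0.5, 0.25, 0.125, 0.125]      # hypothetical distribution, not the one from the video
h_bits = entropy(P, base=2)
h_base10 = entropy(P, base=10)
print(h_bits)                      # 1.75
print(h_base10)                    # about 0.527: the same quantity in base-10 units
print(h_base10 / math.log10(2))    # converting back recovers roughly 1.75
```

The function name `entropy` and its `base` parameter are illustrative choices; any logarithm base works as long as it is applied consistently, and base 2 is what makes the unit "bits".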

At 16:23, all logarithms should be negated; I totally forgot about the negative sign.
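The missing minus sign matters because log2(p) is negative for any probability below 1. A minimal sketch, assuming the lengths on that slide take the Shannon form ceil(-log2 p_i) (an assumption about what is shown, not a transcription of it): with the sign in place the lengths come out positive, satisfy the Kraft inequality, and give an average length L with H ≤ L < H + 1. The probabilities are hypothetical.

```python
import math

def shannon_lengths(probs):
    """Codeword length ceil(-log2 p) per symbol; the minus sign makes the lengths positive."""
    return [math.ceil(-math.log2(p)) for p in probs]

def kraft_sum(lengths):
    """A prefix code with these lengths exists if and only if this sum is at most 1."""
    return sum(2.0 ** -l for l in lengths)

probs = [0.4, 0.3, 0.2, 0.1]                      # hypothetical symbol probabilities
lengths = shannon_lengths(probs)
avg_len = sum(p * l for p, l in zip(probs, lengths))
H = -sum(p * math.log2(p) for p in probs)         # entropy in bits
print(lengths, kraft_sum(lengths), avg_len, H)
```

For these probabilities the lengths are 2, 2, 3 and 4 bits, the Kraft sum is 0.6875, and the average length is about 2.4 bits against an entropy of about 1.85 bits.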

At 27:24, I should have said the least likely symbols should have the longest encoding.

This video wouldn't be possible without the open source library manim created by 3blue1brown: github.com/3b1b/manim

Here is a link to the repository that contains the code used to generate the animations in this video: github.com/nipunramk/Reducible

Music attributions:

Midsummer Sky by Kevin MacLeod is licensed under a Creative Commons Attribution 4.0 license. creativecommons.org/licenses/...

Source: incompetech.com/music/royalty-...

Artist: incompetech.com/

Luminous Rain by Kevin MacLeod is licensed under a Creative Commons Attribution 4.0 license. creativecommons.org/licenses/...

Source: incompetech.com/music/royalty-...

Artist: incompetech.com/

This video put together a variety of great resources on information theory, data compression, Shannon-Fano codes, and Huffman codes. Here are some links that I found most helpful while doing research.

A great resource on Shannon's source coding theorem (with the proof): mbernste.github.io/posts/sour...

Huffman's original paper: compression.ru/download/articl...

Great chapter on Information Theory motivating various data compression ideas: www.princeton.edu/~cuff/ele20...

Great book for further reading: Data Compression by Khalid Sayood

Algorithmic perspective on Huffman Codes: Section 5.2 of Algorithms by Papadimitriou et al.

More elaboration on discovery of Huffman Codes: www.maa.org/sites/default/fil...

A really nice resource on data compression and information theory: www.ece.virginia.edu/~ffh8x/mo...

Implementation of Huffman Codes: www.techiedelight.com/huffman...

I love the stories where students solve unsolved problems just because the professor neglected to tell them it was difficult.

Me too, those are the best stories.

"Just call it entropy, nobody really knows what it is" Truly an intelligent man.

I think either John von Neumann was joking or just being a dickhead

10:59 Yeah, what they mean is that entropy seems to be the thing they are describing, and if someone disagrees, you can ask them to make a mathematical proof (different from ours) that defines "entropy". I think what Shannon found is entropy. But sadly, I don't think @Reducible is explaining it quite right. Entropy and prediction should not be in the same sentence. Entropy looks at data or an environment and asks how much information is needed to describe it. High entropy is when the information cannot be described with less information. Repeating patterns, of course, are the easiest to describe with less information than the original.

Prediction (inference) has a time/event element to it. Even if you approach a repeating pattern, prediction doesn't look at the whole pattern; it always asks, from what I can see of the pattern so far, what the next part will be. Something is not very predictable if you can't guess what comes next. The two sound the same, but we should be careful not to conflate these ideas.

The section leading up to 8:00 epitomizes a problem solving technique that sets apart mathematicians: rather than directly search for a formula that describes a thing, instead list what *properties* you expect that thing to have. Make deductions from those properties, and eventually all kinds of insights and formulas fall on your lap.

Would encoding something in Base Three (using either "0, 1, 2" or "0, 1, .5" ) increase the amount of information transmitted because there's an extra number or decrease the amount of information transmitted because you can make more complicated patterns?

@@sixhundredandfive7123

Base three (or any alphabet of three symbols) can encode more information *per symbol*, which means fewer symbols are needed to represent some information (a message). That would mean messages are shorter but have the same overall amount of information.
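The reply can be made concrete: one symbol drawn uniformly from an alphabet of k symbols carries log2(k) bits, so a ternary symbol carries about 1.585 bits. What the three symbols are called ("0, 1, 2" or "0, 1, .5") does not matter, only how many distinct symbols there are. A minimal sketch with a hypothetical helper `symbols_needed` that counts how many fixed-length symbols are needed to give each of 1000 hypothetical messages its own codeword:

```python
import math

def symbols_needed(num_messages, alphabet_size):
    """Smallest n with alphabet_size ** n >= num_messages (a fixed-length code)."""
    n, capacity = 0, 1
    while capacity < num_messages:   # integer arithmetic avoids floating-point log rounding
        capacity *= alphabet_size
        n += 1
    return n

print(math.log2(3))                                    # bits per ternary symbol (about 1.585)
print(symbols_needed(1000, 2), symbols_needed(1000, 3))  # 10 7
```

Ten binary digits versus seven ternary digits: the messages get shorter, but the amount of information they identify is the same.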

Yeah, that's also how you actually obtain the determinant, the most striking example to me. You prove that among all alternating (swapping two rows flips the sign) multilinear (linear in each row separately) forms on matrices (with entries from a commutative ring with identity), there is exactly one that outputs 1 for the identity matrix: the determinant.

From this definition you can obtain a nicer result: every alternating n-linear form D on n n-tuples (the rows that stack to give the matrix A) must satisfy

D(A) = det(A) · D(I). This is used to prove a lot of properties of determinants.
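The properties in this comment can be checked numerically. A minimal sketch that uses the Leibniz formula (an explicit formula for the determinant; the matrix `A` is a hypothetical example) to confirm on one matrix that swapping two rows flips the sign, scaling a row scales the result, and the identity maps to 1. It illustrates the properties; it does not prove uniqueness.

```python
from itertools import permutations

def sign(perm):
    """Sign of a permutation: +1 for an even number of inversions, -1 for odd."""
    n = len(perm)
    inversions = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

def det(rows):
    """Determinant by the Leibniz formula: sum over permutations of sign * product."""
    n = len(rows)
    total = 0
    for perm in permutations(range(n)):
        term = sign(perm)
        for i in range(n):
            term *= rows[i][perm[i]]   # one entry from each row and each column
        total += term
    return total

A = [[2, 1, 0], [1, 3, 4], [0, 5, 6]]           # hypothetical integer matrix
I = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
A_swapped = [A[1], A[0], A[2]]                  # rows 0 and 1 exchanged
A_scaled = [[3 * x for x in A[0]], A[1], A[2]]  # row 0 multiplied by 3

print(det(A), det(A_swapped), det(A_scaled), det(I))   # -10 10 -30 1
```

The Leibniz sum grows factorially with n, so it is only suitable for small illustrative matrices like this one.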

The legend is back. I love your work, the production quality, the content, everything! You are the computer science equivalent of 3b1b.

Hopefully not the equivalent of the 3b1b who apparently stopped making videos

@@muskyoxes looks like he is that too lol

@@muskyoxes b...but he still makes videos...

The wisest thing Huffman’s professor did was not mention it was an unsolved problem.

Great, clear presentation. Others on YouTube are so fast that they require you to pretty much already know how it works before you watch, which is insane.

👌🏽

20:26, "optimal binary representation must have the two least likely symbols at the bottom of the tree"

My first thought: "So can we count it as one node and repeat the process? Nah, that can't be right, that would be too easy"

XD turns out it really is easy

You actually need to prove that you may build a node from those two. Also, the author of the video didn't prove why they should have the longest codes; it's not obvious. You need to apply an exchange argument, though a very basic one.

At that moment I thought "just apply the same process recursively" which turns out to be the case
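The recursive idea in this thread (treat the two least likely symbols as one node and repeat) can be sketched with a min-heap. This is a minimal sketch, not the implementation from the video; the function name `huffman_codes`, the 0-left/1-right convention, and the sample counts are illustrative assumptions.

```python
import heapq
from itertools import count

def huffman_codes(freqs):
    """Build a prefix code for {symbol: weight} by repeatedly merging the two lightest subtrees.

    Assumes symbols are not tuples, since tuples are used here for internal nodes.
    """
    tiebreak = count()  # unique counter so the heap never has to compare two trees
    heap = [(w, next(tiebreak), sym) for sym, w in freqs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:                   # a lone symbol still needs one bit
        return {heap[0][2]: "0"}
    while len(heap) > 1:
        w1, _, t1 = heapq.heappop(heap)  # least likely subtree
        w2, _, t2 = heapq.heappop(heap)  # second least likely subtree
        # Treat the pair as a single node with the combined weight and repeat.
        heapq.heappush(heap, (w1 + w2, next(tiebreak), (t1, t2)))

    codes = {}
    def walk(tree, prefix):
        if isinstance(tree, tuple):      # internal node: 0 for left, 1 for right
            walk(tree[0], prefix + "0")
            walk(tree[1], prefix + "1")
        else:
            codes[tree] = prefix
    walk(heap[0][2], "")
    return codes

counts = {"a": 45, "b": 13, "c": 12, "d": 16, "e": 9, "f": 5}   # hypothetical counts
codes = huffman_codes(counts)
print(codes)
```

With these counts, `a` receives a 1-bit code while `e` and `f` receive 4-bit codes, so the least likely symbols end up deepest in the tree. A heap is one common way to find the two smallest weights quickly; it is a choice made here, not necessarily the one used in the video.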

Having also learned the Huffman encoding algorithm as an example of a greedy algorithm (along with its accompanying greedy-style proof), this video provided me with a new perspective which, combined with the interesting history of alternative algorithms, gave me a fuller understanding and appreciation for the topic, which I have to admit is extremely well presented!

Your presentation is entertaining, thought-provoking and truly educational. One of the best channels on YouTube in my opinion.

The Huffman encoding algorithm looks very similar to dynamic programming. So I wondered which came first, and it seems that they were both developed around the same time, namely around 1952.

Yeah, I googled it, and apparently this is a greedy algorithm that converges to the global optimum. Greedy algorithms and dynamic programming are similar in that they use subproblems to build up the full solution. In general, greedy algorithms are only guaranteed to reach local optima because they do not exhaustively check all subproblems, but they are faster. DP is slower, but global optimality is guaranteed. For this problem the greedy algorithm guarantees the global optimum, so we get the best of both worlds.

This video is an amazing compilation of information, in a well presented order and in an engaging way. Information well encoded! Cheers

The smallest shift in perspective can sometimes make all the difference

This is very impressive. It takes a lot of hard work to present so much so well. Excellent vid!

I just recently learned about Huffman encoding and this video is absolutely AMAZING. You really motivated the solution incredibly well! Congratulations and hope you make more videos

I really love these algorithms that look so obvious and simple, yet I know that even with all the time in the world I couldn't have invented them myself.

Huffman encoding is only optimal if each symbol is conditionally independent of all previous symbols, which is almost never the case, which is why Huffman encodings didn’t solve compression, though they are typically used as part of a broader compression algorithm.

Ray Solomonoff did a lot of important work in this area with his invention of algorithmic complexity theory

Ooh interesting. In the equation constraints section it was mentioned (almost as just a side note) that the events had to be independent of each other, and for a moment I wondered what if they weren’t independent? Now I want to know :0

@@redpepper74 You can compress a lot more when the symbols are conditionally dependent upon previous symbols, but you need to use other methods, typically those that rely upon conditional probability

@@redpepper74 Huffman encodings are based purely on symbol frequencies and don’t take into account which symbols came before. So, for example, if you see the sequence of symbols “Mary had a little”, you can immediately recognize that the next four symbols are very likely to be “lamb”. Huffman codes do not see this.

@@grahamhenry9368 I suppose what you could do then is get a separate Huffman encoding table for each symbol that tells you how likely the next symbol is. And instead of using single characters you could use the longest, most common groups of characters

@@redpepper74 Yeap, I actually coded this up once, but only looking back at the last character. The important idea here is that the ability to predict future symbols directly correlates with how well you can compress the data because if a symbol is very probable then you can encode it with fewer bits via a Huffman encoding. This shows that compression and prediction are two sides of the same coin. Markus Hutter has done a lot of work in this area with his AI system (AIXI) that uses compression algorithms to make predictions and attempts to identify optimal beliefs
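The per-context idea in this thread can be quantified with empirical entropies. A minimal sketch (the function names are hypothetical) comparing bits per symbol for one global table against one table per previous symbol: on a strictly alternating string, the order-0 entropy is 1 bit per symbol, while the order-1 entropy is 0 because the previous symbol fully predicts the next one.

```python
import math
from collections import Counter, defaultdict

def entropy_from_counts(counts):
    """Empirical entropy in bits of a Counter of symbol occurrences."""
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def order0_entropy(text):
    """Bits per symbol when every symbol is coded with one global table."""
    return entropy_from_counts(Counter(text))

def order1_entropy(text):
    """Bits per symbol when each symbol is coded with a table chosen by the previous symbol."""
    tables = defaultdict(Counter)            # previous symbol -> counts of the next symbol
    for prev, cur in zip(text, text[1:]):
        tables[prev][cur] += 1
    pairs = len(text) - 1
    # Weight each context's entropy by how often that context occurs.
    return sum(sum(t.values()) / pairs * entropy_from_counts(t) for t in tables.values())

text = "ab" * 8                               # hypothetical, perfectly predictable input
print(order0_entropy(text), order1_entropy(text))   # 1.0 0.0
```

These figures are lower bounds on the average length that any per-symbol prefix code, Huffman included, can achieve under each scheme; better prediction lowers the bound, which is the "two sides of the same coin" point above.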

Really loved it! It was knowing about Huffman codes that made me take information theory classes. It is really an elegant piece of mathematics.

Incredible educative video, I respect the amount of work you put into this! I enjoyed the overview of the field and the connection between information and probability theory was splendidly shown! I'm looking forward to your future videos!

Best explanation of Huffman Encoding I've seen. Bravo!

Man it's so amazing that any of this works at all. Being an autodidact philomath and self educated amateur mathematician and cryptographer, I am so glad to have all of this information available to learn at my own pace. Thank you.

This has got to be the most amazing video I have ever seen. Seriously! It was absolutely the most intriguing thing I have never pondered. Great explanation skills for slow people like me but yet very entertaining at the same time. Thank you sir for your effort and information!

Your voice is great, the visuals are on point, and I finally understand Huffman codes. Great job; subscribed!

Just yesterday I wondered when you'll upload next. Awesome video as usual!

I'm taking a numerical analysis course right now, and my chapter was just on Huffman Codes, great timing!

Great video. I really appreciate the hard work and explanations you put in your videos.

Thx so much. As a CS student, I really appreciate this video. This is exactly what I was looking for.

As always, amazing quality of a video!! loved it. Please keep doing them

How am I just finding this channel? This guy’s awesome

Lovely explanation and storytelling!

This was so fun to watch. Please keep making more videos.

woahhhhhhhhhh 🔥🔥🔥🔥🔥🔥 I absolutely have no words for this masterpiece. The way you explained it, now I'm going to take my information theory classes seriously 😂 Thanks a lot. Great work! Sharing this with my friends as this deserves lot more appreciation ♥️

Very good video. It's the first one I've seen on YouTube that puts Shannon-Fano and Huffman coding together and tries to explain the differences between them. Most videos only explain the Huffman algorithm and skip all the history behind it.

Brilliant video! Thanks for your wonderful effort.

This channel is pure gold

Many years ago after studying this algorithm I sent a fan letter to D. Huffman. He wrote back in appreciation because he'd never gotten a fan letter before.

What an exceptional video, that was so fun!

Thank you for keeping it precise but also intuitive!!!

Yoooo, your videos are awesome, great day when you upload

The relation between uncertainty and information really blew my mind, and I can really see how compression works. It's like asking: "Would you like us to message you every time it's sunny in the Sahara Desert, or every time it's raining in the Sahara Desert?" Because you know that the probability of sun is much higher than that of rain, choosing the rain option gives you practically the same amount of information with far fewer messages.
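The Sahara example maps directly onto self-information, -log2(p). A minimal sketch with a hypothetical rain probability of 1/64: a rain report carries 6 bits, a sun report only about 0.023 bits, and the average per daily report (the entropy of the weather) is roughly 0.116 bits, far below the 1 bit a plain yes/no message would spend every day.

```python
import math

def self_information(p):
    """Surprise, in bits, of observing an event with probability p."""
    return -math.log2(p)

p_rain = 1 / 64            # hypothetical chance of rain on a given day
p_sun = 1 - p_rain

print(self_information(p_rain))   # 6.0 bits: rare news is very informative
print(self_information(p_sun))    # about 0.023 bits: "sunny again" says almost nothing

# Average information per daily report, i.e. the entropy of the weather.
avg_bits = p_sun * self_information(p_sun) + p_rain * self_information(p_rain)
print(avg_bits)                   # about 0.116 bits, far below 1 bit per day
```

Only being told about rainy days loses nothing, because every day without a message is known to be sunny.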

Wow. Great video!

The way you explained it was so clear and succinct that I guessed "Oh shit it's recursion!!" at 23:12. Good work on the organisation of explaining topics & pacing, love this channel!

Great video. I was a bit confused at the end, though; going further in depth with an example of Huffman encoding and decoding, compared against an uncompressed encoding, would make it a bit clearer. Still an amazing video!

man I love this channel. so well made.

21:09 S_1 and S_2 don't HAVE to share a parent node in the optimal tree; in fact, they can be exchanged with any other nodes on the bottom layer. What is true instead is that you can always construct an optimal tree with them paired from one where they are not, through the aforementioned exchange.
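The exchange argument in this comment is simple arithmetic. Swapping the depths of symbols i and j reduces the expected length sum p·l by (p_i - p_j)(l_i - l_j): the cost drops when the more probable symbol was deeper, and is unchanged when both sit on the same layer. That second case is why S_1 and S_2 can always be moved into sibling positions on the bottom layer without losing optimality. A sketch with hypothetical probabilities and depths:

```python
def expected_length(probs, lengths):
    """Average codeword length: sum of p_i * l_i."""
    return sum(p * l for p, l in zip(probs, lengths))

def swap_depths(lengths, i, j):
    """Return a copy of lengths with the leaves of symbols i and j exchanged."""
    out = list(lengths)
    out[i], out[j] = out[j], out[i]
    return out

# Hypothetical code: probabilities and leaf depths (Kraft sum 1/2 + 1/8 + 1/8 + 1/4 = 1).
probs   = [0.4, 0.3, 0.2, 0.1]
lengths = [1, 3, 3, 2]        # the 0.1 symbol sits above the 0.3 symbol

# 0.3 is deeper than 0.1, so the swap saves (0.3 - 0.1) * (3 - 2) = 0.2 bits on average.
improved = swap_depths(lengths, 1, 3)   # -> [1, 2, 3, 3]
print(expected_length(probs, lengths), expected_length(probs, improved))

# The two least likely symbols (0.2 and 0.1) now share the bottom layer;
# exchanging them with each other leaves the cost unchanged.
assert expected_length(probs, swap_depths(improved, 2, 3)) == expected_length(probs, improved)
```

The two printed averages are roughly 2.1 and 1.9 bits.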

Dude long time no see.. I am so happy that you are back. Please make more videos...

There was a lot of information in that video, and I could decode it all! 🙂

It is definitely not easy to explain something so well, so thank you.

Holy!! Thanks for the journey and nice video! :D

I think there's a missing negative sign in the lengths at 16:11. Anyway, one of the best videos on the topic; I subscribed a while ago and I don't regret it.

Great explanation. I appreciate your efforts very much.

People be sleeping on this channel. Incredible content.

I've watched many of your beautiful presentations and found them very instructive. This one clearly demonstrates not only Huffman's idea but also the "bottleneck" in any communication channel formalized by Shannon himself.

I'm only just starting to learn about Information Theory - but this was very accessible. Thanks, subscribed.

Nice, very clear on the logic.

Guy, thank you for this amazing video.

You broke this down soooo well

This is great! Amazing work

Excellent explanation! Thanks!

This is really good! Thank you! I learned a lot.

Outstanding, as always.

Thank you for this video. Very informative!

Amazing work

Thanks for this awesome explanation!!!

Congratulations on posting such excellent, informative content!

Great video, thanks!

Great stuff, thanks for sharing!

Underrated channel

wow, very well explained. great job sir

awesome! thank you very much for the explanation

Amazing video. You are very good at this.

Thank you, it's really helpful!

This is truly informative.

Awesome video!!!

The Huffman algo is so brilliant...

GIF's LZW is another system that sounded obscure until I dared to look, and it was in fact much simpler than I thought. It would be a great subject for your next videos.

The YouTube algorithm usually sucks and just puts some shitty Latin music or stupid trending stuff in my feed, but today it feels generous. It's been a long, long time since I've seen content like this. Man, keep going; you have an incredible way of explaining things: simple but clear and clever. Amazing channel, pal.

Great as always!

I already knew Huffman's algo before watching this video... 6:53 gave me goosebumps :) I mean what---?!?!?!? The correlation, omg... tysmmm for this _/\_

great video man

Content on your channel is awesome!!!

23:40 I have seen other videos about the Huffman tree construction before watching this video, but this explanation is clearest. I was not sure how the nodes after the initial two nodes should be added.

Very great channel.

10:50 JvN's second and most important reasoning for the term _entropy_ is truly the genius of a mad mathematician.

Amazing explanation :)

Yayyy, new videos, so excited

27:05 I think it would be more eye-pleasing if the codes were written in a monospace font.

incredible, thank you

Wow this was great!

Thank you, sir. Because of your video, I learned how uncertainty is compressed in nature.

Great video

Awesome work :D

eagerly waiting!!!!!!!!!!!!!

Good visual explanation. But the part about actually encoding a message into bits and decoding the message was completely missing. So while the method for obtaining the optimal encoding is clear, how to apply the Huffman code is not explained at the end. Also, this compression rate applies only in the limiting case (infinite stream lengths), where sending the distribution (tree) to the decoder is negligible. In real data compression, the compressed stream, file or block must include the distribution as well.

Good feedback, thank you. These details were part of the script at one point, but I kind of went back and forth on whether I should include it in the final version of the video. I decided to not include them to maintain focus on the actual motivation of Huffman codes, but thank you for bringing these details up in this comment. They are indeed an essential part of doing this in practice.

@@Reducible Maybe in a follow-up video you can explain how you would implement this in practice, and maybe how it's done in the real world, like the Deflate algorithm.

I completely disagree with this critique. There are already a lot of videos showing how to compute Huffman codes and plenty of tutorials on programming an encoder/decoder. This one focuses on what is missing from the usual teaching (you could say it maximizes information entropy): an intuitive, high-level overview of how the code evolved, where it came from, what it means, etc., as well as beautiful visuals. Usually, videos on these kinds of topics are dry, textbook descriptions with nothing but formulas and step-by-step instructions on computation. I also think that when someone is being introduced to new ideas, too many practical, real-world considerations can muddy the waters and make things really hard to understand. Once a good understanding is in place, then as you introduce complexity, it makes sense where it is coming from and why it matters.

@@atrus3823 It was not a video premise to show how to compute them, but a brief explanation of decoding would have been nice.

I disagree. He did explain how to turn binary trees into bit representations at the start. While this wasn't done in the code example, in my opinion that's fine; it's easy enough to figure out: assign 0s to left branches and 1s to right branches (or vice versa). Since the Node class keeps track of what's on the right and left, it really wouldn't be hard to get the binary representations, and including this in the video would just be distracting and not that important for understanding how the algorithm works.
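For readers who wanted the missing encode/decode step, a minimal sketch. The code table is hypothetical (the kind of table you might read off a small tree using 0 for left and 1 for right), and the dictionary-based decoder is a simplification of walking the tree bit by bit. It relies on the prefix property: no codeword is the beginning of another, so the first match is the only possible one. As the first comment in this thread points out, a real compressed file must also carry the table or tree so the decoder can rebuild it.

```python
def encode(message, codes):
    """Concatenate the codeword of each symbol."""
    return "".join(codes[s] for s in message)

def decode(bits, codes):
    """Read bits left to right; with a prefix code, the first complete match is unambiguous."""
    lookup = {code: sym for sym, code in codes.items()}
    out, current = [], ""
    for b in bits:
        current += b
        if current in lookup:
            out.append(lookup[current])
            current = ""
    if current:
        raise ValueError("trailing bits do not form a complete codeword")
    return "".join(out)

# Hypothetical prefix code, e.g. read off a Huffman tree with 0 = left and 1 = right.
codes = {"A": "0", "B": "10", "C": "110", "D": "111"}
msg = "ABACABAD"
bits = encode(msg, codes)
print(bits, len(bits), "bits vs", 2 * len(msg), "bits with a fixed 2-bit code")
assert decode(bits, codes) == msg
```

Here the frequent symbol `A` gets a 1-bit codeword, so the eight-symbol message takes 14 bits instead of 16, before counting the cost of sending the table.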

Wow, great video man! I forgot multiple times that you aren't 3b1b

The day Reducible uploads is a good day

omg!! Perfect content!

I really enjoy your videos :)

This was a really cool watch! The more I immerse myself into information theory the more it interests me :D

I do disagree with the part about the importance of naming things, though: names have power! The roots in a word tell you what it means, the meanings of a word in some contexts hint at its meaning in others, and the connotation that a word has affects the way that people think about it. (Yes, my passion for writing clean code has turned me into someone who thinks way too much about naming conventions :P )

On the other hand, words can develop meanings well beyond their origins. Also, even the original meaning doesn't always perfectly correlate with the roots. It just isn't as simple as you claim.

thanks for the video

The data compression algorithm presented in this video reminds me of a Recursive Neural Network paper covered in one of Yannic Kilcher's videos.

You are back.. 🥳🥳🥳✨✨✨✨

I've been watching for about a week, and in that time it grew from 89k to 91.5k.

Hey its me Huffman. I made these codes.

YOOOOW AN UPLOAD

I wish I could like this twice.

this is awesome