LLM Control Theory Seminar (April 2024)

Vložit

- čas přidán 4. 06. 2024

- Stay tuned for our new results in our preprint, "What’s the Magic Word? A Control Theory of LLM Prompting": arxiv.org/abs/2310.04444

Follow twitter and aman-bhargava.com/ for further updates :)

- Aman's twitter: / abhargava2000

- Cameron's twitter: / witkowski_cam

- Looi's twitter: x.com/Sz_Looi

Thank you to the Information, Geometry, and Physics Seminar run by Juan Pablo Vigneaux for the opportunity to present our work. For a full reference list, see our preprint.

0:00 Introduction

1:51 1: MOTIVATIONS

1:55 Zero-shot learning miracle (Motivations)

3:28 Background on LLMs? (Motivations)

4:22 Transformer Information Flow for Generative Inference (Motivations)

6:03 LLMs are increasingly used as systems (Motivations)

6:42 We do not understand LLMs as systems (Motivations)

9:23 Control theory is great for understanding systems (Motivations)

11:36 Prompt engineering = system control problem (Motivations)

14:12 LLM systems get complicated fast (Motivations)

15:16 Plan for LLM Control Theory (Motivations)

16:58 2: FRAMEWORK

17:01 LLM systems are unusual (Framework)

18:44 LLM system formalization (Framework)

21:43 Reachability (Framework)

23:43 Reachable Sets (Framework)

25:11 k-epsilon Controllability (Framework)

27:29 3: SELF-ATTENTION CONTROLLABILITY THEOREM

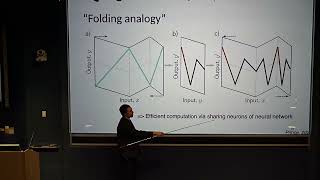

27:40 Background on self attention (Theorem)

31:23 Controllability for self-attention (Theorem)

32:20 Theorem (Theorem)

40:23 Connecting self-attention theorem with empirical results (Theorem)

41:13 EXPERIMENTAL RESULTS ON LLM CONTROLLABILITY

41:24 Measuring k-epsilon controllability via prompt optimization (Experiments)

42:58 Experimental design for k-epsilon controllability measurement (Experiments)

45:15 Results on k-epsilon controllability (Experiments)

48:36 OPEN QUESTIONS IN LLM CONTROL THEORY

49:57 Typical sequences, AEP

50:58 More next steps

52:21 Acknowledgements - Krátké a kreslené filmy

Excellent presentation, was a blast working on this together last summer! :D

terrific piece of work!

Many thanks for sharing

Really enjoyed this, great work, and very insightful.

Amazing work and a very enjoyable presentation!

Please run this through Adobe Podcast AI, you will get studio quality sound from this noisy track.

Absolutely brilliant. I was thinking along similar lines a few months ago from the perspective of an "Ideal Language Model" and working backwards from there. The direction you have taken progress made is really outstanding!

Thank you!!!

the best timestamps ever

Great talk, I liked it!

Wow! what is this! wow... very interesting content!!! Thanks

Excellent talk

Crazy original work haha, love it!

Great job young man, bright future!

This was wonderful.

That's awesome!

what a great talk!

Thanks

Very insightful. Starting from this project, I hope that we can control all the AI architecture.

Very cool and clear!

great talk!

Let's slap PID controller on top of LLM and see where it get us😁

I think there should be a deeper interrogation with regards to the functions part with great emphasis of category theory and other mathematical concepts with regards to functions such that there be no contradiction with the broader general frame of mathematics, in my preferrence random matrices analogy is more closely aligned with llms, but awsome work read the paper, cool hair too, 😉

is this about control vectors being embedded into each layer which helps shape the output?

50:10 : so, if A^{(n)}_\varepsilon is the set of sequences of length n which have a probability greater than \varepsilon under P_\theta , where P_\theta is the distribution that the model usually produces (...when it doesn’t have the controlling prompt?)

Then, if you want to control the distribution of output sequences, then, I guess you would want to make the sequence that is produced lie in A’^{(n)}_\varepsilon which would be defined similarly, but in terms of a desired probability distribution Q instead of the P_\theta ? Is that correct? Or, perhaps I’m misunderstanding

nice

Would've liked to hear the rest of the Q&A. Do you think Wikitext is appropriate for this kind of exercise since it was probably part of the training and therefore easily reachable?

An important take away of this is you can use relatively short u to achieve the desired output, meaning for certain use cases, simple exhaustive (or gradient) prefix prompt tuning can achieve better performance (not really a secret but the fact that k ~ 10 is surprising).

Great question! Wikitext being in the training data was the main motivation to determine the reachability to the top-75 target output tokens (according to P_{LM}(y | x_0)) and the random target token outputs (uniformly sampled from V).

I plan to expand the set of experiments/data sources shortly, any ideas on good alternatives to Wikitext for sampling x_0, y pairs?

@@lupita3689 I see. So what is the u for Roger Federer is the greatest kangaroo?

@@amanb2000 Not too familiar with these smaller models and their respective training sets, but I wonder if you could try to use any arbitrary training data using adapters and test this much more broadly, or at least see if this framework can lead to techniques in more practical applications.

@@Hotmedal I don’t think the u of this specific example was detailed in the paper, depends on if it’s in the list of possible tokens I guess?

They really need to capture the audio directly from the speaker's mic into recording. The double recording is scratchy. Great video though! :)

Basically he is teaching rnn in his word

As a non-phd student who does not have a full grasp of how these systems work I am curious. Is it possible to train a model to be able to accurately represent its internal structure in it's outputs?

I've been playing with fine tuning, and I'm wondering if there is even a way to train a model to output accurate information about the internal state of the model itself

This paper might be relevant arxiv.org/pdf/2305.18449

I haven't heard of anybody explicitly fine-tuning the output of the model to reflect accurate information about the internal state of the LLM. It's an interesting idea!

One more question, do you think that it's theoretically possible to find an optimal sequence of tokens that would get a transformer large language model to reprint a response that is analogous to its previous response. Basically a fundamental way to 'communicate' to the model to run the token input of the last response?

Just in a an abstract way, do you think that might be possible?

I'm here for a reason. Wow here's a fun idea to integrate into control theory:

How do the dynamics evolve between AI architects who design on the fly websites based on basic building blocks of the site already present in the code base and AI agents designed to be autonomous generally across the space of websites, and also specialized on the AI architect's pages, considering the conditioning on both Human's needing to use the site, and no human's need to use the site?

Isnt reachability similar to a GAN discriminator

Why is this prompt not analyzed? "Please complete this sentence with the word "kangaroo": Roger Federer is a" ? Or am I missing something? Isn't that shorter than the story?

Give it a try control.languagegame.io/inference

Everything’s a function 😴

Always has been

Nope, relations are not functions. One input to two outputs is not allowed.

@@shoopinc formally sure, I would call that a well-behaved function. But I mean in general any process can be interpreted as a function. Moreover LLMs will definitely give you many different outputs for the same exact input lol.

@@shoopincWhile that *is* true, a relation can be described by a function from the product of the domain and codomain of the relation, to {0,1} , sending (a,b) to 1 if a R b, and to 0 otherwise...

@@shoopinc you can trivially generalize this by making outputs have a field called "datetime" - we then map functions(inputs) -> some set of outputs

Nothing like grandma’s bioweapon recipe.